文章目录[隐藏]

Yue Lu from Twitter, and collaborator Juncheng Yang and Rashmi from CMU. Pelikan (starting 2020), mostly written by Brian Martin(I really like his obsession to rust) is the current production in-memory cache for small data in twitter which is inspired by the traces captured with eBPF last year can do a smaller granularity analysis of memory cache.

Precondition

-

Slab and LRU are usually used in commercial "in memory" cache, for Memcache and Redis.

-

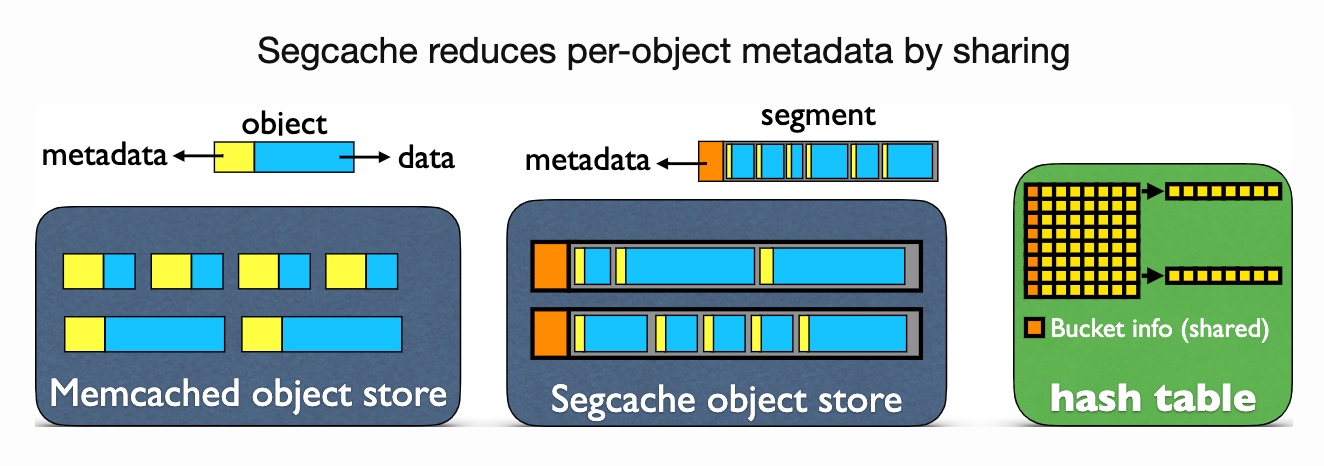

Slab can cause more than 2x memory usage as intended.

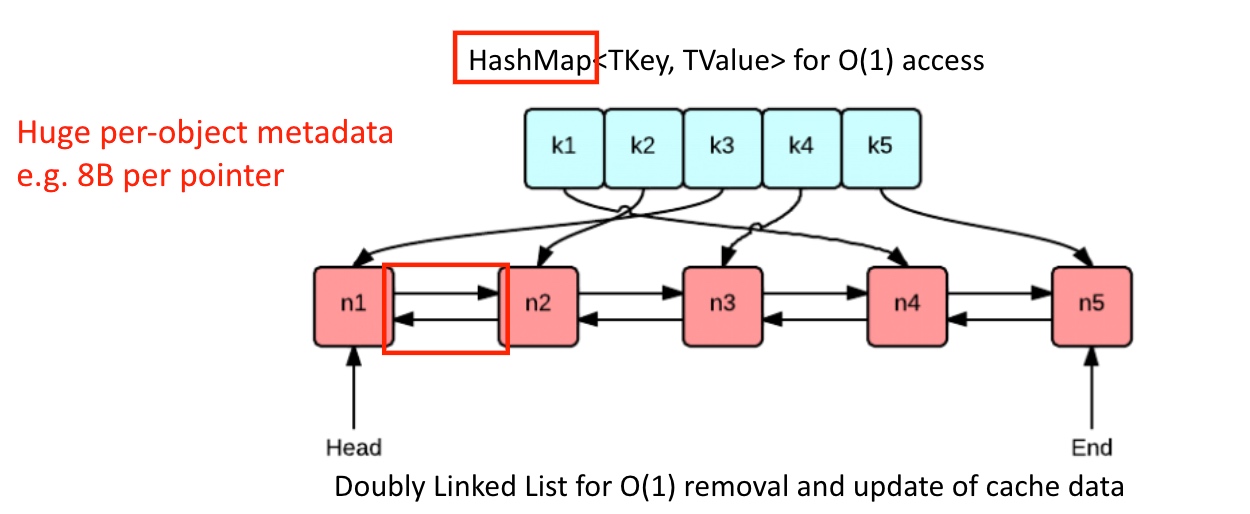

- Metadata in a doubly-linked list

- HashMap stores K-V

- When evict, just remove the end and the last key.

-

LRU lock is not scalable, could lower the rules for eviction to expiry and add discretized TTL with similar metadata concatenation.

-

CAS can be applied when storing in buckets.

-

-

Metadata size and TTL is considered in the industry because they want QPS and are more money efficient.

Good

- Introduce an industrial view of the cache. resolve some of them.

- Add a frequency counter to emulate the eviction since the memory could drain and should recollect.

- The experiment in the paper could be cross-validated with previous points.

- r-LHD(no scanning workload here means no benefit) and r-Hyperbolic: random memory accesses means poor CPU hit rates

- s-Memcached: scanning hurts performance

Bad

- In the experiment, talking about Hit rate/ Miss rate 10x is meaningless.

- Sensitivity analysis algorithm is not clear to understand.

- They will merge N consecutive, un-expired, and un-merged segments

- Segment homogeneity (retain 1/N per segment)

- Across TTL bucket: RR/FIFO/RANDOM

- Merge and create in place.

seg_evictable(struct seg *seg)

{

if (seg == NULL) {

return false;

}

bool is_evictable = seg->w_refcount == 0;

/* although we check evictable here, we will check again after

* we grab the lock, so this is part of the opportunistic concurrency control */

is_evictable = is_evictable &&

(seg->evictable == 1) && (seg->next_seg_id != -1);

/* a magic number - we don't want to merge just created seg */

/* TODO(jason): the time needs to be adaptive */

is_evictable = is_evictable

&& (time_proc_sec() - seg->create_at >= evict_info.seg_mature_time);

/* don't merge segments that will expire soon */

is_evictable = is_evictable &&

seg->create_at + seg->ttl - time_proc_sec() > 20;

return is_evictable;

}

static inline int

cmp_seg_FIFO(const void *d1, const void *d2)

{

struct seg *seg1 = &heap.segs[*(uint32_t *) d1];

struct seg *seg2 = &heap.segs[*(uint32_t *) d2];

/* avoid segments that are currently being written to */

if (!seg_evictable(seg1)) {

return 1;

}

if (!seg_evictable(seg2)) {

return -1;

}

return MAX(seg1->create_at, seg1->merge_at) -

MAX(seg2->create_at, seg2->merge_at);

}