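The CSE220 project is to write a simulator. My tentative plan was an RVV GPU SM subsystem (in the style of 承影), but I ran out of time, so that is off the table. The biggest difference between this simulator (ESESC) and Sniper is that this one is time-sampling based, which makes it considerably more accurate.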

Project Organization

qemuStarted = true;

QEMUArgs *qdata = (struct QEMUArgs *)threadargs;

int qargc = qdata->qargc;

char **qargv = qdata->qargv;

MSG("Starting qemu with");

for(int i = 0; i < qargc; i++)

MSG("arg[%d] is: %s", i, qargv[i]);

qemuesesc_main(qargc, qargv, NULL);

MSG("qemu done");

QEMUReader_finish(0);

pthread_exit(0);

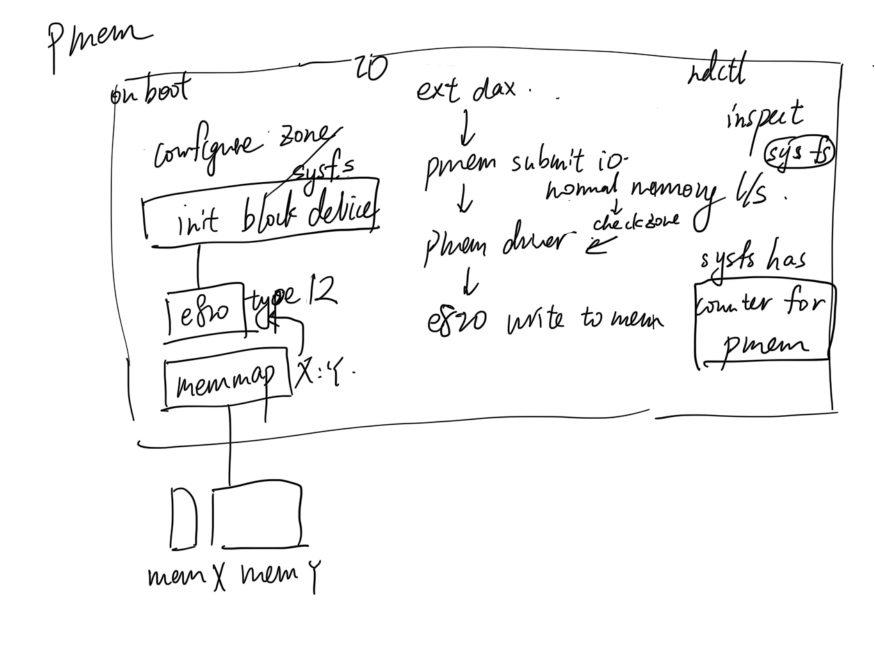

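At the top level everything goes through QEMU first. A bootloader was written to get hold of physical memory, and tracing hooks were inserted into QEMU to record memory accesses. QEMU runs to completion before the rest proceeds (so why not use the JIT here? Probably a limitation of when it was developed). After that, an instruction parser is attached on the simulator side.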

Once the simulator enters its main loop, it collects timing instrumentation data from QEMU (libemul).

// 4 possible states:

// rabbit : no timing or warmup, go as fast as possible skipping stuff

// warmup : no timing but it has system warmup (caches/bpred)

// detail : detailed modeling, but without updating statistics

// timing : full timing modeling
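To make the four states concrete, here is a minimal sketch of a sampler that cycles through them. The mode names follow the comment above; the `TimeSampler` class, the per-mode budgets, and the use of instruction counts as a stand-in for time are all assumptions for illustration, not ESESC's actual sampler.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Mode names follow the comment above; everything else is illustrative.
enum class Mode { Rabbit, Warmup, Detail, Timing };

// Hypothetical sampler: each mode lasts a fixed number of instructions,
// then the sampler advances to the next mode and wraps around.
class TimeSampler {
public:
  // Budgets are indexed in enum order: Rabbit, Warmup, Detail, Timing.
  explicit TimeSampler(std::array<std::uint64_t, 4> budget) : budget_(budget) {
    for (std::uint64_t b : budget_) assert(b > 0 && "each mode needs a non-zero budget");
  }

  // Account for one executed instruction and return the mode it ran in.
  Mode step() {
    Mode current = mode_;
    std::size_t idx = static_cast<std::size_t>(mode_);
    if (++used_ == budget_[idx]) {
      used_ = 0;
      mode_ = static_cast<Mode>((idx + 1) % 4);
    }
    return current;
  }

  // Only Timing updates statistics; Detail models timing without them.
  static bool updatesStats(Mode m) { return m == Mode::Timing; }
  // Caches and branch predictors are exercised in every mode except Rabbit.
  static bool warmsState(Mode m) { return m != Mode::Rabbit; }

private:
  std::array<std::uint64_t, 4> budget_;
  Mode mode_ = Mode::Rabbit;
  std::uint64_t used_ = 0;
};
```

With budgets such as `{2, 1, 1, 1}`, successive `step()` calls return Rabbit, Rabbit, Warmup, Detail, Timing and then start over. Most instructions would normally land in the cheap Rabbit mode, which is where the speedup of sampling comes from.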

Another thread runs the model below. During initialization, when it reaches the memory system, it reads the cache parameters from the conf file.

-exec bt

#0 CCache::CCache (this=0x555558ae4360, gms=0x555558ae4280, section=0x555558ae42c0 "IL1_core", name=0x555558ae4340 "IL1(0)") at /home/victoryang00/Documents/esesc/simu/libmem/CCache.cpp:78

#1 0x0000555555836ccd in MemorySystem::buildMemoryObj (this=0x555558ae4280, device_type=0x5555589fa820 "icache", dev_section=0x555558ae42c0 "IL1_core", dev_name=0x555558ae4340 "IL1(0)") at /home/victoryang00/Documents/esesc/simu/libmem/MemorySystem.cpp:61

#2 0x000055555586c99e in GMemorySystem::finishDeclareMemoryObj (this=0x555558ae4280, vPars=..., name_suffix=0x0) at /home/victoryang00/Documents/esesc/simu/libcore/GMemorySystem.cpp:320

#3 0x000055555586c497 in GMemorySystem::declareMemoryObj (this=0x555558ae4280, block=0x5555589ee260 "tradCORE", field=0x555555a7e573 "IL1") at /home/victoryang00/Documents/esesc/simu/libcore/GMemorySystem.cpp:242

#4 0x000055555586bb0e in GMemorySystem::buildMemorySystem (this=0x555558ae4280) at /home/victoryang00/Documents/esesc/simu/libcore/GMemorySystem.cpp:148

#5 0x000055555581c764 in BootLoader::createSimuInterface (section=0x5555589ee260 "tradCORE", i=0) at /home/victoryang00/Documents/esesc/simu/libsampler/BootLoader.cpp:281

#6 0x000055555581c1c4 in BootLoader::plugSimuInterfaces () at /home/victoryang00/Documents/esesc/simu/libsampler/BootLoader.cpp:219

#7 0x000055555581cba1 in BootLoader::plug (argc=1, argv=0x7fffffffd888) at /home/victoryang00/Documents/esesc/simu/libsampler/BootLoader.cpp:320

#8 0x000055555572274d in main (argc=1, argv=0x7fffffffd888) at /home/victoryang00/Documents/esesc/main/esesc.cpp:37
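The backtrace shows `MemorySystem::buildMemoryObj` receiving a device type (`"icache"`), a conf section (`"IL1_core"`) and an instance name (`"IL1(0)"`). A rough sketch of that kind of factory dispatch is below. All type names ending in `Sketch` are hypothetical, and the `"dram"` device type is an assumption added only to give the factory a second branch; the real ESESC classes and their signatures differ.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical stand-in for the memory objects seen in the backtrace.
struct MemObjSketch {
  std::string section;  // conf section the parameters come from
  std::string name;     // instance name, e.g. "IL1(0)"
  MemObjSketch(std::string s, std::string n)
      : section(std::move(s)), name(std::move(n)) {}
  virtual ~MemObjSketch() = default;
  virtual std::string kind() const = 0;
};

struct CacheSketch : MemObjSketch {
  using MemObjSketch::MemObjSketch;
  std::string kind() const override { return "cache"; }
};

struct DramSketch : MemObjSketch {
  using MemObjSketch::MemObjSketch;
  std::string kind() const override { return "dram"; }
};

// Dispatch on the device type string and build the matching object.
std::unique_ptr<MemObjSketch> buildMemoryObjSketch(const std::string& deviceType,
                                                   const std::string& section,
                                                   const std::string& name) {
  assert(!section.empty() && "every device needs a conf section");
  if (deviceType == "icache") return std::make_unique<CacheSketch>(section, name);
  if (deviceType == "dram") return std::make_unique<DramSketch>(section, name);  // assumed type
  throw std::invalid_argument("unknown device type: " + deviceType);
}
```

Calling `buildMemoryObjSketch("icache", "IL1_core", "IL1(0)")` mirrors frame #1 of the backtrace. Keeping the type as a string is what lets the hierarchy be described entirely in the conf file.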

CPU Model

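After QEMU issues an instruction, a TaskHandler picks it up. The prefetcher and the address predictor are also implemented in libcore. There are LSQ and StoreSet implementations, i.e. the load/store forwarding queue for RMO. The OoO and prefetch implementations are also the most complete.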
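As an illustration of what a load/store forwarding queue does, here is a minimal sketch of store-to-load forwarding. It is plain exact-address matching, not the StoreSet predictor, and `StoreQueueSketch` with its sequence-number scheme is an assumption rather than the libcore implementation.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// One in-flight store: program-order sequence number, address, value.
struct StoreEntry {
  std::uint64_t seq;
  std::uint64_t addr;
  std::uint64_t data;
};

// Hypothetical store queue that forwards data to younger loads.
class StoreQueueSketch {
public:
  // Stores must be inserted in program order.
  void addStore(std::uint64_t seq, std::uint64_t addr, std::uint64_t data) {
    assert(stores_.empty() || stores_.back().seq < seq);
    stores_.push_back({seq, addr, data});
  }

  // Find the youngest store that is older than the load and hits the same
  // address. Returns true and writes the value to `out` on a match.
  bool forward(std::uint64_t loadSeq, std::uint64_t addr, std::uint64_t& out) const {
    for (auto it = stores_.rbegin(); it != stores_.rend(); ++it) {
      if (it->seq < loadSeq && it->addr == addr) {
        out = it->data;
        return true;
      }
    }
    return false;  // no match: the load has to go to the cache
  }

  // Drop stores that have been written back to the cache.
  void retireUpTo(std::uint64_t seq) {
    while (!stores_.empty() && stores_.front().seq <= seq) stores_.pop_front();
  }

private:
  std::deque<StoreEntry> stores_;
};
```

A real LSQ also has to handle partial overlaps and unresolved store addresses, which is where a predictor such as StoreSet comes in; the sketch ignores both.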

Memory Model

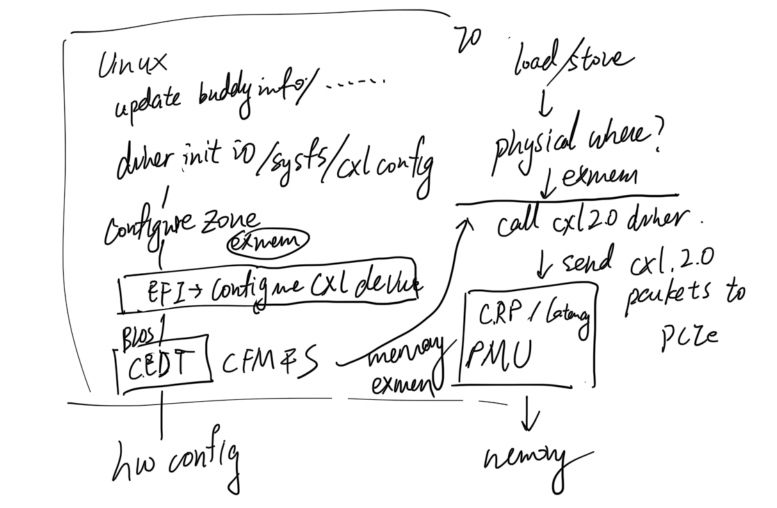

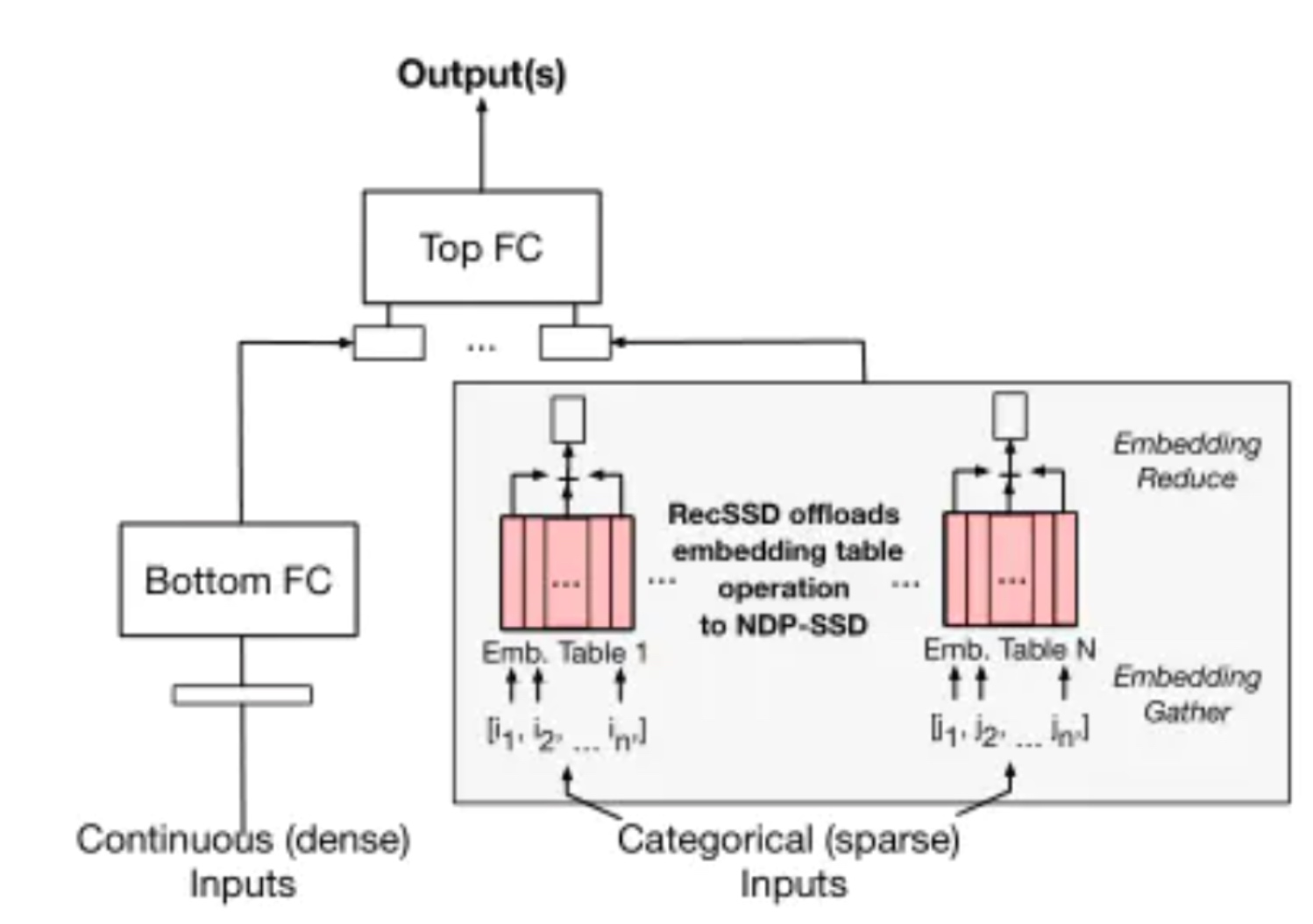

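Memory instructions such as ld/st/mv call straight into the memory subsystem. The L1 and L2 inside the core each have a MemObj that records the address currently being handled, and the state machine is abstracted as a MemRequest. Accesses are non-blocking: the MSHR is queried first, and the request's state is updated as it reaches each MemObj. On the memory side, a controller assigns the DIMM and the Xbar assigns the path the load takes.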
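The "query the MSHR first" step can be sketched as follows. This is a simplified model under stated assumptions: `MSHRSketch`, its `Result` enum, and the integer request ids are hypothetical, and ESESC's own MSHR tracks much more state per entry.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical miss status holding register file for a non-blocking cache.
class MSHRSketch {
public:
  enum class Result { NewMiss, Merged, Full };

  MSHRSketch(std::size_t maxEntries, unsigned lineBits)
      : maxEntries_(maxEntries), lineBits_(lineBits) {
    assert(maxEntries > 0);
  }

  // Called on a cache miss. A miss to a line that is already pending is
  // merged into the existing entry instead of going to the next level again.
  Result onMiss(std::uint64_t addr, int reqId) {
    std::uint64_t line = addr >> lineBits_;
    auto it = pending_.find(line);
    if (it != pending_.end()) {
      it->second.push_back(reqId);
      return Result::Merged;
    }
    if (pending_.size() == maxEntries_) return Result::Full;  // caller must retry
    pending_[line].push_back(reqId);
    return Result::NewMiss;
  }

  // Called when the line comes back. Returns the requests waiting on it,
  // in arrival order, and frees the entry.
  std::vector<int> onFill(std::uint64_t addr) {
    auto it = pending_.find(addr >> lineBits_);
    if (it == pending_.end()) return {};
    std::vector<int> waiters = std::move(it->second);
    pending_.erase(it);
    return waiters;
  }

private:
  std::size_t maxEntries_;
  unsigned lineBits_;
  std::unordered_map<std::uint64_t, std::vector<int>> pending_;
};
```

Only a `NewMiss` would send a request down to the next MemObj; `Merged` requests simply wait for the fill, and `Full` is the one case where the cache has to stall.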

GPU Model

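This follows the CUDA model: the compiler generates RISC-V host code and GPU device code, and the SMs execute the device code. The SM stream processors record the same metrics as the CPU side.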

Power Emulation

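libpeq is driven by a pwr+McPAT power model. Component categories cover blocks such as the L1I, and the McPAT inputs and Gstat counters are updated at run time. For now there are only SRAM and cache models, with no row buffer modeling and no leakage modeling, so it effectively pretends everything else runs correctly.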
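The counter-driven part of such a model boils down to multiplying activity counts by a per-access energy. Below is a minimal sketch of that arithmetic; the struct, the function name, and the units are assumptions, and it covers dynamic energy only, consistent with the missing leakage model noted above. It is not McPAT's or libpeq's interface.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical per-component record: an activity counter plus the energy
// one access costs, in nanojoules.
struct ComponentSketch {
  std::string name;
  double energyPerAccessNJ;
  std::uint64_t accesses;
};

// Average dynamic power over an interval of `cycles` at `freqHz`.
double dynamicPowerWatts(const std::vector<ComponentSketch>& comps,
                         std::uint64_t cycles, double freqHz) {
  assert(cycles > 0 && freqHz > 0.0);
  double energyJ = 0.0;
  for (const ComponentSketch& c : comps) {
    energyJ += c.energyPerAccessNJ * 1e-9 * static_cast<double>(c.accesses);
  }
  double seconds = static_cast<double>(cycles) / freqHz;
  return energyJ / seconds;
}
```

The per-access energies would come from the power model's characterization of each block; any figures passed in when trying the sketch are made up.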

Reference

- https://masc.soe.ucsc.edu/esesc/resources/4-memory.pdf

- https://masc.soe.ucsc.edu/esesc/resources/5-powermodel%20.pdf

- https://masc.soe.ucsc.edu/esesc/resources/6-gpu.pdf