1. Introduction

1.1 Setting the Stage: NVIDIA's CUDA and its Dominance in AI Compute

NVIDIA Corporation, initially renowned for its graphics processing units (GPUs) powering the gaming industry, strategically pivoted over the last two decades to become the dominant force in artificial intelligence (AI) computing. A cornerstone of this transformation was the introduction of the Compute Unified Device Architecture (CUDA) in 2006. CUDA is far more than just a programming language; it represents NVIDIA's proprietary parallel computing platform and a comprehensive software ecosystem, encompassing compilers, debuggers, profilers, extensive libraries (like cuDNN for deep learning and cuBLAS for linear algebra), and development tools. This ecosystem unlocked the potential of GPUs for general-purpose processing (GPGPU), enabling developers to harness the massive parallelism inherent in NVIDIA hardware for computationally intensive tasks far beyond graphics rendering.

This strategic focus on software and hardware synergy has propelled NVIDIA to a commanding position in the AI market. Estimates consistently place NVIDIA's share of the AI accelerator and data center GPU market between 70% and 95%, with recent figures often citing 80% to 92% dominance. This market leadership is reflected in staggering financial growth, with data center revenue surging, exemplified by figures like $18.4 billion in a single quarter of 2023. High-performance GPUs like the A100, H100, and the upcoming Blackwell series have become the workhorses for training and deploying large-scale AI models, utilized by virtually all major technology companies and research institutions, including OpenAI, Google, and Meta. Consequently, CUDA has solidified its status as the de facto standard programming environment for GPU-accelerated computing, particularly within the AI domain, underpinning widely used frameworks like PyTorch and TensorFlow.

1.2 The Emerging "Beyond CUDA" Narrative: GTC Insights and Industry Momentum

Despite NVIDIA's entrenched position, a narrative exploring computational pathways "Beyond CUDA" is gaining traction, even surfacing at NVIDIA's own GPU Technology Conference (GTC). The provided GTC video segment, which turns to alternatives from the 5-minute-27-second mark onward, indicates that diversifying the AI compute stack is a relevant and acknowledged topic within the broader ecosystem [User Query].

This internal discussion is mirrored and amplified by external industry movements. Notably, the "Beyond CUDA Summit," organized by TensorWave (a cloud provider utilizing AMD accelerators) and featuring prominent figures like computer architects Jim Keller and Raja Koduri, explicitly aimed to challenge NVIDIA's dominance. This event, strategically held near NVIDIA's GTC 2025, centered on dissecting the "CUDA moat" and exploring viable alternatives, underscoring a growing industry-wide desire for greater hardware flexibility, cost efficiency, and reduced vendor lock-in.

1.3 Report Objectives and Structure

This report aims to provide an expert-level analysis of the evolving AI compute landscape, moving beyond the CUDA-centric view. It will dissect the concept of the "CUDA moat," examine the strategies being employed to challenge NVIDIA's dominance, and detail the alternative hardware and software solutions emerging across the AI workflow – encompassing pre-training, post-training (optimization and fine-tuning), and inference.

The analysis will draw upon insights derived from the specified GTC video segment, synthesizing this information with data and perspectives gathered from recent industry reports, technical analyses, and market commentary found in the provided research materials. The report is structured into the following key sections:

- Crossing the Moat: Deconstructing CUDA's competitive advantages and analyzing industry strategies for diversification.

- Pre-training Beyond CUDA: Examining alternative hardware and software for large-scale model training.

- Post-training Beyond CUDA: Investigating non-CUDA tools and techniques for model optimization and fine-tuning.

- Inference Beyond CUDA: Detailing the diverse hardware and software solutions for deploying models outside the CUDA ecosystem.

- Industry Outlook and Conclusion: Assessing the current market dynamics, adoption trends, and the future trajectory of AI compute heterogeneity.

2. Crossing the Moat: Understanding and Challenging CUDA's Dominance

2.1 Historical Context and the Rise of CUDA

NVIDIA's journey to AI dominance was significantly shaped by the strategic introduction of CUDA in 2006. This platform marked a pivotal shift, enabling developers to utilize the parallel processing power of NVIDIA GPUs for general-purpose computing tasks, extending their application far beyond traditional graphics rendering. NVIDIA recognized the potential of parallel computing on its hardware architecture early on, developing CUDA as a proprietary platform to unlock this capability. This foresight, driven partly by academic research demonstrating GPU potential for scientific computing and initiatives like the Brook streaming language developed by future CUDA creator Ian Buck, provided NVIDIA with a crucial first-mover advantage.

CUDA was designed with developers in mind, abstracting away much of the underlying hardware complexity and allowing researchers and engineers to focus more on algorithms and applications rather than intricate hardware nuances. It provided APIs, libraries, and tools within familiar programming paradigms (initially C/C++, later Fortran and Python). Over more than a decade, CUDA matured with relatively limited competition from viable, comprehensive alternatives. This extended period allowed the platform and its ecosystem to become deeply embedded in academic research, high-performance computing (HPC), and, most significantly, the burgeoning field of AI.

2.2 Deconstructing the "CUDA Moat": Ecosystem, Lock-in, and Performance

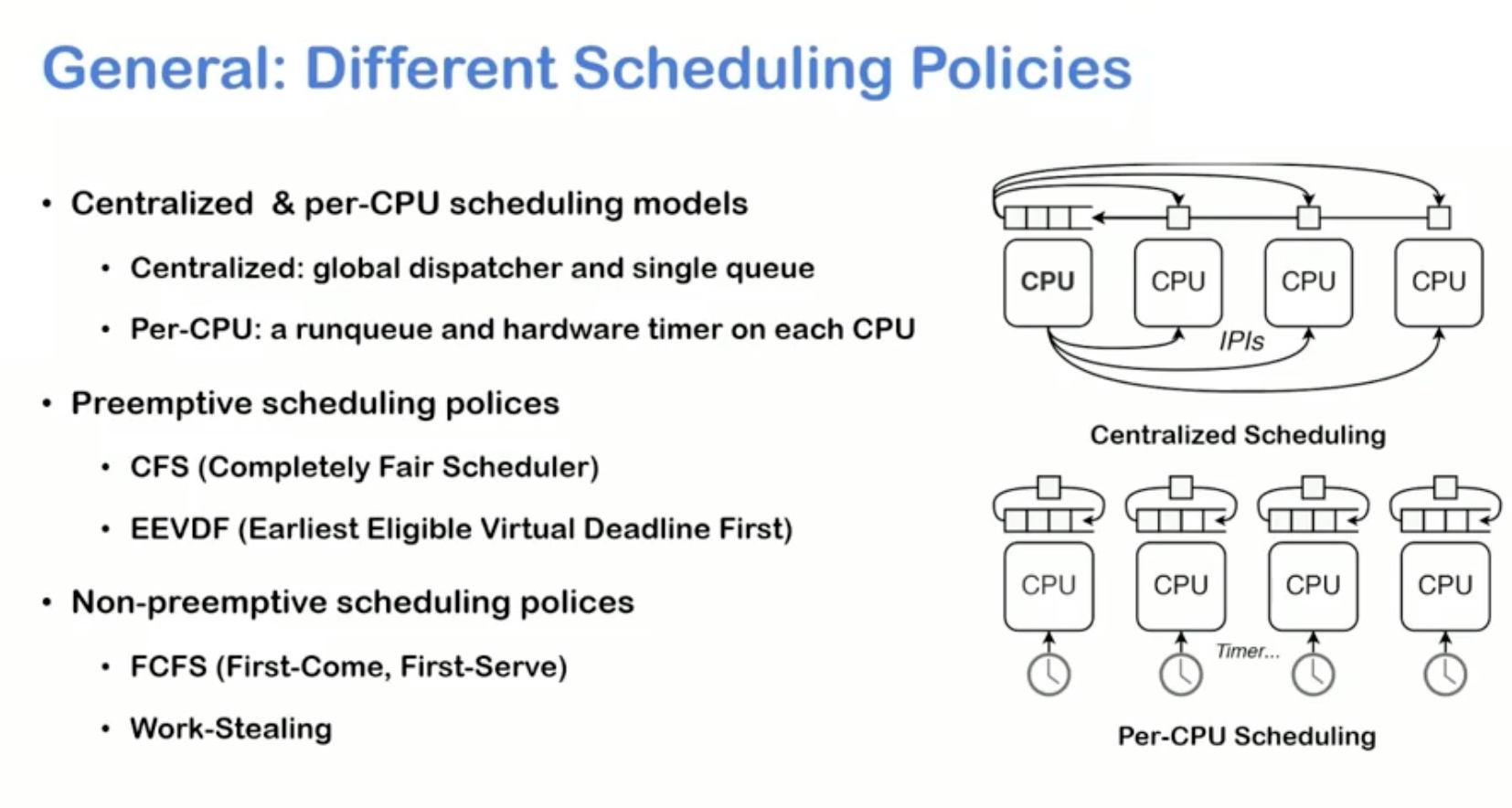

The term "CUDA moat" refers to the collection of sustainable competitive advantages that protect NVIDIA's dominant position in the AI compute market, primarily derived from its tightly integrated hardware and software ecosystem. This moat is multifaceted:

- Ecosystem Breadth and Network Effects: The CUDA ecosystem is vast, encompassing millions of developers worldwide, thousands of companies, and a rich collection of optimized libraries (e.g., cuDNN, cuBLAS, TensorRT), sophisticated development and profiling tools, extensive documentation, and strong community support. CUDA is also heavily integrated into academic curricula, ensuring a steady stream of new talent proficient in NVIDIA's tools. This widespread adoption creates powerful network effects: as more developers and applications use CUDA, more tools and resources are created for it, further increasing its value and reinforcing its position as the standard.

- High Switching Costs and Developer Inertia: Companies and research groups have invested heavily in developing, testing, and optimizing codebases built on CUDA. Migrating these complex workflows to alternative platforms like AMD's ROCm or Intel's oneAPI is a daunting task: it often requires significant code rewriting and retraining developers on new tools and languages, and it introduces substantial risks related to achieving comparable performance, stability, and correctness. This "inherent inertia" within established software ecosystems creates high switching costs, making organizations deeply reluctant to abandon their CUDA investments, even if alternatives offer potential benefits.

- Performance Optimization and Hardware Integration: CUDA provides developers with low-level access to NVIDIA GPU hardware, enabling fine-grained optimization to extract maximum performance. This is critical in compute-intensive AI workloads. The tight integration between CUDA software and NVIDIA hardware features, such as Tensor Cores (specialized units for matrix multiplication), allows for significant acceleration. Competitors often struggle to match this level of performance tuning because of the deep co-design of NVIDIA's hardware and software. While programming Tensor Cores directly can involve "arcane knowledge" and dealing with undocumented behaviors, libraries like cuBLAS and CUTLASS abstract away some of this complexity.

- Backward Compatibility: NVIDIA has generally maintained backward compatibility for CUDA, allowing older code to run on newer GPU generations (though limitations exist, as newer CUDA versions require specific drivers and drop support for legacy hardware over time). This perceived stability encourages long-term investment in the CUDA platform.

- Vendor Lock-in: The cumulative effect of this deep ecosystem, high switching costs, performance advantages on NVIDIA hardware, and established workflows is significant vendor lock-in. Developers and organizations become dependent on NVIDIA's proprietary platform, limiting hardware choices, potentially stifling competition, and giving NVIDIA considerable market power.

2.3 Industry Strategies for Diversification

Recognizing the challenges posed by the CUDA moat, various industry players are pursuing strategies to foster a more diverse and open AI compute ecosystem. These efforts span competitor platform development, the promotion of open standards and abstraction layers, and initiatives by large-scale users.

- Competitor Platform Development:

  - AMD ROCm (Radeon Open Compute): AMD's primary answer to CUDA is ROCm, an open-source software stack for GPU computing. Key to its strategy is the Heterogeneous-computing Interface for Portability (HIP), which is designed to be syntactically similar to CUDA to ease code migration. AMD provides the HIPIFY tool to automate the conversion of CUDA source code to HIP C++, although manual adjustments are often necessary. Despite progress, ROCm has faced significant challenges: historically, it supported a limited range of AMD GPUs, suffered from stability issues and performance gaps compared to CUDA, and lagged in adopting new features and supporting the latest hardware. However, AMD continues to invest heavily in ROCm, improving framework support (e.g., native PyTorch integration), expanding hardware compatibility (including consumer GPUs, albeit sometimes unofficially or with delays), and achieving notable adoption of its Instinct MI300 series accelerators by major hyperscalers.

  - Intel oneAPI: Intel promotes oneAPI as an open, unified, cross-architecture programming model based on industry standards, particularly SYCL, which Intel implements and extends as Data Parallel C++ (DPC++). It aims to provide portability across diverse hardware types, including CPUs, GPUs (Intel integrated and discrete), FPGAs, and other accelerators, explicitly positioning itself as an alternative to CUDA lock-in. oneAPI is backed by the Unified Acceleration (UXL) Foundation, a multi-company industry consortium. While it offers a promising vision for heterogeneity, oneAPI is a much younger initiative than CUDA and faces the challenge of building a comparable ecosystem and achieving widespread adoption.

  - Other Initiatives: OpenCL, an earlier open standard for heterogeneous computing, remains relevant, particularly in mobile and embedded systems, but has struggled to gain traction in high-performance AI due to fragmentation, slow evolution, and performance limitations compared to CUDA. Vulkan Compute, which builds on the Vulkan graphics API, offers low-level control and potential performance benefits but has a steeper learning curve and a less mature ecosystem for general-purpose compute. Newer entrants like Modular Inc.'s Mojo programming language and MAX platform aim to combine Python's usability with C/CUDA-level performance, targeting AI hardware programmability directly.

- Open Standards and Abstraction Layers:

  - A significant trend involves leveraging higher-level AI frameworks like PyTorch, TensorFlow, and JAX, which can abstract away underlying hardware specifics. Ideally, a model written in PyTorch can run efficiently on NVIDIA, AMD, or Intel hardware simply by targeting the appropriate backend (CUDA, ROCm, or oneAPI/SYCL).

  - PyTorch 2.0, which introduced TorchDynamo for graph capture and TorchInductor as a compiler backend, represents a move towards greater flexibility. TorchInductor can generate code for different backends, using OpenAI Triton for GPUs or OpenMP/C++ for CPUs, potentially reducing direct dependence on CUDA libraries for certain operations.

  - OpenAI Triton itself is positioned as a Python-like language and compiler for writing high-performance custom GPU kernels, aiming to achieve performance comparable to CUDA C++ with significantly improved developer productivity. Its most mature target remains NVIDIA GPUs, although it also includes an upstream backend for AMD GPUs, and its open-source nature holds potential for broader hardware support.

  - OpenXLA, built around the XLA (Accelerated Linear Algebra) compiler that Google originally developed for TensorFlow and JAX, is another initiative focused on creating a compiler ecosystem that can target diverse hardware backends.

  - However, these abstraction layers are not a panacea. The abstraction is often imperfect ("leaky"); many essential libraries within the framework ecosystems are still optimized primarily for CUDA or lack robust support for alternatives; performance parity is not guaranteed; and NVIDIA exerts considerable influence on the development roadmap of frameworks like PyTorch, potentially steering them in ways that favor CUDA. Achieving true first-class support for alternative backends within these dominant frameworks remains a critical challenge.

- Hyperscaler Initiatives: The largest consumers of AI hardware – cloud hyperscalers like Google (TPUs), AWS (Trainium, Inferentia), Meta, and Microsoft – have the resources and motivation to develop their own custom AI silicon and potentially accompanying software stacks. This strategy allows them to optimize hardware for their specific workloads, control their supply chain, reduce costs, and crucially, avoid long-term dependence on NVIDIA. Their decisions to adopt competitor hardware (like AMD MI300X) or build in-house solutions represent perhaps the most significant direct threat to the CUDA moat's long-term durability.

- Direct Low-Level Programming (PTX): For organizations seeking maximum performance and control, bypassing parts of the CUDA stack and programming directly in NVIDIA's assembly-like Parallel Thread Execution (PTX) language is an option, as demonstrated by DeepSeek AI. PTX acts as an intermediate representation between high-level CUDA code and the GPU's native machine code. While this allows fine-grained optimization that can potentially exceed standard CUDA libraries, PTX is only partially documented, changes between GPU generations, and is even more tightly locked to NVIDIA hardware, making it a highly complex and specialized approach unsuitable for most developers.
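To illustrate why HIP's similarity to CUDA makes migration largely mechanical, and why HIPIFY output still needs review, the Python sketch below performs a toy version of the source-to-source renaming that HIPIFY automates. It is not HIPIFY: the mapping table is a small, hand-picked assumption (the real hipify-perl and hipify-clang tools cover far more runtime APIs, headers, and libraries), and `toy_hipify` is a hypothetical helper written only for this example. Identifiers it does not know are reported rather than guessed, mirroring the manual adjustments mentioned for ROCm above.

```python
import re

# Hypothetical, hand-picked subset of CUDA -> HIP renames, for illustration only.
# The real HIPIFY tools cover far more runtime, library, and header mappings.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Whole-word matching (longest names first) so "cudaMemcpyHostToDevice"
# is never partially rewritten by the shorter "cudaMemcpy" entry.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def toy_hipify(source: str) -> tuple[str, list[str]]:
    """Rename known CUDA identifiers to HIP and list any cuda* names left over."""
    translated = _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)
    # Anything still starting with "cuda" was not in the table: flag it for manual review.
    leftovers = sorted(set(re.findall(r"\bcuda\w*", translated)))
    return translated, leftovers


if __name__ == "__main__":
    cuda_src = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_x, n * sizeof(float));\n"
        "cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice);\n"
        "cudaStreamCreate(&stream);\n"
    )
    hip_src, needs_review = toy_hipify(cuda_src)
    print(hip_src)
    print("Needs manual review:", needs_review)
```

In the usage lines, `cudaStreamCreate` is deliberately absent from the table (HIP does provide `hipStreamCreate`) to show how an unmapped call surfaces for a human to handle instead of being silently mistranslated.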

2.4 Implications of the Competitive Landscape

The analysis of CUDA's dominance and the strategies to counter it reveals several key points about the competitive dynamics. Firstly, the resilience of NVIDIA's market position stems less from insurmountable technical superiority in every aspect and more from the profound inertia within the software ecosystem. The vast investment in CUDA codebases, developer skills, and tooling creates significant friction against adopting alternatives. This suggests that successful competitors need not only technically competent hardware but also a superior developer experience, seamless migration paths, robust framework integration, and compelling value propositions (e.g., cost, specific features) to overcome this inertia.

Secondly, abstraction layers like PyTorch and compilers like Triton present a complex scenario. While they hold the promise of hardware agnosticism, potentially weakening the direct CUDA lock-in, NVIDIA's deep integration and influence within these ecosystems mean they can also inadvertently reinforce the moat. The best-supported, highest-performing path often remains via CUDA. The ultimate impact of these layers depends critically on whether alternative hardware vendors can achieve true first-class citizenship and performance parity within them.

Thirdly, the "Beyond CUDA" movement suffers from fragmentation. The existence of multiple competing alternatives (ROCm, oneAPI, OpenCL, Vulkan Compute, Mojo, etc.) risks diluting development efforts and hindering the ability of any single alternative to achieve the critical mass needed to effectively challenge the unified CUDA front. This mirrors the historical challenges faced by OpenCL due to vendor fragmentation and lack of unified direction. Overcoming this may require market consolidation or the emergence of clear winners for specific niches.

Finally, the hyperscale cloud providers represent a powerful disruptive force. Their immense scale, financial resources, and strategic imperative to avoid vendor lock-in position them uniquely to alter the market dynamics. Their adoption of alternative hardware or the development of proprietary silicon and software stacks could create viable alternative ecosystems much faster than traditional hardware competitors acting alone.

Table 2.1: CUDA Moat Components and Counter-Strategies

| Moat Component | NVIDIA's Advantage | Competitor Strategies | Key Challenges for Competitors |
|---|---|---|---|
| Ecosystem Size | Millions of developers, vast community, academic integration | Build communities around ROCm/oneAPI/Mojo; Leverage open-source framework communities (PyTorch, TF) | Reaching critical mass; Overcoming established network effects; Competing with NVIDIA's resources |
| Library Maturity | Highly optimized, extensive libraries (cuDNN, cuBLAS, TensorRT) | Develop competing libraries (ROCm libraries, oneAPI libraries); Contribute to framework-level ops | Achieving feature/performance parity; Ensuring stability and robustness; Breadth of domain coverage |
| Developer Familiarity | Nearly two decades of use, established workflows, available talent pool | Simplify APIs (e.g., HIP similarity to CUDA); Provide migration tools (HIPIFY, SYCLomatic); Focus on usability | Overcoming learning curves; Convincing developers of stability/benefits; Retraining workforce |
| Performance Optimization | Tight hardware-software co-design; Low-level access; Tensor Core integration | Optimize ROCm/oneAPI compilers; Improve framework backend performance; Develop specialized hardware | Matching NVIDIA's optimization level; Accessing/optimizing specialized hardware features (like Tensor Cores) |
| Switching Costs | High cost/risk of rewriting code, retraining, validating | Provide automated porting tools; Ensure framework compatibility; Offer significant cost/performance benefits | Imperfect porting tools; Ensuring functional equivalence and performance; Justifying the migration effort |
| Framework Integration | Deep integration & influence in PyTorch/TF; Optimized paths | Achieve native, high-performance support in frameworks; Leverage open-source contributions | Competing with NVIDIA's influence; Ensuring timely support for new framework features; Library dependencies |
| Hyperscaler Dependence | Major cloud providers are largest customers, rely on CUDA | Hyperscalers adopt AMD/Intel; Develop custom silicon/software; Promote open standards | Hyperscalers' internal efforts may not benefit broader market; Competing for hyperscaler design wins |

3. Pre-training Beyond CUDA

3.1 Challenges in Pre-training

The pre-training phase for state-of-the-art AI models, particularly large language models (LLMs) and foundation models, involves computations at an immense scale. This process demands not only massive parallel processing capabilities but also exceptional stability and reliability over extended periods, often weeks or months. Historically, the maturity, performance, and robustness of NVIDIA's hardware coupled with the CUDA ecosystem made it the overwhelmingly preferred choice for these demanding tasks, establishing a high bar for any potential alternatives.

3.2 Alternative Hardware Accelerators

Despite NVIDIA's dominance, several alternative hardware platforms are being positioned and increasingly adopted for large-scale AI pre-training:

- AMD Instinct Series (MI200, MI300X/MI325X): AMD's Instinct line, particularly the MI300 series, directly targets NVIDIA's high-end data center GPUs like the A100 and H100. These accelerators offer competitive specifications, particularly in memory capacity and bandwidth, which are critical for large models. They have gained traction with major hyperscalers and cloud providers, including Microsoft Azure, Oracle Cloud, and Meta, which see them as a viable way to reduce reliance on NVIDIA and potentially lower costs. Cloud platforms like TensorWave are also building services on AMD Instinct hardware. AMD emphasizes a strategy centered on open standards and cost-effectiveness relative to NVIDIA's offerings.

- Intel Gaudi Accelerators (Gaudi 2, Gaudi 3): Intel's Gaudi family consists of dedicated ASICs designed specifically for AI training and inference workloads. Intel markets Gaudi accelerators, such as the recent Gaudi 3, as a significantly more cost-effective alternative to NVIDIA's flagship GPUs, aiming to capture the segment of the market that prioritizes value. Gaudi accelerators feature integrated high-speed Ethernet networking, which facilitates the construction of large training clusters. Notably, deploying models on Gaudi typically relies on Intel's dedicated SynapseAI software stack, which differs in some respects from the broader oneAPI initiative.

- Google TPUs (Tensor Processing Units): Developed in-house by Google, TPUs are custom ASICs highly optimized for TensorFlow and JAX workloads. They have been instrumental in training many of Google's largest models and are available through Google Cloud Platform. TPUs demonstrate the potential of domain-specific architectures tailored explicitly for machine learning computations.

- Other Emerging Architectures: The landscape is further diversifying with other players. Amazon Web Services (AWS) offers its Trainium chips for training. Reports suggest OpenAI and Microsoft may be developing their own custom AI accelerators. Startups like Cerebras Systems (with wafer-scale engines) and Groq (focused on low-latency inference, but indicative of architectural innovation) are exploring novel designs. Huawei also competes with its Ascend AI chips, based on its Da Vinci architecture, particularly in the Chinese market.

This proliferation of hardware underscores the intense interest and investment in finding alternatives or complements to NVIDIA's GPUs.

3.3 Software Stacks for Large-Scale Training

Hardware alone is insufficient; robust software stacks are essential to harness these accelerators for pre-training:

- ROCm Ecosystem: Training on AMD Instinct GPUs relies primarily on the ROCm software stack, particularly its integration with major AI frameworks like PyTorch and TensorFlow. While functional and improving, the ROCm ecosystem's maturity, ease of use, breadth of library support, and performance consistency have historically been points of concern compared with the highly refined CUDA ecosystem. Success hinges on continued improvements in ROCm's stability and performance within these critical frameworks.

- oneAPI and Supporting Libraries: Intel's oneAPI aims to provide the software foundation for training on its diverse hardware portfolio (CPUs, GPUs, Gaudi accelerators). It uses DPC++ (based on SYCL) as the core language and includes libraries optimized for deep learning tasks, integrating with frameworks like PyTorch and TensorFlow. The goal is a unified programming experience across different Intel architectures, simplifying development for heterogeneous environments.

- Leveraging PyTorch/JAX/TensorFlow with Alternative Backends: Regardless of the underlying hardware (AMD, Intel, Google TPU), the primary interface for most researchers and developers conducting large-scale pre-training remains high-level frameworks like PyTorch, JAX, or TensorFlow. The viability of non-NVIDIA hardware for pre-training therefore depends heavily on the quality, performance, and completeness of the respective framework backends (e.g., PyTorch on ROCm, JAX on TPU, TensorFlow on oneAPI).

- The Role of Compilers (Triton, XLA): Compilers play a crucial role in bridging the gap between high-level framework code and low-level hardware execution. OpenAI Triton, which PyTorch 2.0's TorchInductor uses to generate GPU kernels, compiles Python-based kernel code into efficient GPU code (PTX on NVIDIA GPUs, and AMD GPU code through its ROCm backend). Similarly, XLA optimizes and compiles TensorFlow and JAX graphs for various targets, including TPUs and GPUs. The efficiency and target-awareness of these compilers are critical for achieving high performance on diverse hardware backends.

- Emerging Languages/Platforms (Mojo): New programming paradigms like Mojo are being developed with the explicit goal of providing a high-performance, Python-syntax-compatible language for programming heterogeneous AI hardware, including GPUs and accelerators from various vendors. If successful, Mojo could offer a fundamentally different approach to AI software development, potentially bypassing some limitations of existing C++-based alternatives or framework-specific backends.

- Direct PTX Programming (DeepSeek Example): DeepSeek AI's use of PTX directly on NVIDIA H800 GPUs to achieve highly efficient training of its 671B-parameter mixture-of-experts (MoE) model demonstrates an extreme optimization strategy. By bypassing standard CUDA libraries and writing closer to the hardware's instruction set, DeepSeek reportedly achieved significant efficiency gains. This highlights that even within the NVIDIA ecosystem, CUDA itself may not represent the absolute performance ceiling for sophisticated users willing to tackle extreme complexity, though such work remains far beyond the reach of typical development workflows.
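As a concrete illustration of the framework-backend point above, the Python sketch below shows how the same PyTorch model code can be directed at different vendors' hardware. It assumes a recent PyTorch release; `pick_torch_device` and `gpu_stack` are hypothetical helpers written for this example, while `torch.cuda.is_available()`, `torch.xpu` (present in recent releases), and `torch.version.hip` are existing PyTorch attributes. A detail worth noting is that ROCm builds of PyTorch reuse the `cuda` device name for AMD GPUs, which is part of why existing PyTorch code can often run on AMD hardware without source changes.

```python
def pick_torch_device(torch_mod) -> str:
    """Choose a PyTorch device string for whichever accelerator backend is present.

    ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API, so the
    device string is still "cuda". Intel GPUs appear as "xpu" in recent PyTorch
    releases; getattr guards against older releases that lack torch.xpu.
    """
    if torch_mod.cuda.is_available():
        return "cuda"  # NVIDIA GPU (CUDA build) or AMD GPU (ROCm/HIP build)
    xpu = getattr(torch_mod, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"  # Intel GPU
    return "cpu"


def gpu_stack(torch_mod) -> str:
    """Name the GPU software stack this PyTorch build was compiled against."""
    if getattr(torch_mod.version, "hip", None):
        return "ROCm/HIP"
    if getattr(torch_mod.version, "cuda", None):
        return "CUDA"
    return "none"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:  # PyTorch not installed: nothing to demonstrate
        torch = None

    if torch is not None:
        device = pick_torch_device(torch)
        print(f"device={device}, stack={gpu_stack(torch)}")
        # The model code itself is identical on every backend.
        model = torch.nn.Linear(16, 4).to(device)
        x = torch.randn(8, 16, device=device)
        print(model(x).shape)  # torch.Size([8, 4])
```

The helpers take the `torch` module as an argument rather than importing it, so the selection logic can be exercised with stand-in objects on machines without any accelerator.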

3.4 Implications for Pre-training Beyond CUDA

The pre-training landscape, while still dominated by NVIDIA, is showing signs of diversification, driven by cost pressures and strategic initiatives from competitors and hyperscalers. However, several factors shape the trajectory. Firstly, the sheer computational scale of pre-training necessitates high-end, specialized hardware. This means the battleground for pre-training beyond CUDA is primarily contested among major silicon vendors (NVIDIA, AMD, Intel, Google) and potentially large hyperscalers with custom chip programs, rather than being open to a wide array of lower-end hardware.

Secondly, software maturity remains the most significant bottleneck for alternative hardware platforms in the pre-training domain. While hardware like AMD Instinct and Intel Gaudi offer compelling specifications and cost advantages, their corresponding software stacks (ROCm, oneAPI/SynapseAI) are consistently perceived as less mature, stable, or easy to deploy at scale compared to the battle-hardened CUDA ecosystem. For expensive, long-duration pre-training runs where failures can be catastrophic, the proven reliability of CUDA often outweighs the potential benefits of alternatives, hindering faster adoption.

Thirdly, the reliance on high-level frameworks like PyTorch and JAX makes robust backend integration paramount. Developers interact primarily through these frameworks, meaning the success of non-NVIDIA hardware hinges less on the intricacies of ROCm or SYCL syntax itself, and more on the seamlessness, performance, and feature completeness of the framework's support for that hardware. This elevates the strategic importance of compiler technologies like Triton and XLA, which are responsible for translating framework operations into efficient machine code for diverse targets. Vendors must ensure their hardware is a first-class citizen within these framework ecosystems to compete effectively in pre-training.

4. Post-training Beyond CUDA: Optimization and Fine-tuning

4.1 Importance of Post-training

Once a large AI model has been pre-trained, further steps are typically required before it can be effectively deployed in real-world applications. These post-training processes include optimization – techniques to reduce the model's size, decrease inference latency, and improve computational efficiency – and fine-tuning – adapting the general-purpose pre-trained model to perform well on specific downstream tasks or datasets. These stages often have different computational profiles and requirements compared to the massive scale of pre-training, potentially opening the door to a broader range of hardware and software solutions.

4.2 Techniques and Tools Outside the CUDA Ecosystem

Several techniques and toolkits facilitate post-training optimization and fine-tuning on non-NVIDIA hardware:

-

Model Quantization: Quantization is a widely used optimization technique that reduces the numerical precision of model weights and activations (e.g., from 32-bit floating-point (FP32) to 8-bit integer (INT8) or even lower). This significantly shrinks the model's memory footprint and often accelerates inference speed, particularly on hardware with specialized support for lower-precision arithmetic.

-

OpenVINO NNCF:

Intel's OpenVINO toolkit includes the Neural Network Compression Framework (NNCF), a Python package offering various optimization algorithms.

NNCF supports post-training quantization (PTQ), which optimizes a model after training without requiring retraining, making it relatively easy to apply but potentially causing some accuracy degradation.

It also supports quantization-aware training (QAT), which incorporates quantization into the training or fine-tuning process itself, typically yielding better accuracy than PTQ at the cost of requiring additional training data and computation.

NNCF can process models from various formats (OpenVINO IR, PyTorch, ONNX, TensorFlow) and targets deployment on Intel hardware (CPUs, integrated GPUs, discrete GPUs, VPUs) via the OpenVINO runtime.

-

Other Approaches:

While less explicitly detailed for ROCm or oneAPI in the provided materials, quantization capabilities are often integrated within AI frameworks themselves or through supporting libraries. The BitsandBytes library, known for enabling quantization techniques like QLoRA, recently added experimental multi-backend support, potentially enabling its use on AMD and Intel GPUs beyond CUDA.

Frameworks running on ROCm or oneAPI backends might leverage underlying hardware support for lower precisions.

-

Pruning and Compression: Model pruning involves removing redundant weights or connections within the neural network to reduce its size and computational cost. NNCF also provides methods for structured and unstructured pruning, which can be applied during training or fine-tuning.

-

Fine-tuning Frameworks on ROCm/oneAPI: Fine-tuning typically utilizes the same high-level AI frameworks employed during pre-training, such as PyTorch, TensorFlow, or JAX, along with libraries like Hugging Face Transformers and PEFT (Parameter-Efficient Fine-Tuning).

-

ROCm Example:

The process of fine-tuning LLMs using techniques like LoRA (Low-Rank Adaptation) on AMD GPUs via ROCm is documented.

Examples demonstrate using PyTorch, the Hugging Face

transformers

library, and

peft

with the

SFTTrainer

on ROCm-supported hardware, indicating that standard parameter-efficient fine-tuning workflows can be executed within the ROCm ecosystem.

-

Intel Platforms:

Fine-tuning can also be performed on Intel hardware, such as Gaudi accelerators

or potentially GPUs supported by oneAPI, leveraging the respective framework integrations.

The choice of hardware depends on the scale of the fine-tuning task.

-

Role of Hugging Face Optimum: Libraries like Hugging Face Optimum, particularly Optimum Intel, play a crucial role in simplifying the post-training workflow. Optimum Intel integrates OpenVINO and NNCF capabilities directly into the Hugging Face ecosystem, allowing users to easily optimize and quantize models from the Hugging Face Hub for deployment on Intel hardware. This integration streamlines the process for developers already working within the popular Hugging Face environment.
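The core arithmetic behind the PTQ and QAT techniques described above can be illustrated with a short, self-contained sketch. It assumes symmetric, per-tensor INT8 quantization with the scale taken from the largest absolute value seen during calibration. NNCF's actual algorithms (per-channel scales, range estimators, bias correction, and so on) are more elaborate, and none of the function names below belong to NNCF or Optimum Intel.

```python
"""Minimal sketch of symmetric per-tensor INT8 quantization (illustrative only).

Not NNCF's implementation: just the basic steps PTQ builds on (calibrate a
range, derive a scale, round, clamp) plus the fake-quantization op used in QAT.
"""

QMIN, QMAX = -127, 127  # symmetric signed INT8 range (-128 left unused)


def calibrate_scale(calibration_values):
    """Derive a scale from the largest absolute value seen during calibration."""
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / QMAX if max_abs > 0 else 1.0


def quantize(values, scale):
    """Map real values to INT8 codes: round(v / scale), clamped to [QMIN, QMAX]."""
    return [max(QMIN, min(QMAX, round(v / scale))) for v in values]


def dequantize(codes, scale):
    """Recover approximate real values from INT8 codes."""
    return [q * scale for q in codes]


def fake_quantize(values, scale):
    """Quantize then dequantize, as in the forward pass of QAT.

    During QAT the backward pass typically treats this op as the identity
    (the straight-through estimator), so gradients still reach the
    full-precision weights.
    """
    return dequantize(quantize(values, scale), scale)


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.4, -0.33]
    scale = calibrate_scale(weights)  # approximately 1.27 / 127 = 0.01
    codes = quantize(weights, scale)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print(f"scale={scale:.4f}, max reconstruction error={max_err:.6f}")
```

The fake_quantize helper is what separates QAT from PTQ: in QAT the model keeps training while it sees quantization error in the forward pass, whereas PTQ only calibrates the scale on a trained model, so rounding error can never exceed half a quantization step but is not compensated for.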

4.3 Hardware Considerations for Efficient Post-training

Unlike pre-training, which often necessitates clusters of the most powerful and expensive accelerators, fine-tuning and optimization tasks can sometimes be accomplished effectively on a wider range of hardware. Depending on the size of the model being fine-tuned and the specific task, single high-end GPUs (including professional or even consumer-grade NVIDIA or AMD cards), Intel Gaudi accelerators, or potentially even powerful multi-core CPUs might suffice. This broader hardware compatibility increases the potential applicability of non-NVIDIA solutions in the post-training phase.
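Part of why fine-tuning can fit on smaller hardware is that parameter-efficient methods such as LoRA, used in the ROCm example above, train only a small fraction of the weights. The sketch below shows the parameter arithmetic for one illustrative 4096 x 4096 projection at rank 8, plus the adapted forward pass. It assumes the standard LoRA formulation (update scaled by alpha / rank, B initialized to zero); the helper names are hypothetical and are not PEFT's API, and real memory use also depends on activations, optimizer state, and the frozen base weights.

```python
"""Sketch of LoRA's parameter savings and forward pass (pure Python, illustrative).

Assumes the standard LoRA formulation: a frozen weight W (d_out x d_in) plus a
trainable low-rank update (alpha / rank) * B @ A, with A (rank x d_in) and
B (d_out x rank). Function names are hypothetical, not PEFT's API.
"""


def lora_trainable_params(d_out, d_in, rank):
    """Number of trainable values in the factors A and B."""
    return rank * (d_in + d_out)


def full_trainable_params(d_out, d_in):
    """Number of trainable values when the whole weight matrix is updated."""
    return d_out * d_in


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); only A and B would receive gradients."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scaling = alpha / rank
    return [b + scaling * u for b, u in zip(base, update)]


if __name__ == "__main__":
    d, r = 4096, 8  # illustrative size for one square projection matrix
    lora = lora_trainable_params(d, d, r)
    full = full_trainable_params(d, d)
    print(f"LoRA: {lora:,} params vs full: {full:,} ({100 * lora / full:.2f}%)")

    # B starts at zero, so the adapted layer initially matches the frozen one.
    W = [[1.0, 2.0], [3.0, 4.0]]
    A = [[0.5, -0.5]]   # rank 1, d_in = 2
    B = [[0.0], [0.0]]  # d_out = 2, rank 1
    print(lora_forward(W, A, B, [1.0, 1.0], alpha=16, rank=1))
```

For this layer the adapter holds 65,536 trainable values against 16,777,216 for the full matrix, about 0.39%, which is why gradients and optimizer state for LoRA fine-tuning are far smaller than for full fine-tuning.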

4.4 Implications for Post-training Beyond CUDA

The post-training stage presents distinct opportunities and challenges for CUDA alternatives. A key observation is the apparent strength of Intel's OpenVINO ecosystem in the optimization domain. The detailed documentation and tooling around NNCF for quantization and pruning provide a relatively mature pathway for optimizing models specifically for Intel's diverse hardware portfolio (CPU, iGPU, dGPU, VPU). This specialized toolkit gives Intel a potential advantage over AMD in this specific phase, as ROCm's dedicated optimization tooling appears less emphasized in the provided research beyond its core framework support.

Furthermore, the success of fine-tuning on alternative platforms like ROCm hinges critically on the robustness and feature completeness of the framework backends. As demonstrated by the LoRA example on ROCm, fine-tuning workflows rely directly on the stability and capabilities of the PyTorch (or other framework) implementation for that specific hardware. Any deficiencies in the ROCm or oneAPI backends will directly impede efficient fine-tuning, reinforcing the idea that mature software support is as crucial as raw hardware power.

Finally, there is a clear trend towards integrating optimization techniques directly into higher-level frameworks and libraries, exemplified by Hugging Face Optimum Intel. This suggests that developers may increasingly prefer using these integrated tools within their familiar framework environments rather than engaging with standalone, vendor-specific optimization toolkits. This trend further underscores the strategic importance for hardware vendors to ensure seamless and performant integration of their platforms and optimization capabilities within the dominant AI frameworks.

Table 4.1: Non-CUDA Model Optimization & Fine-tuning Tools

| Tool/Platform | Key Techniques | Target Hardware | Supported Frameworks/Formats | Ease of Use/Maturity (Qualitative) |
|---|---|---|---|---|
| OpenVINO NNCF | PTQ, QAT, Pruning (Structured/Unstructured) | Intel CPU, iGPU, dGPU, VPU | OpenVINO IR, PyTorch, TF, ONNX | Relatively mature and well-documented for Intel ecosystem; Integrated with HF Optimum Intel |
| ROCm + PyTorch/PEFT | Fine-tuning (e.g., LoRA, Full FT) | AMD GPUs (Instinct, Radeon) | PyTorch, HF Transformers | Relies on ROCm backend maturity for PyTorch; Examples exist, but ecosystem maturity concerns remain |
| oneAPI Libraries | Likely includes optimization/quantization libraries (details limited in snippets) | Intel CPU, GPU, Gaudi | PyTorch, TF (via framework integration) | Aims for unified model, but specific optimization tool maturity less clear from snippets compared to NNCF |
| BitsandBytes (Multi-backend) | Quantization (e.g., for QLoRA) | NVIDIA, AMD, Intel (Experimental) | PyTorch | Experimental support for non-NVIDIA; Requires specific installation/compilation |
| Intel Gaudi + SynapseAI | Fine-tuning | Intel Gaudi Accelerators | PyTorch, TF (via SynapseAI) | Specific stack for Gaudi; Maturity relative to CUDA less established |

5. Inference Beyond CUDA

5.1 The Inference Landscape: Diversity and Optimization

The inference stage, where trained and optimized models are deployed to make predictions on new data, presents a significantly different set of requirements compared to training. While training often prioritizes raw throughput and the ability to handle massive datasets and models, inference deployment frequently emphasizes low latency, high throughput for concurrent requests, cost-effectiveness, and power efficiency. This diverse set of optimization goals leads to a wider variety of hardware platforms and software solutions being employed for inference, creating more opportunities for non-NVIDIA technologies.

5.2 Diverse Hardware for Deployment

The hardware landscape for AI inference is notably heterogeneous:

-

CPUs & Integrated GPUs (Intel):

Standard CPUs and the integrated GPUs found in many systems (particularly from Intel) are common inference targets, especially when cost and accessibility are key factors. Toolkits like Intel's OpenVINO are specifically designed to optimize model execution on this widely available hardware.

-

Dedicated Inference Chips (ASICs):

Application-Specific Integrated Circuits (ASICs) designed explicitly for inference offer high performance and power efficiency for specific types of neural network operations. Examples include AWS Inferentia and Google TPUs (which serve training as well as inference workloads).

-

FPGAs (Field-Programmable Gate Arrays):

FPGAs offer hardware reprogrammability, providing flexibility and potentially very low latency for certain inference tasks. They can be adapted to specific model architectures and evolving requirements.

-

Edge Devices & NPUs:

The proliferation of AI at the edge (in devices like smartphones, cameras, vehicles, and IoT sensors) drives demand for efficient inference on resource-constrained hardware.

This often involves specialized Neural Processing Units (NPUs) or optimized software running on low-power CPUs or GPUs. Intel's Movidius Vision Processing Units (VPUs), accessible via OpenVINO, are an example of such edge-focused hardware.

-

AMD/Intel Data Center & Consumer GPUs:

Data center GPUs from AMD (Instinct series) and Intel (Data Center GPU Max Series), as well as consumer-grade GPUs (AMD Radeon, Intel Arc), are also viable platforms for inference workloads.

Software support comes via ROCm, oneAPI, or cross-platform runtimes like OpenVINO and ONNX Runtime.

5.3 Software Frameworks and Inference Servers

Deploying models efficiently requires specialized software frameworks and servers:

-

OpenVINO Toolkit & Model Server:

Intel's OpenVINO plays a significant role in the non-CUDA inference space. It provides tools (like NNCF) to optimize models trained in various frameworks and a runtime engine to execute these optimized models efficiently across Intel's hardware portfolio (CPU, iGPU, dGPU, VPU).

OpenVINO also integrates with ONNX Runtime as an execution provider, and Intel offers the OpenVINO Model Server for serving optimized models.

While some commentary questions its popularity relative to alternatives like NVIDIA's Triton Inference Server, it provides a clear path for inference on Intel hardware.

-

ROCm Inference Libraries (MIGraphX):

AMD provides inference optimization libraries within the ROCm ecosystem, such as MIGraphX, a graph compiler that optimizes trained models (for example, models in ONNX format) for AMD GPUs. It can be used directly or as a backend for higher-level frameworks and standardized runtimes; ONNX Runtime, for instance, exposes it through a MIGraphX execution provider.

-

ONNX Runtime:

The Open Neural Network Exchange (ONNX) format and its corresponding ONNX Runtime engine are crucial enablers of cross-platform inference. ONNX Runtime acts as an abstraction layer, allowing models trained in frameworks like PyTorch or TensorFlow and exported to the ONNX format to be executed on a wide variety of hardware backends through its Execution Provider (EP) interface.

Supported EPs include CUDA, TensorRT (NVIDIA), OpenVINO (Intel), ROCm (AMD), DirectML (Windows), CPU, and others.

This significantly enhances model portability beyond the confines of a single vendor's ecosystem.

-

NVIDIA Triton Inference Server:

While developed by NVIDIA, Triton is an open-source inference server designed for flexibility.

It supports multiple model formats (TensorRT, TensorFlow, PyTorch, ONNX) and backends (including OpenVINO, Python custom backends, ONNX Runtime).

This architecture theoretically allows Triton to serve models using non-CUDA backends if appropriately configured.

There is active discussion and development work on enabling backends like ROCm (via ONNX Runtime) for Triton, which could further position it as a more hardware-agnostic serving solution. However, its primary adoption and optimization focus remain heavily associated with NVIDIA GPUs.

-

Alternatives/Complements to NVIDIA Triton:

The inference serving landscape includes several other solutions. vLLM has emerged as a highly optimized library specifically for LLM inference, utilizing techniques like PagedAttention and Continuous Batching, and reportedly offering better throughput and latency than Triton in some LLM scenarios.

Other options include Kubernetes-native solutions like KServe (formerly KFServing), framework-specific servers like TensorFlow Serving and TorchServe, and integrated cloud provider platforms such as Amazon SageMaker Inference Endpoints and Google Vertex AI Prediction.

The choice often depends on the specific model type (e.g., LLM vs. vision), performance requirements, scalability needs, and existing infrastructure.

-

DirectML (Microsoft):

For Windows environments, DirectML provides a hardware-accelerated API for machine learning that leverages DirectX 12. It can be accessed via ONNX Runtime or other frameworks and supports hardware from multiple vendors, including Intel and AMD, offering another path for non-CUDA acceleration on Windows.
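vLLM's PagedAttention, mentioned in the list above, manages the key/value (KV) cache much as an operating system manages virtual memory: in fixed-size blocks mapped through a per-sequence block table, so memory is claimed as tokens are generated rather than reserved for the maximum sequence length up front. The toy allocator below illustrates only that bookkeeping idea under simplifying assumptions (a single block pool, no prefix sharing, copy-on-write, or preemption). The class and method names are hypothetical and are not vLLM's API.

```python
"""Toy paged KV-cache allocator in the spirit of PagedAttention (hypothetical API)."""


class PagedKVCache:
    """Split the KV cache into fixed-size physical blocks.

    Each sequence keeps a block table mapping its logical blocks to physical
    block ids, so blocks are allocated on demand and freed as soon as the
    sequence finishes.
    """

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve a slot for one more token, taking a new block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks; a real scheduler would preempt")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1
        block = table[length // self.block_size]
        offset = length % self.block_size
        return block, offset  # physical location for this token's K/V entries

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=8, block_size=4)
    for _ in range(6):  # sequence "a" stores 6 tokens -> 2 blocks
        cache.append_token("a")
    for _ in range(3):  # sequence "b" stores 3 tokens -> 1 block
        cache.append_token("b")
    print(cache.block_tables, "free:", len(cache.free_blocks))
    cache.free("a")     # finished sequences release their blocks immediately
    print("free after releasing 'a':", len(cache.free_blocks))
```

Because waste is limited to the unfilled tail of each sequence's last block, more sequences fit in the same memory, which is what lets continuous batching keep the batch full.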

5.4 Implications for Inference Beyond CUDA

The inference stage represents the most fragmented and diverse part of the AI workflow, offering the most significant immediate opportunities for solutions beyond CUDA. The varied hardware targets and optimization priorities (cost, power, latency) create numerous niches where NVIDIA's high-performance, CUDA-centric approach may not be the optimal or only solution. Toolkits explicitly designed for heterogeneity, like OpenVINO and ONNX Runtime, are pivotal in enabling this diversification.

OpenVINO, in particular, provides a mature and well-defined pathway for optimizing and deploying models efficiently on the vast installed base of Intel CPUs and integrated graphics, making AI inference accessible without requiring specialized accelerators. ONNX Runtime acts as a crucial interoperability layer, effectively serving as a universal translator that allows models developed in one framework to run on hardware supported by another vendor's backend (ROCm, OpenVINO, DirectML, etc.). The adoption and continued development of these two technologies significantly lower the barrier for deploying models outside the traditional CUDA/TensorRT stack.
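In ONNX Runtime, this portability largely comes down to the ordered providers list passed when a session is created: the runtime assigns graph nodes to the first listed execution provider that can handle them and falls back to the CPU provider for the rest. The sketch below assumes a hypothetical preference order and helper name; the provider identifiers are the strings ONNX Runtime uses, but which of them are actually present depends on the onnxruntime package or build installed.

```python
"""Pick an ONNX Runtime execution-provider order for whatever hardware is present.

choose_providers and PREFERRED_ORDER are hypothetical; only the provider name
strings come from ONNX Runtime. With onnxruntime installed, the result would be
used roughly as:

    import onnxruntime as ort
    providers = choose_providers(ort.get_available_providers())
    session = ort.InferenceSession("model.onnx", providers=providers)
"""

PREFERRED_ORDER = [
    "TensorrtExecutionProvider",  # NVIDIA (TensorRT)
    "CUDAExecutionProvider",      # NVIDIA (CUDA)
    "MIGraphXExecutionProvider",  # AMD (MIGraphX)
    "ROCMExecutionProvider",      # AMD (ROCm)
    "OpenVINOExecutionProvider",  # Intel hardware via OpenVINO
    "DmlExecutionProvider",       # DirectML on Windows
]

CPU = "CPUExecutionProvider"


def choose_providers(available, preferred=PREFERRED_ORDER):
    """Keep preferred providers that are actually available, in priority order,
    and always finish with the CPU provider as the catch-all fallback."""
    chosen = [p for p in preferred if p in available]
    chosen.append(CPU)
    return chosen


if __name__ == "__main__":
    # Simulated results of ort.get_available_providers() on three machines.
    for available in (
        ["ROCMExecutionProvider", "CPUExecutionProvider"],
        ["OpenVINOExecutionProvider", "CPUExecutionProvider"],
        ["CPUExecutionProvider"],
    ):
        print(available, "->", choose_providers(available))
```

The same model file and application code then run on AMD, Intel, or NVIDIA hardware; only the provider list changes.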

While NVIDIA's Triton Inference Server is powerful and widely used, its potential as a truly hardware-agnostic server remains partially realized. Although its architecture supports multiple backends, including non-CUDA ones like OpenVINO and ONNX Runtime, its primary association, optimization efforts, and community focus are still heavily centered around NVIDIA GPUs and the TensorRT backend. The active exploration of alternatives like vLLM for specific workloads (LLMs) and the ongoing efforts to add robust support for other backends like ROCm suggest that the market perceives a need for solutions beyond what Triton currently offers optimally for non-NVIDIA or highly specialized use cases.

Table 5.1: Inference Solutions Beyond CUDA

| Solution (Hardware + Software Stack/Server) | Target Use Case | Key Features/Optimizations | Framework/Format Compatibility | Relative Performance/Cost Indicator (Qualitative) |
|---|---|---|---|---|
| Intel CPU/iGPU + OpenVINO | Edge, Client, Cost-sensitive Cloud | PTQ/QAT (NNCF), Latency/Throughput modes, Auto-batching, Optimized for Intel Arch | OpenVINO IR, ONNX, TF, PyTorch | Lower cost, wide availability; Performance depends heavily on CPU/iGPU generation and optimization |
| AMD GPU + ROCm / ONNX Runtime | Cloud, Workstation Inference | MIGraphX optimization, HIP, ONNX Runtime ROCm EP | ONNX, PyTorch, TF (via ROCm backend) | Potential cost savings vs NVIDIA; Performance dependent on GPU tier and ROCm maturity |
| Intel dGPU/VPU + OpenVINO | Edge AI, Visual Inference | Optimized for Intel dGPU/VPU hardware, Leverages NNCF | OpenVINO IR, ONNX, TF, PyTorch | Power-efficient options for edge; Performance competitive in target niches |
| AWS Inferentia + Neuron SDK | Cloud Inference (AWS) | ASIC optimized for inference, Low cost per inference, Neuron SDK compiler | TF, PyTorch, MXNet, ONNX | High throughput, low cost on AWS; Limited to AWS environment |
| Generic CPU/GPU + ONNX Runtime | Cross-platform deployment | Hardware abstraction via Execution Providers (CPU, OpenVINO, ROCm, DirectML, etc.) | ONNX (from TF, PyTorch, etc.) | Highly portable; Performance varies significantly based on chosen EP and underlying hardware |
| NVIDIA/AMD GPU + vLLM | High-throughput LLM Inference | PagedAttention, Continuous Batching, Optimized Kernels | PyTorch (HF Models) | Potentially higher LLM throughput/lower latency than Triton in some cases; Primarily GPU-focused |
| FPGA + Custom Runtime | Ultra-low latency, Specialized tasks | Hardware reconfigurability, Optimized data paths | Custom / Specific formats | Very low latency possible; Higher development effort, niche applications |
| Windows Hardware + DirectML / ONNX Runtime | Windows-based applications | Hardware acceleration via DirectX 12 API, Supports Intel/AMD/NVIDIA | ONNX, Frameworks with DirectML support | Leverages existing Windows hardware acceleration; Performance varies with GPU |

6. Industry Outlook and Conclusion

6.1 Market Snapshot: Current Share and Growth Trends

The AI hardware market, particularly for data center compute, remains heavily dominated by NVIDIA. Current estimates place NVIDIA's market share for AI accelerators and data center GPUs in the 80% to 92% range. Despite this dominance, competitors are present and making some inroads. AMD has seen its data center GPU share grow slightly, reaching approximately 4% in 2024, driven by adoption from major cloud providers. Other players like Huawei hold smaller shares (around 2%), and Intel aims to capture market segments with its Gaudi accelerators and broader oneAPI strategy.

The overall market is experiencing explosive growth. Projections for the AI server hardware market suggest growth from around $157 billion in 2024 to potentially trillions by the early 2030s, with a compound annual growth rate (CAGR) estimated around 38%. Similarly, the AI data center market is projected to grow from roughly $14 billion in 2024 at a CAGR of over 28% through 2030. The broader AI chip market is forecast to surpass $300 billion by 2030. Within these markets, GPUs remain the dominant hardware component for AI, inference workloads constitute the largest function segment, cloud deployment leads over on-premises, and North America is the largest geographical market.
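The growth figures above follow the standard compound-growth formula: value after t years = starting value × (1 + CAGR)^t. The short sketch below applies it to the quoted starting points and rates purely to show what they imply; it is arithmetic on the cited estimates, not an independent forecast, and the helper names are illustrative.

```python
"""Compound annual growth rate (CAGR) arithmetic applied to the quoted estimates."""


def project(value_start, cagr, years):
    """Value after `years` of compound growth: value_start * (1 + cagr) ** years."""
    return value_start * (1 + cagr) ** years


def implied_cagr(value_start, value_end, years):
    """CAGR implied by growing from value_start to value_end over `years`."""
    return (value_end / value_start) ** (1 / years) - 1


if __name__ == "__main__":
    # Starting points and rates as quoted in the text (USD billions, base year 2024).
    for year in (2030, 2032):
        value = project(157, 0.38, year - 2024)
        print(f"AI server hardware, {year}: ~${value:,.0f}B")
    print(f"AI data center, 2030: ~${project(14, 0.28, 2030 - 2024):,.0f}B")
    print(f"Check: 100 -> 121 over 2 years implies {implied_cagr(100, 121, 2):.1%} CAGR")
```

At the quoted 38% rate, $157 billion compounds to roughly $1.08 trillion by 2030 and about $2.07 trillion by 2032, consistent with the "trillions by the early 2030s" projection; the $14 billion data center figure at 28% reaches roughly $62 billion by 2030.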

6.2 Adoption Progress and Remaining Hurdles for CUDA Alternatives

Significant efforts are underway to build viable alternatives to the CUDA ecosystem. AMD's ROCm has matured, gaining crucial support within PyTorch and securing design wins with hyperscalers for its Instinct accelerators. Intel's oneAPI offers a comprehensive vision for heterogeneous computing backed by the UXL Foundation, and its OpenVINO toolkit provides a strong solution for inference optimization and deployment on Intel hardware. Abstraction layers and compilers like PyTorch 2.0, OpenAI Triton, and OpenXLA are evolving to provide more hardware flexibility.

Despite this progress, substantial hurdles remain for widespread adoption of CUDA alternatives. The primary challenge continues to be the maturity, stability, performance consistency, and breadth of the software ecosystems compared to CUDA. Developers often face a steeper learning curve, more complex debugging, and potential performance gaps when moving away from the well-trodden CUDA path. The sheer inertia of existing CUDA codebases and developer familiarity creates significant resistance to change. Furthermore, the alternative landscape is fragmented, lacking a single, unified competitor to CUDA, which can dilute efforts and slow adoption. While the high cost of NVIDIA hardware is a strong motivator for exploring alternatives, these software and ecosystem challenges often temper the speed of transition, especially for mission-critical training workloads.

6.3 The Future Trajectory: Towards a More Heterogeneous AI Compute Landscape?

The future of AI compute appears poised for increased heterogeneity, although the pace and extent of this shift remain subject to competing forces. On one hand, NVIDIA continues to innovate aggressively, launching new architectures like Blackwell, expanding its CUDA-X libraries, and building comprehensive platforms like DGX systems and NVIDIA AI Enterprise. Its deep ecosystem integration and performance leadership, particularly in high-end training, provide a strong defense for its market share.

On the other hand, the industry push towards openness, cost reduction, and strategic diversification is undeniable. Events like the Beyond CUDA Summit, initiatives like the AI Alliance (including AMD, Intel, Meta, etc.), the UXL Foundation, and the significant investments by hyperscalers in custom silicon or alternative suppliers all signal a concerted effort to reduce dependence on NVIDIA's proprietary stack. Geopolitical factors and supply chain vulnerabilities, particularly the heavy reliance on TSMC for cutting-edge chip manufacturing, also represent potential risks for NVIDIA's long-term dominance and could further incentivize diversification.

The most likely trajectory involves a gradual diversification, particularly noticeable in the inference space where hardware requirements are more varied and cost/power efficiency are paramount. Toolkits like OpenVINO and runtimes like ONNX Runtime will continue to facilitate deployment on non-NVIDIA hardware. In training, while NVIDIA is expected to retain its lead in the highest-performance segments in the near term, competitors like AMD and Intel will likely continue to gain share, especially among cost-sensitive enterprises and hyperscalers actively seeking alternatives. The success of emerging programming models like Mojo could also influence the landscape if they gain significant traction.

6.4 Concluding Remarks on the Viability and Impact of the "Beyond CUDA" Movement

Synthesizing the analysis of the GTC video's focus on compute beyond CUDA and the broader industry research, it is clear that NVIDIA's CUDA moat remains formidable. Its strength lies not just in performant hardware but, more critically, in the deeply entrenched software ecosystem, developer inertia, and high switching costs accumulated over nearly two decades. Overcoming this requires more than just competitive silicon; it demands mature, stable, easy-to-use software stacks, seamless integration with dominant AI frameworks, and compelling value propositions.

However, the "Beyond CUDA" movement is not merely aspirational; it is a tangible trend driven by significant investment, strategic necessity, and a growing ecosystem of alternatives. Progress is evident across hardware (AMD Instinct, Intel Gaudi, TPUs, custom silicon) and software (ROCm, oneAPI, OpenVINO, Triton, PyTorch 2.0, ONNX Runtime). While a complete upheaval of CUDA's dominance appears unlikely in the immediate future, the landscape is undeniably shifting towards greater heterogeneity. Inference deployment is diversifying rapidly, and competition in the training space is intensifying. The ultimate pace and extent of this transition will depend crucially on the continued maturation and convergence of alternative software ecosystems and their ability to provide a developer experience and performance level that can effectively challenge the CUDA incumbency. The coming years will be critical in determining whether the industry successfully cultivates a truly multi-vendor, open, and competitive AI compute environment.