Motivation

- The original UNIX file system was FFS's forerunner. It was simple and easy to use, but it had some serious problems:

- Poor performance was the central issue: as measured in this paper, the old file system used only about 3% of the disk bandwidth in some circumstances, so throughput was poor.

- Low locality was the cause: the original UNIX file system treated the disk as if it were random-access memory, so inodes and data blocks ended up scattered across the disk.

- Fragmentation was another issue, because free space was not managed carefully. As files were created and deleted, the free list ended up pointing to blocks scattered across the disk, so reading a logically contiguous file meant seeking back and forth across the disk. Disk defragmentation tools were the initial remedy for this problem.

- The original block size was too small (512 bytes). A small block was good for minimizing internal fragmentation (waste within the block) but bad for transfers, because each block could incur its own positioning overhead.

Solution

FFS divides the disk into cylinder groups and places related files in the same group, so that accessing two such files one after the other does not require long seeks across the disk.

- The superblock is replicated across the cylinder groups, and the location of each copy is rotated from group to group. This reduces the risk of data loss from superblock corruption.

Layout guidelines

The basic mantra is simple: keep related things close together and unrelated things apart.

- Placing directories: FFS looks for a cylinder group with a low number of allocated directories and a high number of free inodes, and puts the directory there.

- Placing files: a file's data blocks are allocated in the same cylinder group as its inode, and a file's inode is placed in the same cylinder group as the other files in its directory.

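The directory and file placement rules above can be sketched as follows. This is a minimal illustration, not the FFS implementation: the `CylinderGroup` record, both function names, the tie-break order (fewest directories first, then most free inodes), and the fallback when the parent's group is full are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class CylinderGroup:
    # Hypothetical per-group bookkeeping, just enough for the heuristic.
    index: int
    free_inodes: int
    allocated_dirs: int


def pick_group_for_directory(groups):
    """Pick a group with few allocated directories and many free inodes.

    Assumed ordering: fewest directories first, most free inodes as tie-break.
    """
    candidates = [g for g in groups if g.free_inodes > 0]
    if not candidates:
        raise RuntimeError("no free inodes in any cylinder group")
    return min(candidates, key=lambda g: (g.allocated_dirs, -g.free_inodes))


def pick_group_for_file(parent_dir_group, groups):
    """Keep a file's inode in its parent directory's group when there is room."""
    if parent_dir_group.free_inodes > 0:
        return parent_dir_group
    # Assumed fallback: reuse the directory heuristic when the group is full.
    return pick_group_for_directory(groups)


if __name__ == "__main__":
    groups = [
        CylinderGroup(index=0, free_inodes=10, allocated_dirs=3),
        CylinderGroup(index=1, free_inodes=50, allocated_dirs=1),
        CylinderGroup(index=2, free_inodes=80, allocated_dirs=1),
    ]
    print(pick_group_for_directory(groups).index)
    print(pick_group_for_file(groups[0], groups).index)
```

In this sketch a new directory lands in group 2 (tied for fewest directories, most free inodes), while a new file stays in its parent directory's group.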

Block Size

The file system block size is now 4096 bytes (4 KB) instead of the previous 512 bytes. This improves performance because each disk transfer moves more data, and because larger files can be described without visiting indirect blocks (the direct blocks now hold more data).
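A small worked calculation makes both effects concrete. It is only a sketch: the count of 12 direct pointers per inode and the 40 KB example file are assumptions chosen for illustration, and the helper names are hypothetical.

```python
NUM_DIRECT_POINTERS = 12  # assumption: direct block pointers held in one inode


def direct_coverage(block_size, num_direct=NUM_DIRECT_POINTERS):
    """Bytes of file data reachable through direct pointers alone."""
    return block_size * num_direct


def transfers_needed(file_size, block_size):
    """Number of block-sized transfers needed to read the whole file."""
    return -(-file_size // block_size)  # ceiling division


if __name__ == "__main__":
    file_size = 40 * 1024  # hypothetical 40 KB file
    for block_size in (512, 4096):
        print(
            block_size,
            direct_coverage(block_size),
            transfers_needed(file_size, block_size),
        )
```

Under these assumptions, 512-byte blocks cover only 6 KB through direct pointers and need 80 transfers for the 40 KB file, while 4096-byte blocks cover 48 KB and need 10 transfers, so the same file no longer touches an indirect block.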

Experiments

Table 2 shows that reads and writes use far more of the disk bandwidth: utilization rises from about 3% with the old file system to as much as 47% with FFS.

FFS also added several functionality improvements that have since become standard in modern operating systems and that probably helped its adoption:

- Long file names: file names can now be of nearly arbitrary length (previously 14 characters, now up to 255 characters).

- File locking: programs can now take advisory shared or exclusive locks at the granularity of an individual file.

- Symbolic links: Users now have considerably greater flexibility because they may construct "aliases" for any other file or directory on a system.

- Renaming files: a rename() operation that renames a file atomically.

- Quotas: Limit the number of inodes and disk blocks a user may receive from the file system.

- Crash consistency: FFS relies on a clean unmount of the file system to avoid consistency issues. After a crash or power loss, users have to run the lengthy file system consistency checker, fsck, and wait for it to finish; it detects inconsistencies and attempts to repair them, with no guarantee of full recovery.
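To make the locking and rename items above concrete, here is a minimal sketch using Python's standard `fcntl.flock` and `os.replace`, which wrap the flock() and rename() system calls on Unix-like systems. The helper names `try_exclusive_lock` and `atomic_write` are hypothetical, and the write-to-temp-then-rename pattern is a common usage of atomic rename rather than something the paper prescribes.

```python
import fcntl
import os
import tempfile


def try_exclusive_lock(f):
    """Try to take an exclusive advisory lock without blocking.

    Returns True on success, False if another open file holds a lock.
    Advisory means cooperating programs must all call this; it does not
    stop a program that simply ignores the lock.
    """
    try:
        fcntl.flock(f, fcntl.LOCK_EX | fcntl.LOCK_NB)
        return True
    except BlockingIOError:
        return False


def atomic_write(path, data):
    """Replace the file at `path` so readers see the old or new content, never a mix."""
    dir_name = os.path.dirname(os.path.abspath(path))
    # The temp file must live on the same file system for rename() to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=dir_name)
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())
        os.replace(tmp_path, path)  # rename() under the hood
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

A second open of the same file that calls `try_exclusive_lock` gets False until the first holder releases the lock with `fcntl.flock(f, fcntl.LOCK_UN)` or closes the file.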

Evaluation effectiveness

I generally believe the paper, since it reports raising disk bandwidth utilization to as much as 47%, compared with about 3% for the previous file system. I have three questions about this paper: (1) how the advisory read-write (shared/exclusive) locks perform compared with mandatory locks under different workloads; (2) block wear leveling; (3) slow traversal of directory inodes (here EXT4 fast start resolves this, HERMES).

Novelty

Spreading a file's blocks across the disk can hurt performance, especially when the file is accessed sequentially. This problem can be solved by choosing the block size carefully. Specifically, if the block size is large enough, the file system spends most of its time transferring data from disk and only a (relatively) small amount of time seeking between blocks. This process of reducing overhead by doing more work per operation is called amortization, and it is a common technique in computer systems.
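The amortization argument can be checked with a short calculation. This is a sketch under assumed numbers, not measurements from the paper: a hypothetical disk with a 40 MB/s peak transfer rate and a 10 ms average positioning time (seek plus rotation), and helper names invented for the example.

```python
def bandwidth_fraction(chunk_bytes, transfer_rate, positioning_s):
    """Fraction of peak bandwidth achieved when each chunk costs one positioning delay."""
    transfer_s = chunk_bytes / transfer_rate
    return transfer_s / (transfer_s + positioning_s)


def chunk_for_fraction(target, transfer_rate, positioning_s):
    """Chunk size in bytes needed to reach `target` (0 < target < 1) of peak bandwidth.

    Solves target = t / (t + positioning_s) for t, then converts time to bytes.
    """
    return target / (1.0 - target) * transfer_rate * positioning_s


if __name__ == "__main__":
    rate = 40 * 1024 * 1024  # assumed peak transfer rate, bytes per second
    positioning = 0.010      # assumed average positioning time, seconds
    for size in (512, 4096, 64 * 1024):
        print(size, round(bandwidth_fraction(size, rate, positioning), 4))
    print(chunk_for_fraction(0.5, rate, positioning))
```

With these assumed numbers, reaching 50% of peak bandwidth takes about 409.6 KB of data per positioning delay, and both 512-byte and 4096-byte transfers stay below 1% of peak if every block needs its own seek. That is why larger blocks help only in combination with placing a file's blocks close together.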

The second clever mechanism is a disk layout optimized for performance. The problem arises when a file is placed on consecutive sectors of a track. Suppose FFS first reads block 0 and, when that read completes, requests block 1. By this time the head has already passed over block 1, so to read it the disk must wait for almost a full rotation. FFS solves this problem with a different data layout. By staggering the blocks, FFS has enough time to request the next block before it passes under the head. FFS can work out, for each disk, how many blocks should be skipped during placement to avoid extra rotations; this technique is called parameterization. Such a layout gets only 50% of the peak bandwidth, since you have to go around each track twice to read each block once. Fortunately, modern disks are smarter: the disk reads the entire track into an internal cache (a track buffer), and subsequent reads of that track are served from the cache. So the file system no longer has to worry about these low-level details.
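A minimal simulation of the staggered layout described above, assuming a hypothetical track of 12 blocks and a host that needs one block-time to issue its next request. The function names and the simple timing model (time counted in block-times, no seek cost) are illustrative assumptions, not the paper's method.

```python
def interleaved_layout(n_slots, skip):
    """Return a list where entry i is the logical block stored in physical slot i.

    Consecutive logical blocks are placed `skip` slots apart; when the target
    slot is already taken, the next free slot is used instead.
    """
    layout = [None] * n_slots
    pos = 0
    for block in range(n_slots):
        while layout[pos] is not None:
            pos = (pos + 1) % n_slots
        layout[pos] = block
        pos = (pos + skip + 1) % n_slots
    return layout


def slot_times_to_read(layout, delay):
    """Block-times needed to read logical blocks 0..n-1 in order.

    The head is over slot (t % n) at time t. After each read the host needs
    `delay` block-times before it can issue the next request.
    """
    n = len(layout)
    slot_of = {block: slot for slot, block in enumerate(layout)}
    t = 0
    for block in range(n):
        wait = (slot_of[block] - t) % n  # rotate until the block is under the head
        t += wait + 1                    # wait, then read one block
        if block != n - 1:
            t += delay                   # host think time before the next request
    return t


if __name__ == "__main__":
    sequential = list(range(12))
    staggered = interleaved_layout(12, skip=1)
    print(staggered)
    print(slot_times_to_read(sequential, delay=1) / 12, "rotations")
    print(slot_times_to_read(staggered, delay=1) / 12, "rotations")
```

Under these assumptions the sequential layout needs 12 rotations to read the track, because every block after the first just misses the head, while the staggered layout needs 2 rotations, which corresponds to the 50% of peak bandwidth mentioned above.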

SoTA connection

A recent paper, HTMFS (FAST '22), still cites this paper when discussing fsck. FFS offers no consistency guarantee if a crash happens before a clean unmount.

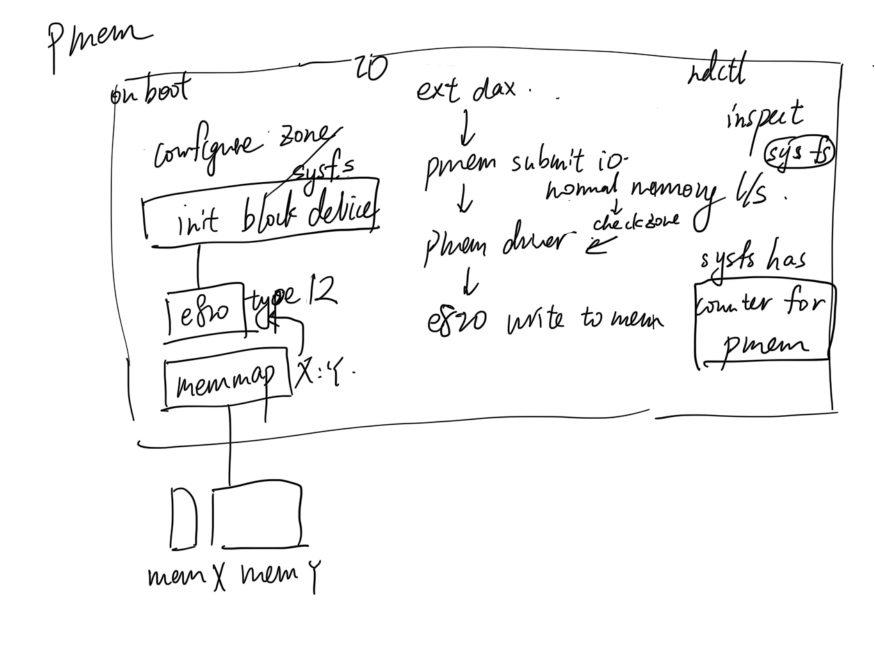

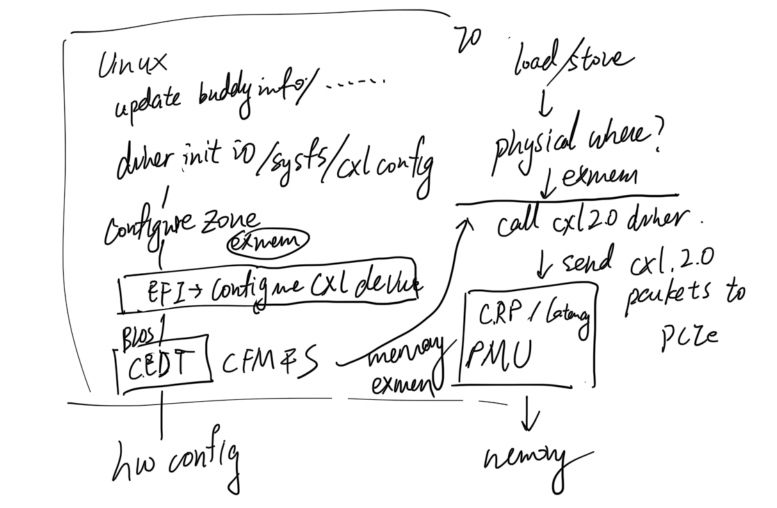

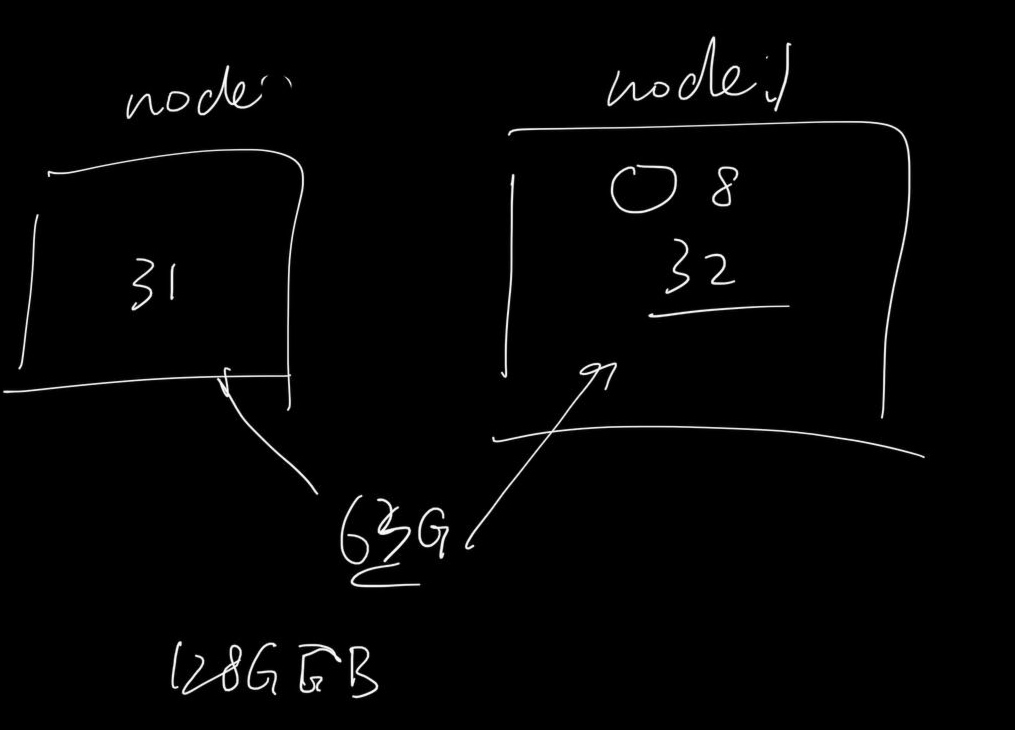

EXT4, F2FS, and many PM file systems are clearly descendants of FFS. However, current file systems focus more on the performance of the software stack on fast storage media such as CXL-attached persistent memory. We've seen hacks in software stacks such as mapping operations like write combining to firmware, or wrapping them in a fast data structure like ART. In terms of journaling and firmware file systems, the SoTA FS stack draws more on LFS or PLFS than on FFS.

Reference

- HTMFS: Strong Consistency Comes for Free with Hardware Transactional Memory in Persistent Memory File Systems

- https://ext4.wiki.kernel.org/index.php/Main_Page

- https://gavv.net/articles/file-locks/

- https://pages.cs.wisc.edu/~remzi/OSTEP/file-ffs.pdf