
Problem Setup

I found in the Linux manual that, to split memory X:Y across two NUMA nodes, I can leverage the cpuset policy, which takes priority over the cgroup memory policy. A formal description of this lies here and there.

Bad attempt using cgroup

So I set up the following cgconfig.conf:

    group daemons {
        cpuset {
            cpuset.mems = 0,1;
            cpuset.cpus = 0-30,31-63;
        }
        memory {
            memory.limit_in_bytes = 63G;
        }
    }

cgcreate -t uid:gid -a uid:gid -g subsystems:path

The same effect can be achieved by creating the cgroups by hand and attaching a test process:

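    # Create one cgroup per controller (cgroup v1 layout).
    mkdir /sys/fs/cgroup/memory/numa
    mkdir /sys/fs/cgroup/cpuset/numa
    # Configure the limits first: a v1 cpuset rejects tasks while
    # cpuset.mems or cpuset.cpus is still empty.
    echo $((10 * 1024 * 1024 * 1024)) > /sys/fs/cgroup/memory/numa/memory.limit_in_bytes
    echo 0-1 > /sys/fs/cgroup/cpuset/numa/cpuset.mems
    echo 0-12 > /sys/fs/cgroup/cpuset/numa/cpuset.cpus
    # Start the test program pinned to CPU 0 and move it into both cgroups.
    taskset -c 0 ./a.out &
    pid1=$!
    echo $pid1 > /sys/fs/cgroup/memory/numa/cgroup.procs
    echo $pid1 > /sys/fs/cgroup/cpuset/numa/cgroup.procs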
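Before measuring anything, it is worth checking that the cpuset actually applies to the process. A minimal sketch, assuming the kernel exposes the usual `Cpus_allowed_list` and `Mems_allowed_list` fields in `/proc/<pid>/status`; the function name `allowed_lists` is made up for this post:

```shell
#!/usr/bin/env bash
# Print the CPU list and memory-node list the kernel currently allows for a task.
# $1 is a status file, normally /proc/<pid>/status.
allowed_lists() {
    awk -F':[ \t]*' '
        $1 == "Cpus_allowed_list" { print "cpus=" $2 }
        $1 == "Mems_allowed_list" { print "mems=" $2 }
    ' "$1"
}
```

Calling `allowed_lists /proc/$pid1/status` after the process has been attached should report the values written to cpuset.cpus and cpuset.mems, narrowed further by any taskset pinning.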

Evaluation of assumption

Let's test whether the assumption is correct. I malloc 12 GB inside the cgroup and run numastat to read the process's numa_maps; the results are below.

| taskset | cpuset | Memory split (X:Y) |
|---|---|---|
| taskset -c 15 | 15-17 | 7:4 |
| taskset -c 15 | 15-23 | 6:5 |
| taskset -c 23 | 15-23 | 0:12 |
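The per-node numbers can also be read without numastat by summing the `N<node>=<pages>` fields in `/proc/<pid>/numa_maps`. This is a rough sketch rather than the exact method used for the measurements above; the function name `numa_pages_per_node` is hypothetical, and it counts pages, so mappings with different page sizes are not weighted.

```shell
#!/usr/bin/env bash
# Sum the per-node page counts of every mapping listed in a numa_maps file.
# $1 is normally /proc/<pid>/numa_maps.
numa_pages_per_node() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                # A field such as "N0=1024" is the page count of this mapping on node 0.
                if ($i ~ /^N[0-9]+=[0-9]+$/) {
                    split($i, kv, "=")
                    pages[substr(kv[1], 2)] += kv[2]
                }
            }
        }
        END {
            for (node in pages) printf "node%s %d\n", node, pages[node]
        }
    ' "$1" | sort
}
```

Running `numa_pages_per_node /proc/$pid1/numa_maps` while the test program holds its allocation prints one line per node, from which the X:Y ratio follows directly.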

My reading is that the OS honors the X:Y split only at the moment of the initial allocation; after that, pages follow the page-migration mechanism toward the CPU selected by taskset, which may be an outcome of QPI/UPI QoS. Also, the BSS section seems unable to migrate, so it keeps being allocated on a single NUMA node after the process starts.

DPDK solution

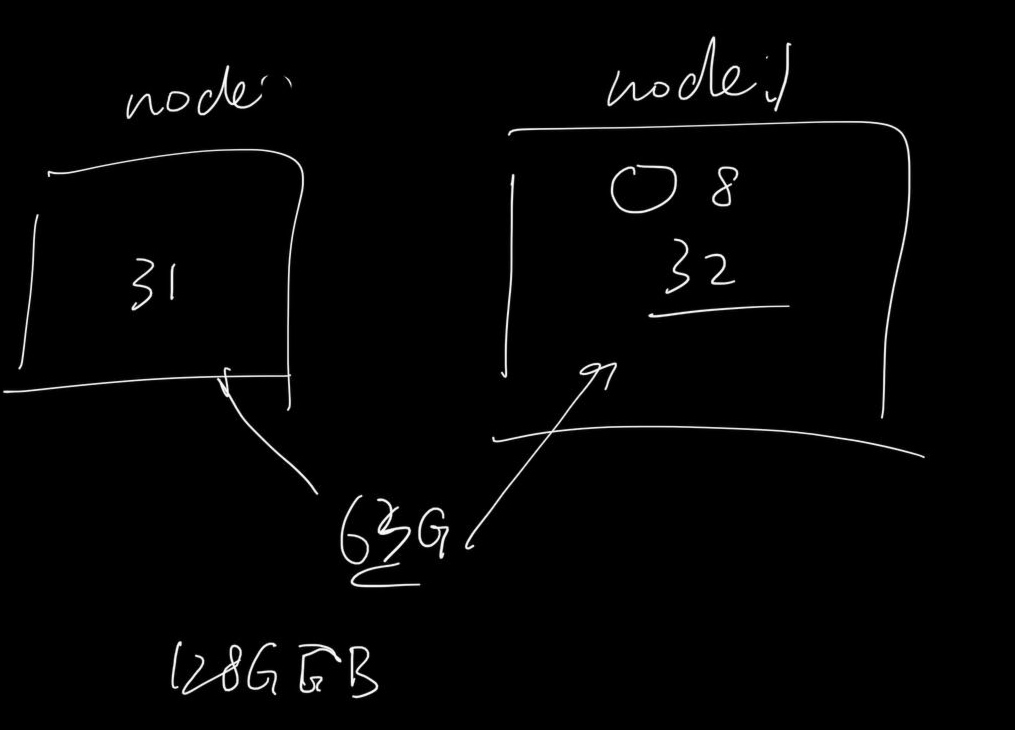

DPDK maintains its own memory in so-called memory pools. You can rely on its memory management and run ./app --socket-mem 0,31,1,32, where --socket-mem takes a comma-separated list of per-socket amounts in megabytes.

Disabling memory channels in hardware

    victoryang00@ares:~$ ls /sys/devices/system/memory/memory*
    /sys/devices/system/memory/memory0:
    node0 online phys_device phys_index power removable state subsystem uevent valid_zones
    ...
    /sys/devices/system/memory/memory33:
    node1 online phys_device phys_index power removable state subsystem uevent valid_zones
    ...

Writing 0 to a block's online file takes that physical memory out of use, much like disabling CPU hyper-threading. That resolves the problem. However, the granularity is one memory channel block (2 GB), because the manual specifies that the memory allocator assigns pages on these 2 GB blocks.
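The offlining step can be scripted along these lines. This is a sketch under a few assumptions: the function names are made up, the sysfs root is a parameter only so the functions can be tried against a scratch directory, and on a real machine the write needs root and the kernel may refuse blocks that hold unmovable pages.

```shell
#!/usr/bin/env bash
# List the memory block directories that belong to NUMA node $1.
# $2 is the sysfs root (default: /sys/devices/system/memory).
blocks_on_node() {
    local node=$1 root=${2:-/sys/devices/system/memory} dir
    for dir in "$root"/memory[0-9]*; do
        # A block on node N contains an entry named nodeN.
        [ -e "$dir/node$node" ] && echo "$dir"
    done
    return 0
}

# Take up to $2 blocks of node $1 offline by writing 0 to their "online" file.
# Prints how many blocks were actually taken offline.
offline_blocks() {
    local node=$1 count=$2 root=${3:-/sys/devices/system/memory} dir done_n=0
    for dir in $(blocks_on_node "$node" "$root"); do
        [ "$done_n" -ge "$count" ] && break
        # The write fails (and the block is skipped) if the kernel refuses it.
        if echo 0 > "$dir/online"; then
            done_n=$((done_n + 1))
        fi
    done
    echo "$done_n"
}
```

For example, `offline_blocks 1 4` would try to remove four blocks from node 1, shifting the usable memory ratio between the nodes in steps of one block. Writing 1 to the same online file brings a block back.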

Last Solution

For now, the best option is to use my solution based on eBPF or Bede Linux.