文章目录[隐藏]

Collective communication

- Important as the basis for many parallel algorithms.

- Implemented using multiple point to point communications, possibly in parallel.

- Assume communicating m words (without contention) between a source and destination takes \(t_{s} + m t_{w}\) time.

- Ignore the the distance of the message, since per hop latency th is usually small.

- With contention c, time becomes \(t_{s} + c m t_{w}\).

- Implementations depend on communication hardware architecture.

- Operations

- Broadcast and reduction.

- All-to-all broadcast and reduction.

- All-reduce, prefix sum.

- Scatter and gather.

- All-to-all scatter and reduce.

- Circular shift.

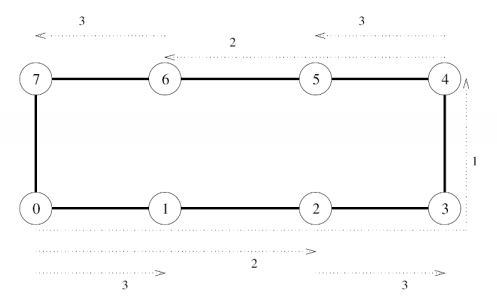

Broadcast and reduction on ring

just cut them into halves.

cost

- With p processors, log p steps.

- In each step, a processor sends a size m message.

- Total time$ (t_{s} + m t_{w}) log p$ take \((t_{s} + m t_{w})\) as const

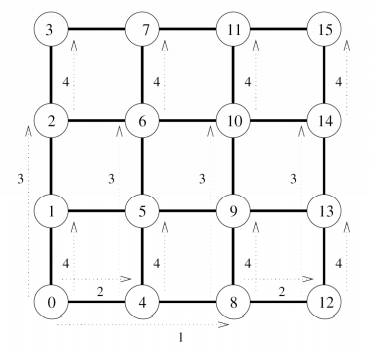

Broadcast on mesh

algorithm

- Root first broadcasts along its row using ring algorithm.

- The nodes of root’s row then broadcast along their columns using ring algorithm.

cost

- \(log \sqrt{p} = (log p) / 2\) steps along row, same along columns.

- Total time (ts + m tw) log p.log

- p = (log p) / 2 steps along row, same along columns.

- Total time (ts + m tw) log p.

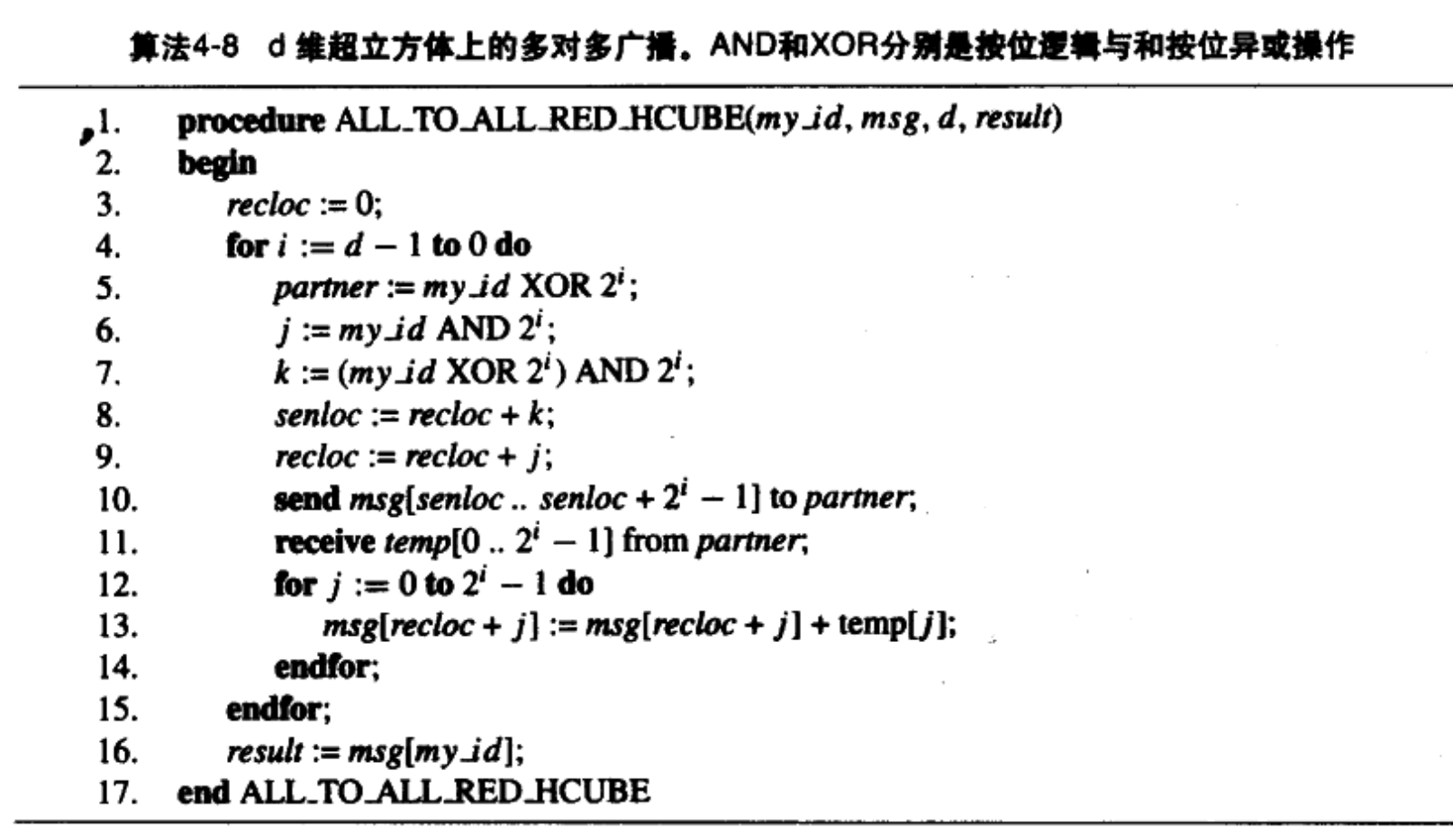

Broadcast on hypercube

algorithm

RING CAN BE PROJECTED TO THE HYPERCUBE but with more congestion.

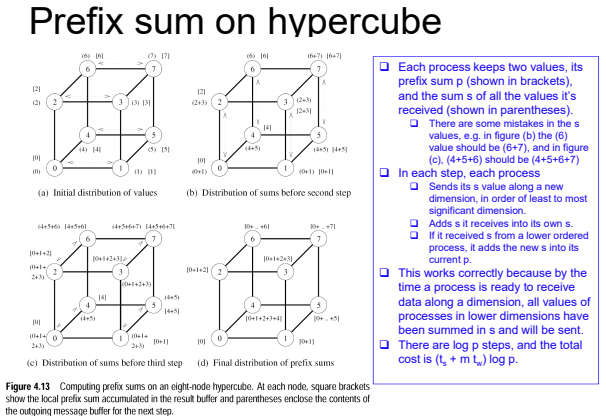

prefix sum on hypercube