❯ curlie https://alx.sh | sudo sh (base)

HTTP/2 200

server: nginx/1.21.1

date: Sat, 19 Mar 2022 15:19:56 GMT

content-type: text/plain; charset=utf-8

content-length: 967

cache-control: max-age=300

content-security-policy: default-src 'none'; style-src 'unsafe-inline'; sandbox

etag: "af389cf3253fe3f924350c99c293434d1c78883b3c51676bf954211c7bb0872b"

strict-transport-security: max-age=31536000

x-content-type-options: nosniff

x-frame-options: deny

x-xss-protection: 1; mode=block

x-github-request-id: 821E:6DAB:83C117:8E8F7F:623518DF

accept-ranges: bytes

via: 1.1 varnish

x-served-by: cache-fra19151-FRA

x-cache: HIT

x-cache-hits: 1

x-timer: S1647703197.985480,VS0,VE1

vary: Authorization,Accept-Encoding,Origin

access-control-allow-origin: *

x-fastly-request-id: 7ac18bfda3e671762cc598d7521b9663e583d871

expires: Sat, 19 Mar 2022 15:24:56 GMT

source-age: 195

strict-transport-security: max-age=31536000;

Bootstrapping installer:

Checking version...

Version: v0.3.5

Downloading...

Extracting...

Initializing...

Welcome to the Asahi Linux installer!

This installer is in an alpha state, and may not work for everyone.

It is intended for developers and early adopters who are comfortable

debugging issues or providing detailed bug reports.

Please make sure you are familiar with our documentation at:

https://alx.sh/w

Press enter to continue.

By default, this installer will hide certain advanced options that

are only useful for developers. You can enable expert mode to show them.

» Enable expert mode? (y/N): y

Collecting system information...

Product name: MacBook Pro (16-inch, 2021)

SoC: Apple M1 Max

Device class: j316cap

Product type: MacBookPro18,2

Board ID: 0xa

Chip ID: 0x6001

System firmware: iBoot-7459.101.2

Boot UUID: 57F7E9CF-77CE-4D0F-83A4-15967BA49F69

Boot VGID: 57F7E9CF-77CE-4D0F-83A4-15967BA49F69

Default boot VGID: 57F7E9CF-77CE-4D0F-83A4-15967BA49F69

Boot mode: macOS

OS version: 12.3 (21E230)

System rOS version: 12.3 (21E230)

No Fallback rOS

Login user: yiweiyang

Collecting partition information...

System disk: disk0

Collecting OS information...

Partitions in system disk (disk0):

1: APFS [Macintosh HD] (850.00 GB, 6 volumes)

OS: [B*] [Macintosh HD] macOS v12.3 [disk3s1, 57F7E9CF-77CE-4D0F-83A4-15967BA49F69]

2: (free space: 144.66 GB)

3: APFS (System Recovery) (5.37 GB, 2 volumes)

OS: [ ] recoveryOS v12.3 [Primary recoveryOS]

[B ] = Booted OS, [R ] = Booted recovery, [? ] = Unknown

[ *] = Default boot volume

Using OS 'Macintosh HD' (disk3s1) for machine authentication.

Choose what to do:

f: Install an OS into free space

r: Resize an existing partition to make space for a new OS

q: Quit without doing anything

» Action (f): f

Choose an OS to install:

1: Asahi Linux Desktop

2: Asahi Linux Minimal (Arch Linux ARM)

3: UEFI environment only (m1n1 + U-Boot + ESP)

4: Tethered boot (m1n1, for development)

» OS: 1

Downloading OS package info...

.

Minimum required space for this OS: 15.00 GB

Available free space: 144.66 GB

How much space should be allocated to the new OS?

You can enter a size such as '1GB', a fraction such as '50%',

the word 'min' for the smallest allowable size, or

the word 'max' to use all available space.

» New OS size (max): max

The new OS will be allocated 144.66 GB of space,

leaving 167.94 KB of free space.

Enter a name for your OS

» OS name (Asahi Linux):

Choose the macOS version to use for boot firmware:

(If unsure, just press enter)

1: 12.3

» Version (1): 1

Using macOS 12.3 for OS firmware

Downloading macOS OS package info...

.

Creating new stub macOS named Asahi Linux

Installing stub macOS into disk0s5 (Asahi Linux)

Preparing target volumes...

Checking volumes...

Beginning stub OS install...

++

Setting up System volume...

Setting up Data volume...

Setting up Preboot volume...

++++++++++

Setting up Recovery volume...

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Wrapping up...

Stub OS installation complete.

Adding partition EFI (500.17 MB)...

Formatting as FAT...

Adding partition Root (144.16 GB)...

Collecting firmware...

Installing OS...

Copying from esp into disk0s4 partition...

+

Copying firmware into disk0s4 partition...

Extracting root.img into disk0s7 partition...

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++Error downloading data (IncompleteRead(10240000 bytes read, 1294336 more expected)), retrying... (1/5)

+Error downloading data (IncompleteRead(1130496 bytes read, 10403840 more expected)), retrying... (2/5)

+++++++++++++++++++++++++++++++++++++Error downloading data (IncompleteRead(5979282 bytes read, 5555054 more expected)), retrying... (1/5)

+++++++

Preparing to finish installation...

Collecting installer data...

To continue the installation, you will need to enter your macOS

admin credentials.

Password for yiweiyang:

Setting the new OS as the default boot volume...

Installation successful!

Install information:

APFS VGID: 052A3A5A-E253-4B46-AF6A-F9615F39844B

EFI PARTUUID: 487bfda1-77ae-4a4c-8462-3cba91f073a7

To be able to boot your new OS, you will need to complete one more step.

Please read the following instructions carefully. Failure to do so

will leave your new installation in an unbootable state.

Press enter to continue.

When the system shuts down, follow these steps:

1. Wait 15 seconds for the system to fully shut down.

2. Press and hold down the power button to power on the system.

* It is important that the system be fully powered off before this step,

and that you press and hold down the button once, not multiple times.

This is required to put the machine into the right mode.

3. Release it once 'Entering startup options' is displayed,

or you see a spinner.

4. Wait for the volume list to appear.

5. Choose 'Asahi Linux'.

6. You will briefly see a 'macOS Recovery' dialog.

* If you are asked to 'Select a volume to recover',

then choose your normal macOS volume and click Next.

You may need to authenticate yourself with your macOS credentials.

7. Once the 'Asahi Linux installer' screen appears, follow the prompts.

Press enter to shut down the system.

Twitter card `ERROR: Failed to fetch page due to: ChannelClosed` pitfalls behind Nginx proxy

Twitter would not fetch the card for my newly set up WordPress site behind Nginx. It failed with the error `ERROR: Failed to fetch page due to: ChannelClosed`.

I captured the traffic on my Psychz VPS with `tcpdump -i eth1 -X -e -w test.cap`. It seemed that the handshakes came back with a different SSL/TLS version.

The `ssl_ciphers 'ECDHE-RSA-AES256-GCM-SHA512:DHE-RSA-AES256-GCM-SHA512:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:!3DES';` setting suggested at https://wordpress.org/support/topic/twitter-card-images-not-showing/ did not help. Eventually, I found that I had not switched on Cloudflare's Full encryption mode. After I enabled it, everything worked.

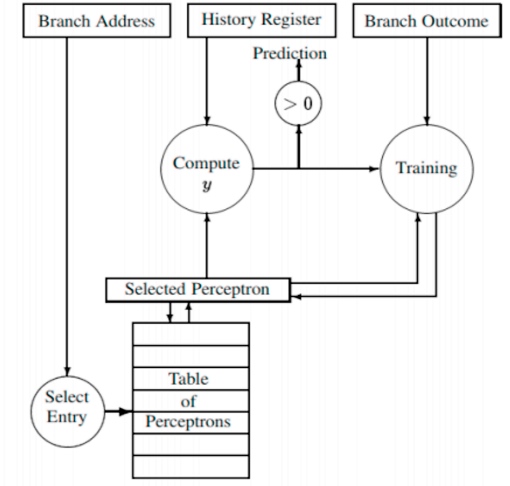

[Computer Architecture] Sniper lab2

The code is at http://victoryang00.xyz:5012/victoryang/sniper_test/tree/lab2.

The lab was to implement a hashed perceptron branch predictor, following the paper "Dynamic Branch Prediction with Perceptrons".

The new predictor is registered in `common/performance_model/branch_predictor.cc`:

```cpp
#include "perceptron_branch_predictor.h"
...
else if (type == "perceptron")
{
   return new PerceptronBranchPredictor("branch_predictor", core_id);
}
```

The modification in the cfg:

```
[perf_model/branch_predictor]
type = perceptron # add Perceptron
mispredict_penalty=8 # Reflects just the front-end portion (approx) of the penalty for Interval Simulation
```

The class is declared in `perceptron_branch_predictor.h`. It keeps the following member variables:

```cpp
struct PHT {
    bool taken;     // whether the branch was taken
    IntPtr target;  // branch target address
    PHT(bool bt, IntPtr it) : taken(bt), target(it) {}
}; // Pattern history entry; the history is used as a circular buffer to avoid memory copies

// State saved by predict() for training
SInt32 history_sum;
UInt32 history_bias_index;
std::vector<UInt32> history_indexes;
std::vector<PHT> history_path;
UInt32 history_path_index;

std::vector<PHT> path;                     // circular path history (64 entries)
UInt32 path_index;
UInt32 path_length;
UInt32 num_entries;
UInt32 num_bias_entries;                   // 1024
UInt32 weight_bits;
std::vector<std::vector<SInt32>> weights;
std::vector<SInt32> perceptron;            // bias weight table, 1024 entries
UInt32 theta;                              // training threshold
SInt64 count;                              // counter
UInt32 block_size;                         // history entries hashed into one weight-table row
// `coefficients` and `bias_coefficient` are used below; their declarations are not shown.

// Initialize the branch history and the perceptron tables to 0
PerceptronBranchPredictor::PerceptronBranchPredictor(String name, core_id_t core_id)
    : BranchPredictor(name, core_id), history_sum(0), history_bias_index(0),
      history_indexes(64 / 8, 0), history_path(64, PHT(false, 0)), history_path_index(0),
      path(64, PHT(false, 0)), path_index(0), path_length(64), num_entries(256),
      num_bias_entries(1024), weight_bits(7), weights(256, std::vector<SInt32>(64, 0)),
      perceptron(1024, 0),
      theta(296.64),  // theta is UInt32, so this truncates to 296
      count(0), block_size(8), coefficients(64, 0)
{
    /* body not shown */
}
```

The prediction method

The base neural predictor computes $y = w_0 + \sum_{i=1}^{n} x_i w_i$. Each $x_i \in \{-1, 1\}$ is a history bit (1 for taken), $w_i$ is its weight, and $w_0$ is the bias weight. The branch is predicted taken when $y \ge 0$.

The weights are trained when the prediction is wrong or when $|y| \le \theta$. The paper sets the threshold to

$$\theta = \lfloor 1.93h + 14 \rfloor,$$

where $h$ is the history length.
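The sketch below makes the equation and the training rule concrete for the basic, non-hashed perceptron predictor. It rests on a few assumptions: perceptrons are indexed by `pc % entries`, there is one global history of ±1 outcomes, and weights are plain `int`s with no saturation. The class name `SimplePerceptron` is hypothetical, and this is not the lab's code.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical, non-hashed perceptron predictor (not the lab code).
// Weights are plain ints without saturation; inputs x_i are +1/-1.
class SimplePerceptron {
public:
    SimplePerceptron(std::size_t entries, std::size_t hist_len)
        : weights_(entries, std::vector<int>(hist_len + 1, 0)),
          history_(hist_len, -1),  // start with an all-not-taken history
          theta_(static_cast<int>(std::floor(1.93 * hist_len + 14))) {}

    // y = w_0 + sum_i x_i * w_i for the perceptron selected by pc.
    int output(std::uint64_t pc) const {
        const std::vector<int>& w = row(pc);
        int y = w[0];  // bias weight; its input is always 1
        for (std::size_t i = 0; i < history_.size(); ++i) y += w[i + 1] * history_[i];
        return y;
    }

    bool predict(std::uint64_t pc) const { return output(pc) >= 0; }

    // Train on a wrong prediction or when |y| <= theta, then shift in the outcome.
    void update(std::uint64_t pc, bool taken) {
        const int y = output(pc);
        const int t = taken ? 1 : -1;
        if ((y >= 0) != taken || std::abs(y) <= theta_) {
            std::vector<int>& w = row(pc);
            w[0] += t;
            for (std::size_t i = 0; i < history_.size(); ++i) w[i + 1] += t * history_[i];
        }
        // Newest outcome goes to index 0; the oldest falls off the end.
        for (std::size_t i = history_.size(); i-- > 1;) history_[i] = history_[i - 1];
        if (!history_.empty()) history_[0] = t;
    }

    int theta() const { return theta_; }

private:
    std::vector<int>& row(std::uint64_t pc) { return weights_[pc % weights_.size()]; }
    const std::vector<int>& row(std::uint64_t pc) const { return weights_[pc % weights_.size()]; }

    std::vector<std::vector<int>> weights_;
    std::vector<int> history_;
    int theta_;
};
```

With a 64-entry history, `theta()` returns $\lfloor 1.93 \cdot 64 + 14 \rfloor = 137$.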

Hashed Perceptron

- Multiple sets of branches are assigned to the same weights.

- More than one set of information can be used to hash into the weight table.

- Different hashing functions can be used, such as XOR, concatenation, etc.

- Different indices can be assigned to different weights.
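Two hypothetical index functions illustrate the hashing options listed above. The first is XOR folding, which is what the `predict` method later in this post does with `ip >> 4` and the path targets. The second concatenates address bits with taken/not-taken bits. The function names and the table size are illustrative assumptions.

```cpp
#include <cstdint>
#include <vector>

// XOR folding: each (shifted) target in the block is XORed into the (shifted) address.
std::uint32_t xor_index(std::uint64_t ip, const std::vector<std::uint64_t>& path_targets,
                        std::uint32_t num_entries) {
    std::uint64_t z = ip >> 4;
    for (std::uint64_t t : path_targets) z ^= (t >> 4);
    return static_cast<std::uint32_t>(z % num_entries);
}

// Concatenation: outcome bits are appended below the (shifted) address bits.
std::uint32_t concat_index(std::uint64_t ip, const std::vector<bool>& outcomes,
                           std::uint32_t num_entries) {
    std::uint64_t z = ip >> 4;
    for (bool taken : outcomes) z = (z << 1) | (taken ? 1u : 0u);
    return static_cast<std::uint32_t>(z % num_entries);
}
```

Plain XOR folding has one caveat: two identical targets in the same block cancel each other out.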

Algorithm

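The input is the branch address `ip`. The bias index is computed first as `(ip >> 4) % num_bias_entries`. The selected bias weight `perceptron[bias_index]`, scaled by `bias_coefficient`, starts the sum.

Next, the 64-entry path history is split into blocks of `block_size` (8) entries. For block k, `ip >> 4` is XORed with the shifted target addresses of the 8 branches in that block, which gives a row index `index_k` into the weight table. The block then adds weights[index_k][8k + j] · coefficients[8k + j] · h for j = 0 … 7, where h is +1 if that history branch was taken and -1 otherwise.

If the final sum is non-negative, the branch is predicted taken; otherwise it is predicted not taken.

Finally, the state needed for training is saved, and the prediction is inserted into the circular path history.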

```cpp
bool PerceptronBranchPredictor::predict(IntPtr ip, IntPtr target)
{
    double sum = 0;

    // Bias weight, indexed by the hashed branch address
    UInt32 bias_index = (ip >> 4) % num_bias_entries;
    sum += bias_coefficient * perceptron[bias_index];
    history_bias_index = bias_index;

    // Accumulate the hashed weights, one table row per block of block_size history entries
    for (UInt32 i = 0; i < path_length; i += block_size)
    {
        // XOR-fold the branch address with the targets in this block to pick a row.
        // The unsigned index arithmetic wraps correctly because path_length (64) divides 2^32.
        IntPtr z = ip >> 4;
        for (UInt32 j = 0; j < block_size; j++)
        {
            z ^= (path[(path_index - i - j + path_length - 1) % path_length].target >> 4);
        }
        UInt32 index = z % num_entries;
        history_indexes[i / block_size] = index;

        for (UInt32 j = 0; j < block_size; j++)
        {
            SInt32 h = path[(path_index - i - j + path_length - 1) % path_length].taken ? 1 : -1;
            sum += h * weights[index][i + j] * coefficients[i + j];
        }
    }

    bool result = ((SInt32)sum) >= 0;

    // Save state for training, then push this prediction into the circular path history
    history_sum = (SInt32)sum;
    history_path.assign(path.begin(), path.end());
    history_path_index = path_index;
    path[path_index] = PHT(result, target);
    path_index = (path_index + 1) % path_length;

    return result;
}
```

The train process

Algorithm

Input parameters: `predicted`, `actual`, `ip`.

Auxiliary parameters saved by `predict`: `history_sum` (the predicted value), `history_bias_index`, `history_indexes` (the weight-table row of each block), `history_path` (the path history), and `history_path_index` (the history position).

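When training is triggered, each weight moves toward agreement with the actual outcome $t$:

```
for each bit in parallel
    if t = x_i then
        w_i = w_i + 1
    else
        w_i = w_i - 1
    end if
```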

Implementation

```cpp
for (UInt32 i = 0; i < path_length; i += block_size)
{
    UInt32 index = history_indexes[i / block_size];  // row chosen at prediction time
    for (UInt32 j = 0; j < block_size; j++)
    {
        bool taken = history_path[(history_path_index - i - j + path_length - 1) % path_length].taken;
        if (taken == actual)
        {
            // Agreement: strengthen the weight, saturating at the top of its range
            if (weights[index][i + j] < (1 << weight_bits) - 1)
                weights[index][i + j]++;
        }
        else
        {
            // Disagreement: weaken the weight, saturating at the bottom of its range
            if (weights[index][i + j] > -(1 << weight_bits))
                weights[index][i + j]--;
        }
    }
}
```

The result

FFT with Blocking Transpose

1024 Complex Doubles

1 Processors

65536 Cache lines

16 Byte line size

4096 Bytes per page

| | pentium_m | perceptron |
|---|---|---|
| num correct | 63112 | 65612 |
| num incorrect | 4342 | 2112 |
| IPC | 1.36 | 1.37 |
| MPKI | 2.71 | 1.39 |
| misprediction rate | 6.436% | 3.118% |
| elapsed time | 4.46 s | 5.23 s |
| cycles | 1.2M | 1.2M |
| instructions | 1.6M | 1.6M |


[Computer Architecture] Sniper lab3

The code can be viewed at http://victoryang00.xyz:5012/victoryang/sniper_test/tree/lab3

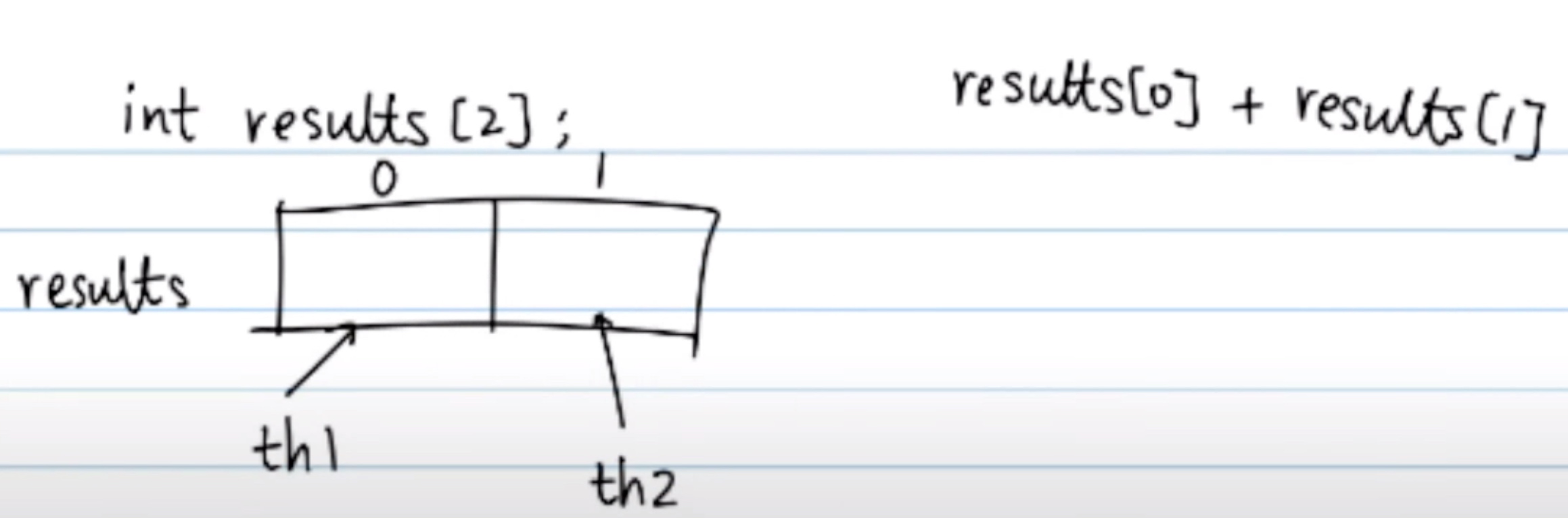

false sharing

For the false-sharing test cases, the provided lab3 cfg file sets the cache line size to 64 B. The falsely shared variables therefore just need to sit within one 64 B line.

In `false_sharing_bad.c`, two threads accumulate into the global array `results`. Thread 1 sums the first part of `arr` and thread 2 sums the second part, and each writes to its own element `results[id]`.

```c
void* myfunc(void *args){
    int i;
    MY_ARGS* my_args=(MY_ARGS*)args;
    int first = my_args->first;
    int last = my_args->last;
    int id = my_args->id;
    // int s=0;
    for (i=first;i<last;i++){
        results[id]=results[id]+arr[i];  // every iteration writes the shared results array
    }
    return NULL;
}
```

The result of running it in Sniper:

[SNIPER] Warning: Unable to use physical addresses for shared memory simulation.

[SNIPER] Start

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Sniper using SIFT/trace-driven frontend

[SNIPER] Running full application in DETAILED mode

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Enabling performance models

[SNIPER] Setting instrumentation mode to DETAILED

[RECORD-TRACE] Using the Pin frontend (sift/recorder)

[TRACE:1] -- DONE --

[TRACE:2] -- DONE --

s1 = 5003015

s2= 5005373

s1+s2= 10008388

[TRACE:0] -- DONE --

[SNIPER] Disabling performance models

[SNIPER] Leaving ROI after 627.88 seconds

[SNIPER] Simulated 445.0M instructions, 192.9M cycles, 2.31 IPC

[SNIPER] Simulation speed 708.8 KIPS (177.2 KIPS / target core - 5643.3ns/instr)

[SNIPER] Setting instrumentation mode to FAST_FORWARD

[SNIPER] End

[SNIPER] Elapsed time: 627.79 seconds

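In `false_sharing.c`, the two threads instead accumulate into a local variable `s` (thread 1 over the first part of `arr`, thread 2 over the second). Each thread writes its total to `my_args->result` only once, at the end.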

```c
void* myfunc(void *args){
    int i;
    MY_ARGS* my_args=(MY_ARGS*)args;
    int first = my_args->first;
    int last = my_args->last;
    // int id = my_args->id;
    int s=0;  // thread-local accumulator, so the loop writes no shared data
    for (i=first;i<last;i++){
        s=s+arr[i];
    }
    my_args->result=s;  // single write once the loop is done
    return NULL;
}
```

The result of running it in Sniper:

[SNIPER] Warning: Unable to use physical addresses for shared memory simulation.

[SNIPER] Start

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Sniper using SIFT/trace-driven frontend

[SNIPER] Running full application in DETAILED mode

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Enabling performance models

[SNIPER] Setting instrumentation mode to DETAILED

[RECORD-TRACE] Using the Pin frontend (sift/recorder)

[TRACE:2] -- DONE --

[TRACE:1] -- DONE --

s1 = 5003015

s2= 5003015

s1+s2= 10006030

[TRACE:0] -- DONE --

[SNIPER] Disabling performance models

[SNIPER] Leaving ROI after 533.95 seconds

[SNIPER] Simulated 415.1M instructions, 182.1M cycles, 2.28 IPC

[SNIPER] Simulation speed 777.3 KIPS (194.3 KIPS / target core - 5145.9ns/instr)

[SNIPER] Setting instrumentation mode to FAST_FORWARD

[SNIPER] End

[SNIPER] Elapsed time: 533.99 seconds

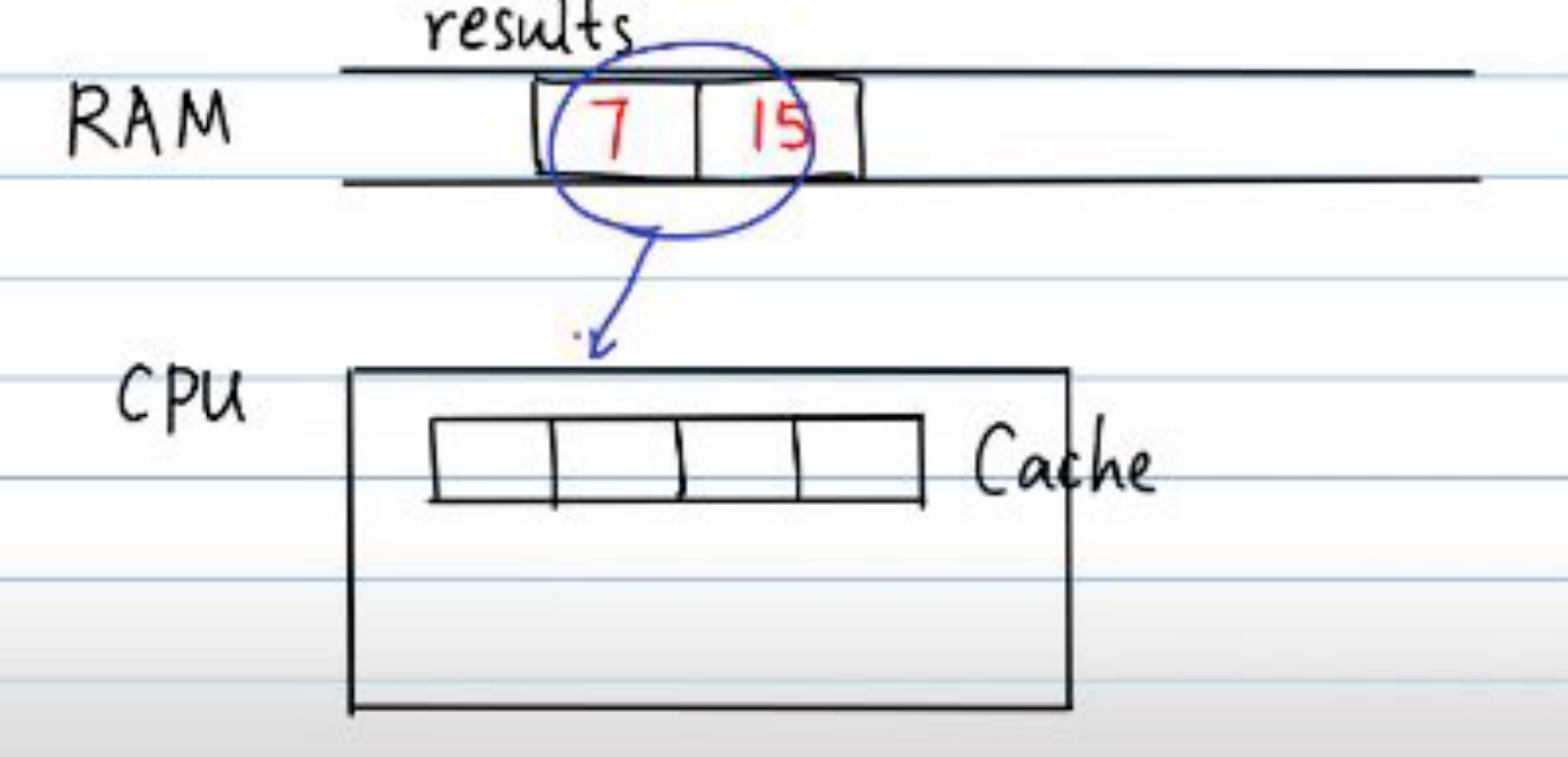

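The cause of false sharing:

The two threads' elements of `results` are adjacent in memory, so they sit in the same 64 B cache line. Each time one thread writes its element, the coherence protocol invalidates that line in the other core's cache. The other thread's next access then misses and has to fetch the line again. The line bounces between the two cores even though the threads never touch the same element, and this extra latency is false sharing.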
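The sketch below computes which cache line an address falls into. It assumes the 64 B line size from the lab3 cfg. A 64 B-aligned global array stands in for the global `results` array (the alignment here is an assumption), and its two adjacent `int` elements land in the same line.

```cpp
#include <cstddef>
#include <cstdint>

// Line size assumed from the lab3 cfg.
constexpr std::size_t kLineSize = 64;

// Returns the number of the cache line that contains address p.
std::uintptr_t line_of(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) / kLineSize;
}

// Stand-in for the global results array of false_sharing_bad.c.
alignas(64) int results[2];
```

Because `line_of(&results[0]) == line_of(&results[1])`, a write by either thread hits the line the other thread is using.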

The fix for false sharing:

Place the data of different threads more than one cache line (64 B) apart. Here, `FALSE_ARR` is set to 200000.
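A common alternative to spacing the data with a large `FALSE_ARR` offset is to pad each per-thread slot to a full cache line with `alignas(64)`. The sketch below swaps in that technique. The names `PaddedCounter` and `parallel_sum` are hypothetical, and the 64 B line size is taken from the lab3 cfg.

```cpp
#include <cstddef>
#include <thread>
#include <vector>

// One counter per thread, padded to a full 64-byte line so that
// the two threads never write to the same cache line.
struct alignas(64) PaddedCounter {
    long value = 0;
};

// Sums arr in two halves on two threads; each thread writes only to its own slot.
long parallel_sum(const std::vector<int>& arr) {
    PaddedCounter results[2];
    const std::size_t mid = arr.size() / 2;
    auto worker = [&](int id, std::size_t first, std::size_t last) {
        for (std::size_t i = first; i < last; ++i) results[id].value += arr[i];
    };
    std::thread t1(worker, 0, std::size_t{0}, mid);
    std::thread t2(worker, 1, mid, arr.size());
    t1.join();
    t2.join();
    return results[0].value + results[1].value;
}
```

`sizeof(PaddedCounter)` is 64, so `results[0]` and `results[1]` end up on different lines. This costs some memory but keeps the hot loop writing to memory directly.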

The result changed to:

[SNIPER] Warning: Unable to use physical addresses for shared memory simulation.

[SNIPER] Start

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Sniper using SIFT/trace-driven frontend

[SNIPER] Running full application in DETAILED mode

[SNIPER] --------------------------------------------------------------------------------

[SNIPER] Enabling performance models

[SNIPER] Setting instrumentation mode to DETAILED

[RECORD-TRACE] Using the Pin frontend (sift/recorder)

[TRACE:1] -- DONE --

[TRACE:2] -- DONE --

s1 = 5003015

s2= 5005373

s1+s2= 10008388

[TRACE:0] -- DONE --

[SNIPER] Disabling performance models

[SNIPER] Leaving ROI after 512.28 seconds

[SNIPER] Simulated 445.1M instructions, 158.1M cycles, 2.82 IPC

[SNIPER] Simulation speed 868.8 KIPS (217.2 KIPS / target core - 4604.2ns/instr)

[SNIPER] Setting instrumentation mode to FAST_FORWARD

[SNIPER] End

[SNIPER] Elapsed time: 512.22 seconds

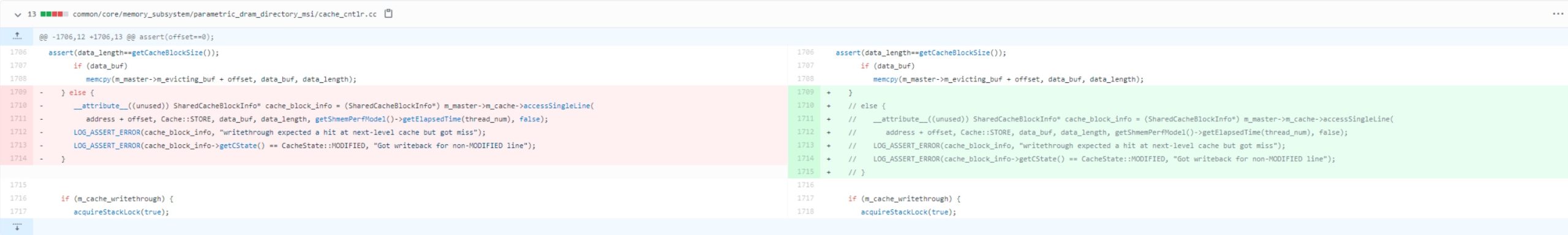

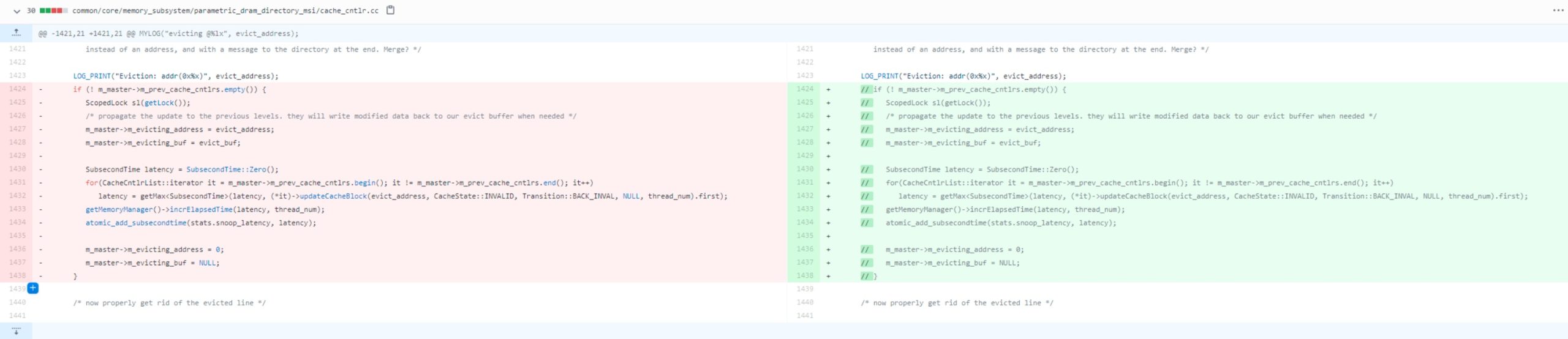

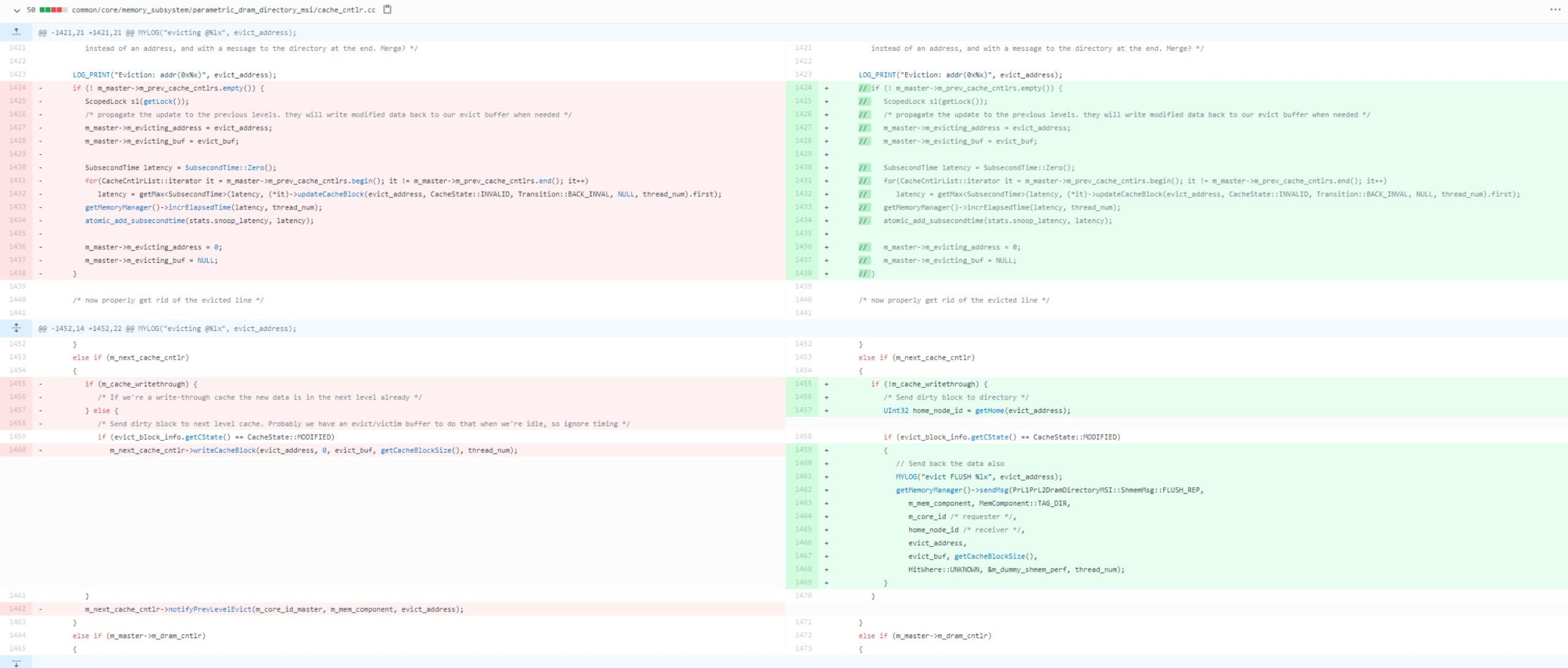

multi-level cache

The non-inclusive cache removes the back-invalidation, and one bug has to be fixed on top of that.

Then I found https://groups.google.com/g/snipersim/c/_NJu8DXCVVs/m/uL3Vo24OAAAJ, which explains that a non-inclusive cache is meant to write evicted lines directly back to memory. The bug to fix is in the L1 eviction path. An L1 victim is normally written back to L2, but L2 may not hold the line. In that case, L1 has to write the line back to memory.
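A toy model may make this eviction path clearer. It is not Sniper's code: `evict_from_l1` and `WbTarget` are hypothetical, and L2 is modelled as a plain set of line addresses. A dirty L1 victim goes to L2 if L2 still holds the line. Otherwise it goes straight to memory, which can happen in a non-inclusive hierarchy.

```cpp
#include <cstdint>
#include <unordered_set>

enum class WbTarget { None, L2, Memory };

// Where a line evicted from L1 is written back (toy model).
// With an inclusive L2 the line is always present, so it goes to L2.
// With a non-inclusive L2 the line may be missing, so it goes to memory.
WbTarget evict_from_l1(std::uint64_t line, bool dirty,
                       const std::unordered_set<std::uint64_t>& l2_lines) {
    if (!dirty) return WbTarget::None;               // clean lines are simply dropped
    if (l2_lines.count(line)) return WbTarget::L2;   // L2 still holds the line
    return WbTarget::Memory;                         // not in L2: write back to DRAM
}
```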

I added a new option to the cfg that makes L1 non-inclusive (or not), and I selected the coherence protocol in the cfg:

```
[caching_protocol]
type = parametric_dram_directory_msi
variant = mesif # msi, mesi or mesif
```

I did not collect fine-grained results. Instead, with the lock_add, lock_fill_bucket, reader_writer, and bfs benchmarks, I measured the number of write-backs and ran some write-back tests for inclusive and non-inclusive caches.

Test

Lock Add: In this test, all the threads try to add ‘1’ to a global counter using locks. We see a lower number of memory write-backs in MOSI because of the presence of the Owned state.

Lock Fill Bucket: This test makes buckets for numbers, so that it can count how many times each number is present in the array. This is done using locks. The Dragon protocol performs much worse here than the others. This is probably because updates to the buckets are not always needed by the other processors, so updating on writes does not help.
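For reference, the two lock-based workloads can be sketched with `std::mutex` and `std::thread`. These are hypothetical re-creations based only on the descriptions above, not the benchmark sources. Bucket values are assumed to be non-negative.

```cpp
#include <algorithm>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// lock_add: every thread increments one shared counter under a single lock.
long lock_add(int num_threads, int iters) {
    long counter = 0;
    std::mutex m;
    std::vector<std::thread> threads;
    for (int t = 0; t < num_threads; ++t)
        threads.emplace_back([&] {
            for (int i = 0; i < iters; ++i) {
                std::lock_guard<std::mutex> g(m);
                ++counter;
            }
        });
    for (auto& th : threads) th.join();
    return counter;
}

// lock_fill_bucket: threads count occurrences of each value, one lock per bucket.
std::vector<int> lock_fill_bucket(const std::vector<int>& arr, int num_buckets, int num_threads) {
    std::vector<int> buckets(num_buckets, 0);
    std::vector<std::mutex> locks(num_buckets);
    std::vector<std::thread> threads;
    const std::size_t chunk = (arr.size() + num_threads - 1) / num_threads;
    for (int t = 0; t < num_threads; ++t)
        threads.emplace_back([&, t] {
            const std::size_t first = t * chunk;
            const std::size_t last = std::min(arr.size(), first + chunk);
            for (std::size_t i = first; i < last; ++i) {
                const int b = arr[i] % num_buckets;
                std::lock_guard<std::mutex> g(locks[b]);
                ++buckets[b];
            }
        });
    for (auto& th : threads) th.join();
    return buckets;
}
```

Every lock acquisition and counter update moves a cache line between cores. That traffic is what the coherence protocol shapes in these tests.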

Result

Protocol

| Protocol\IPC | lock_add | lock_fill_bucket | reader_writer | bfs |
|---|---|---|---|---|
| MSI | 1.31 | 1.32 | 1.27 | 1.39 |
| MESI | 1.35 | 1.36 | 1.29 | 1.39 |
| MESIF | 1.35 | 1.36 | 1.30 | 1.39 |

The MESIF protocol improves performance for these multicore workloads: its IPC matches or exceeds that of MSI and MESI on every benchmark, and only bfs is unchanged across protocols. How much it helps may depend on the microarchitecture.

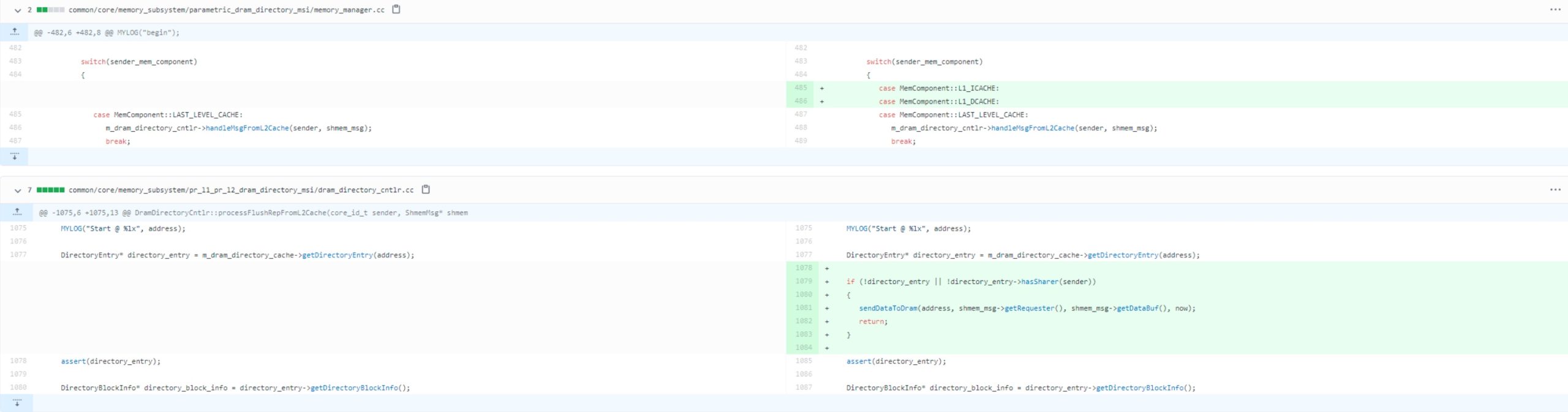

CPI stack

The CPI stack breaks down the cycles lost to memory, branches, and synchronization. All of the protocols produce a graph similar to the one above, and MESIF shows the lowest time in memory and sync.

| Protocol\L2 miss rate | lock_add | lock_fill_bucket | reader_writer | bfs |
|---|---|---|---|---|
| MSI | 49.18 | 20.13 | 27.12 | 42.24 |
| MSI (with non-inclusive cache) | 47.98 | 20.01 | 29.12 | 42.13 |
| MESI | 46.21 | 21.13 | 31.29 | 41.31 |
| MESI (with non-inclusive cache) | 45.13 | 21.41 | 26.41 | 42.15 |
| MESIF | 45.71 | 20.12 | 25.14 | 41.39 |
| MESIF (with non-inclusive cache) | 46.35 | 23.14 | 24.14 | 41.13 |

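The non-inclusive cache lowers the L2 miss rate in 7 of the 12 protocol/benchmark combinations, so its advantage is modest. MESIF has a lower L2 miss rate than MSI on all four benchmarks, while MESI's results against MSI are mixed.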

Summary

Interesting Conclusions

Adding ‘E’ state:

To measure the performance differences caused by adding the Exclusive state, we can compare the metrics of MSI against MESI and MESIF. The main benefit of the Exclusive state is that it reduces the number of snooping bus transactions required. Consider a parallel program where each thread works on its own chunk of an array and updates only that chunk, or a sequential program with a single thread. In both cases, the program often reads a cacheline and then updates it. In MSI, this means first loading the cacheline with a BusRd and moving to the S state, then performing a BusRdX and moving to the M state, which takes two snooping bus transactions. In MESI, it takes a single transaction. The cache performs a BusRd and moves to the E state. Since no other cache has the cacheline, there is no need for a BusRdX to move to the M state, and the cache can silently change the state of the cacheline to Modified.

This gives a significant boost in programs which access and modify unique memory addresses.
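The transaction count above can be checked with a small state machine for one core accessing a private line that no other cache holds. This is a simplified model under that assumption, not a full MSI/MESI implementation, and `bus_transactions` is a hypothetical name.

```cpp
#include <string>

enum class State { I, S, E, M };

// Counts bus transactions for one core accessing one private line
// ('R' = read, 'W' = write). With mesi = false, the E state does not exist.
int bus_transactions(const std::string& ops, bool mesi) {
    State s = State::I;
    int bus = 0;
    for (char op : ops) {
        if (op == 'R') {
            if (s == State::I) {
                ++bus;                            // BusRd on a read miss
                s = mesi ? State::E : State::S;   // no sharers: E in MESI, S in MSI
            }
        } else if (op == 'W') {
            if (s == State::I || s == State::S)
                ++bus;                            // BusRdX (or upgrade) for ownership
            s = State::M;                         // from E or M the write is silent
        }
    }
    return bus;
}
```

`bus_transactions("RW", false)` returns 2 (BusRd, then BusRdX), while `bus_transactions("RW", true)` returns 1. This matches the two-versus-one argument above.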

Write-Invalidation vs Write-Update

Since we have implemented both write-invalidation and write-update protocols, our simulator can also tell whether write-invalidation or write-update performs better for a given program or memory trace.

In a write-invalidation protocol, when a processor writes to a memory location, the other processors' caches must invalidate that cacheline. In a write-update protocol, the updated cacheline is instead sent to the other caches. Write-update therefore performs well when the other processors will read those values later. When they will not need the values, the updates are useless and only cause extra bus transactions. Which protocol performs better depends entirely on the program's sharing pattern.
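The trade-off can be illustrated by counting bus transactions on one line shared by two cores under each policy. The model is heavily simplified: write-invalidate follows the spirit of MSI, write-update follows the spirit of Dragon, and write-backs are not counted. `count_bus` is a hypothetical name.

```cpp
#include <array>
#include <utility>
#include <vector>

// Each access is {core (0 or 1), 'R' or 'W'} on a single shared line.
int count_bus(const std::vector<std::pair<int, char>>& trace, bool write_update) {
    std::array<bool, 2> valid{false, false};
    std::array<bool, 2> modified{false, false};  // write-invalidate only
    int bus = 0;
    for (const auto& [core, op] : trace) {
        const int other = 1 - core;
        if (op == 'R') {
            if (!valid[core]) {
                ++bus;                    // BusRd on a read miss
                valid[core] = true;
                modified[other] = false;  // a modified copy becomes shared
            }
        } else if (write_update) {
            if (!valid[core]) { ++bus; valid[core] = true; }  // fetch on a write miss
            if (valid[other]) ++bus;      // BusUpd pushes the new value to the other copy
        } else if (!modified[core]) {
            ++bus;                        // BusRdX / upgrade
            valid[other] = false;         // the other copy is invalidated
            modified[other] = false;
            valid[core] = true;
            modified[core] = true;        // later writes by this core are silent
        }
    }
    return bus;
}
```

Take a producer/consumer pattern where core 0 writes and core 1 reads, repeated three times. The sketch counts 6 transactions with write-invalidate and 4 with write-update. If core 1 reads once and core 0 then writes four times, it counts 2 and 6 respectively.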

In our tests, we saw fewer bus transactions, which would explain why updating rather than invalidating reduced bus traffic.

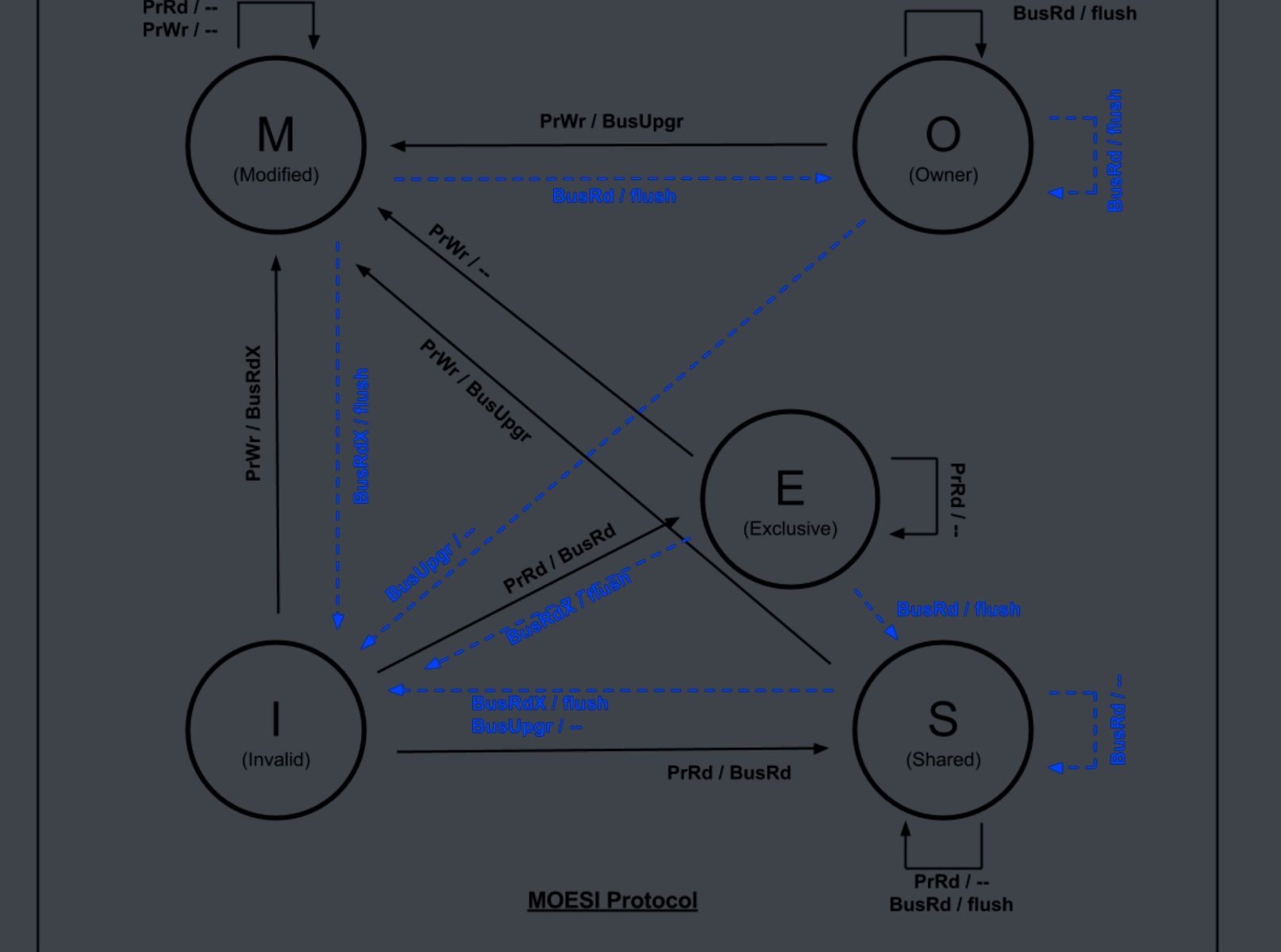

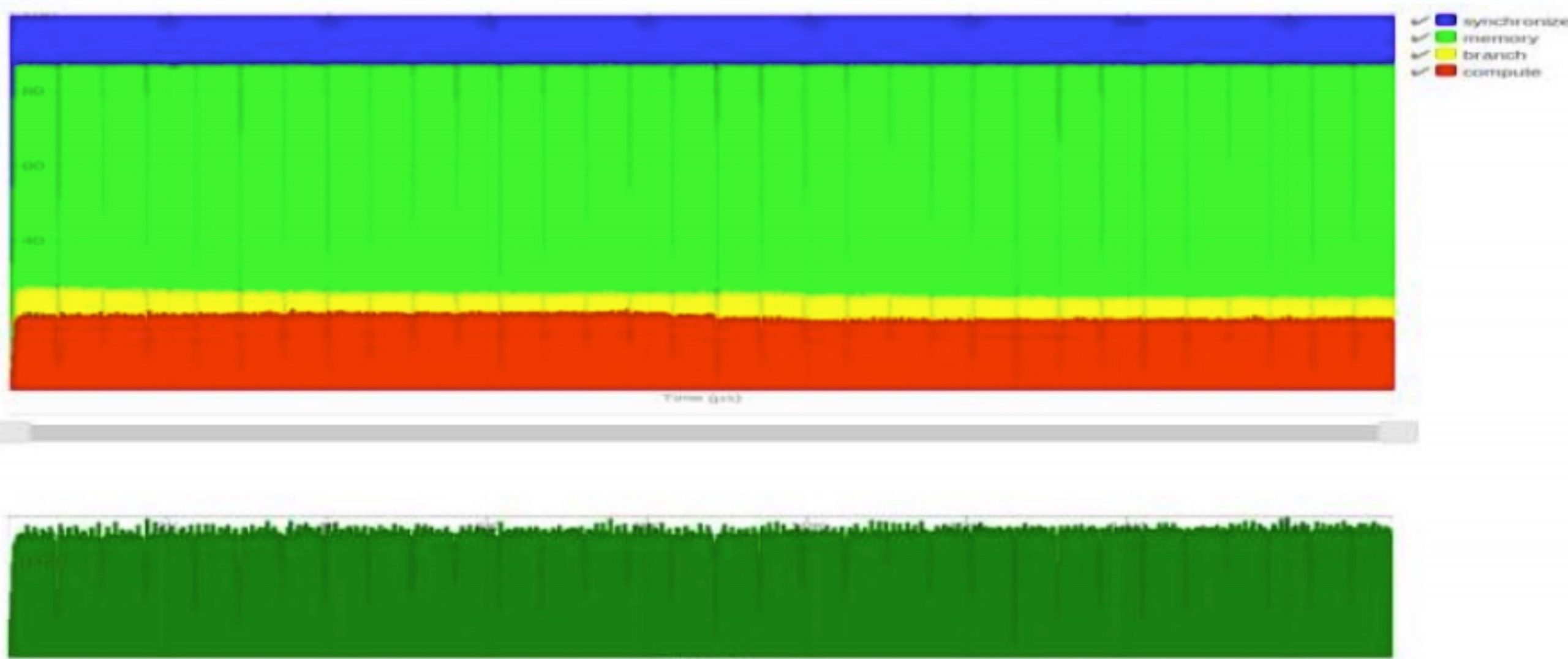

MOESI (Unchecked)

I refer to the graph of

The code is in the `dir_moesi` directory, with one state added.

I have only made it runnable; it has not been tested yet.


ASPLOS 22 Attendance

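This time I got charged a hefty $60. I had classes in the morning and spent my evenings on ISC/ASC and with my partner, so I had little time to read the papers. I just listened to the talks live, and only to the ones I was interested in. I also chatted with my future boss about the talks where he asked questions.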

VEE Workshop 2022

Haibo

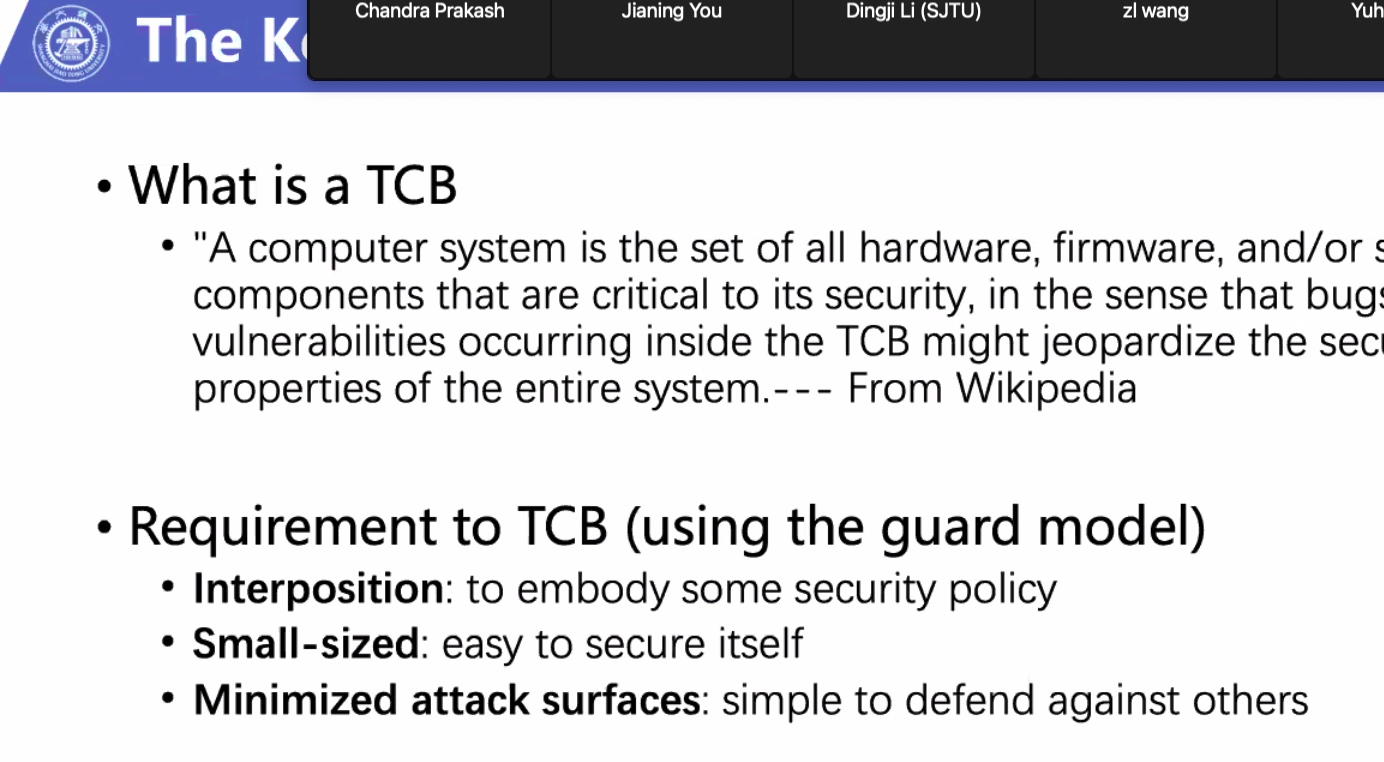

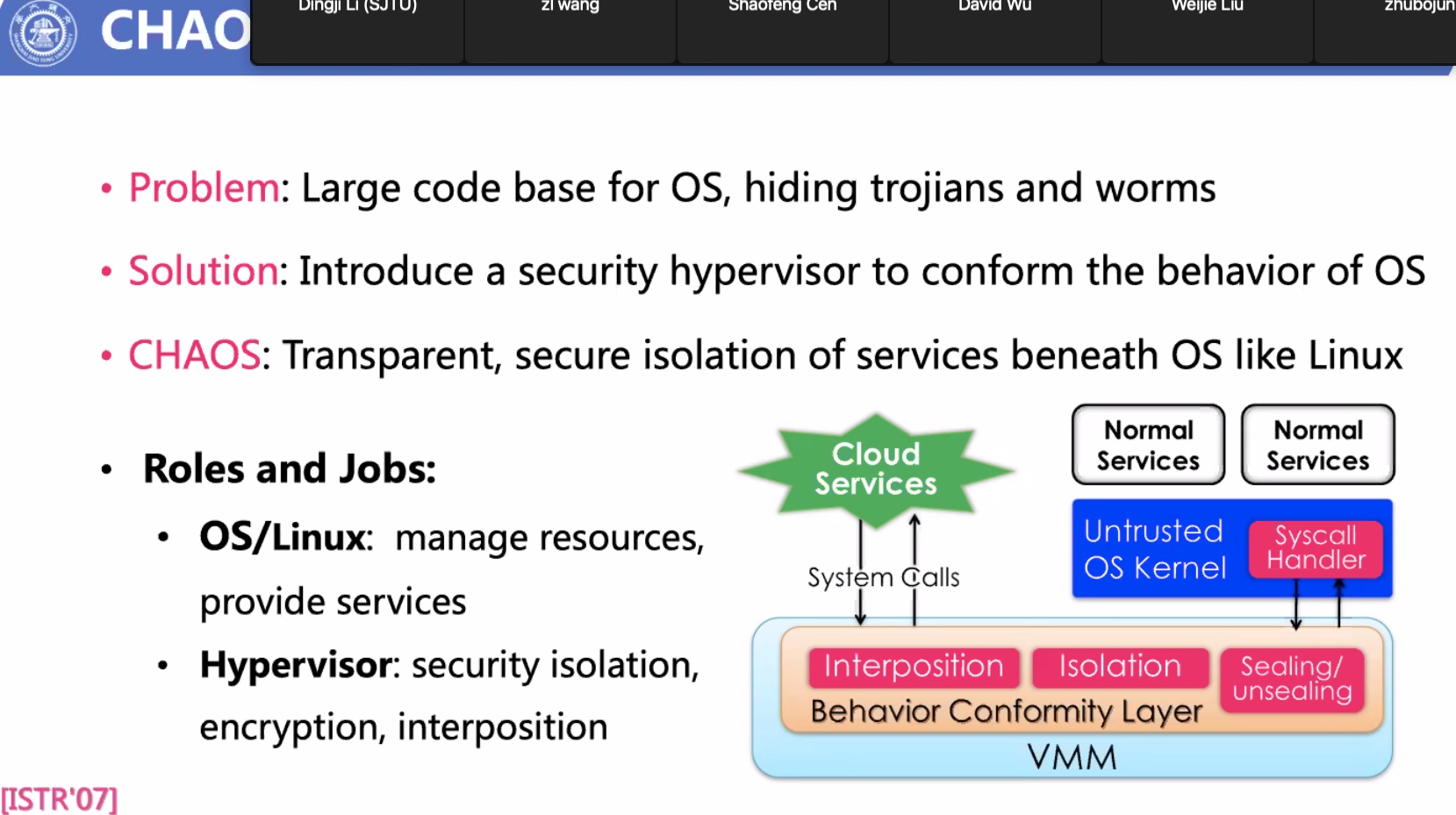

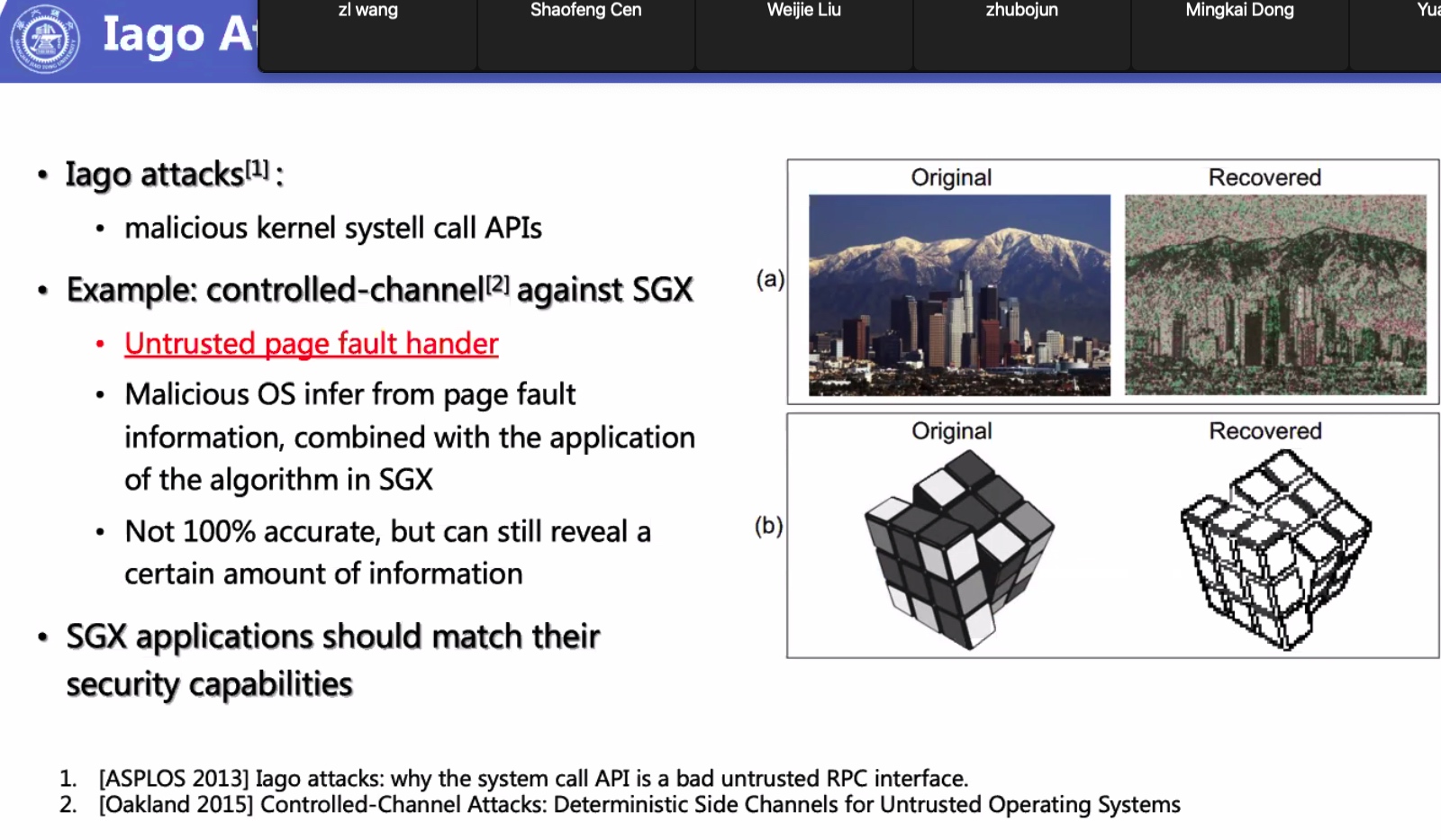

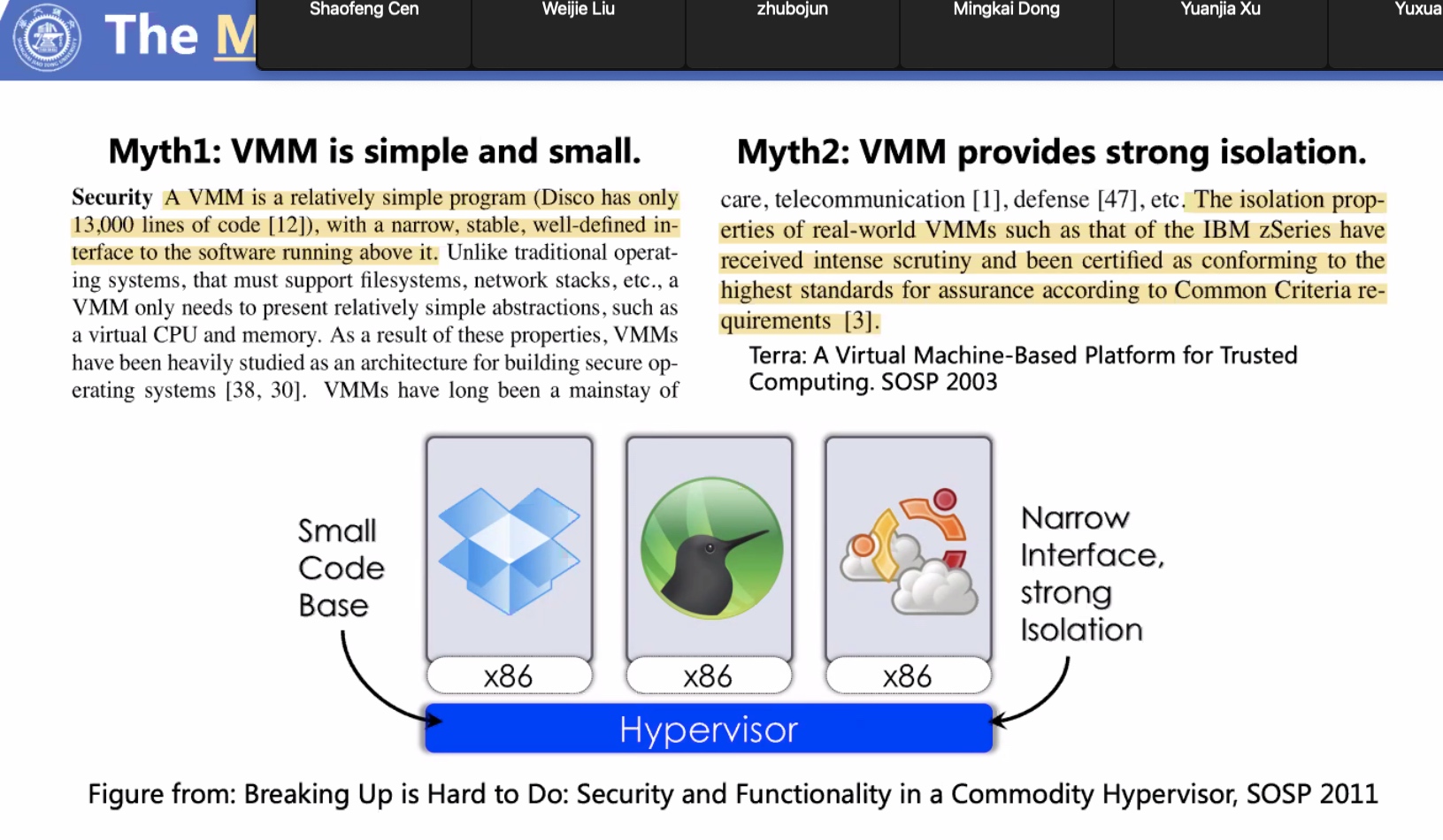

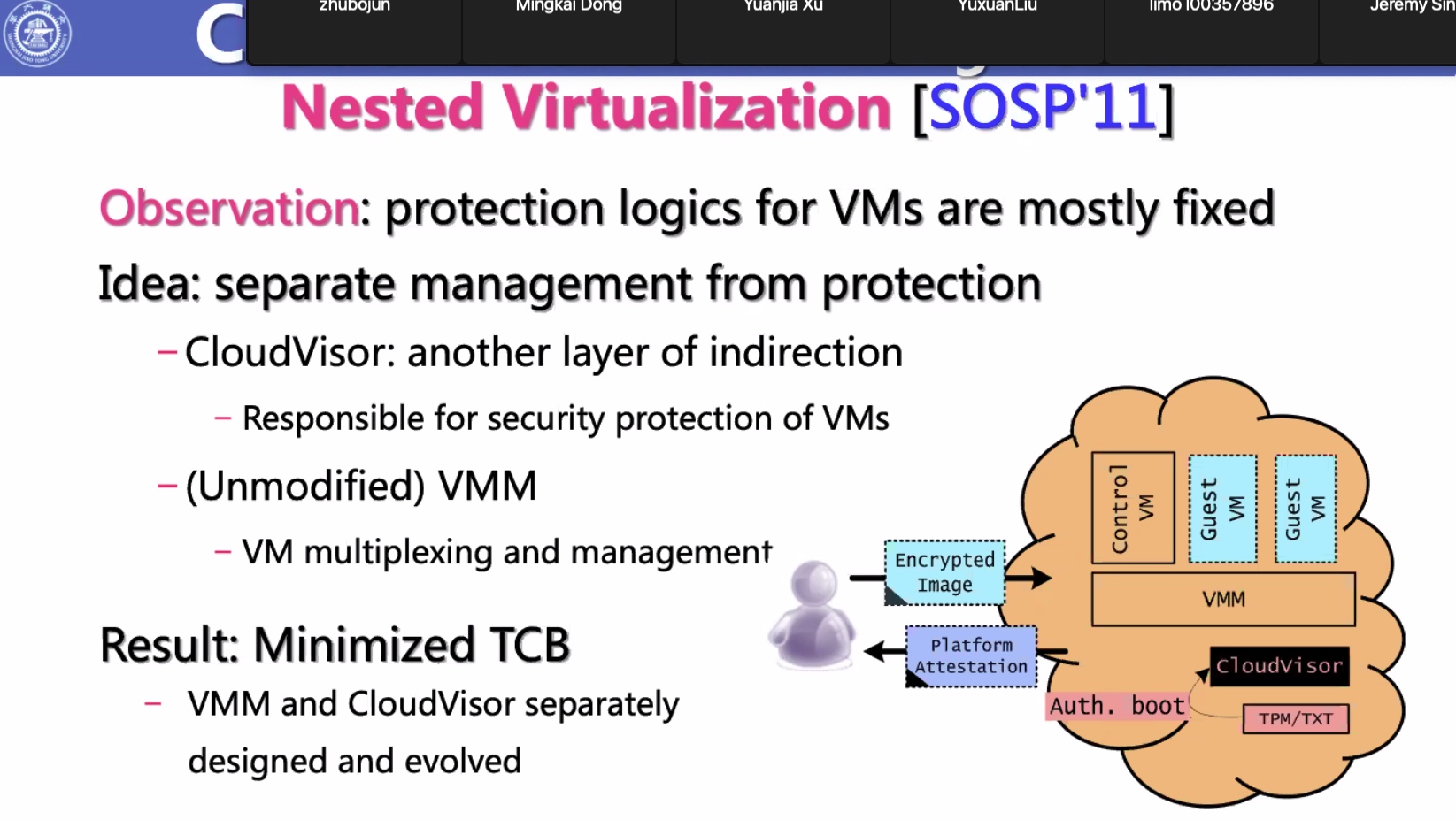

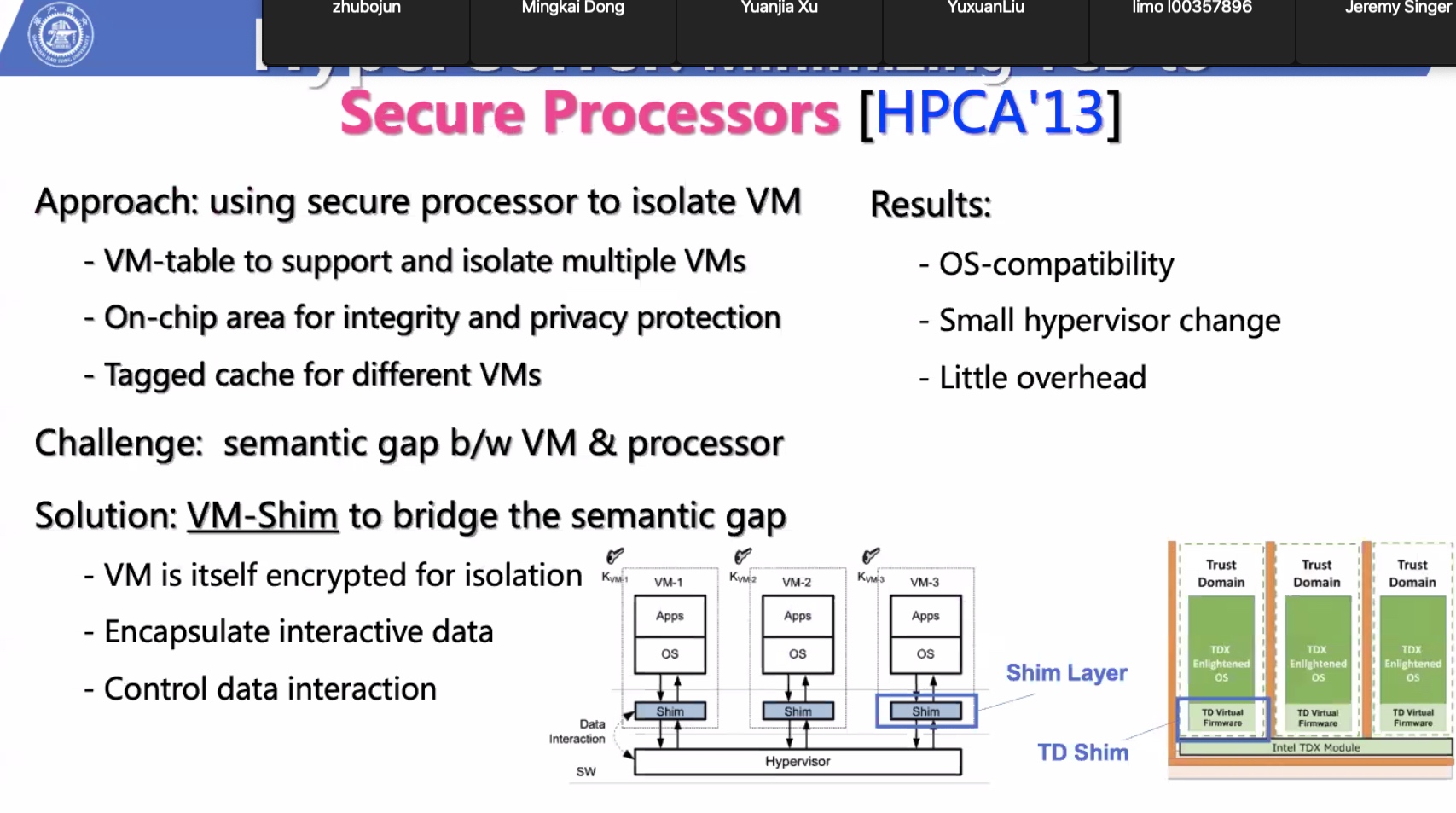

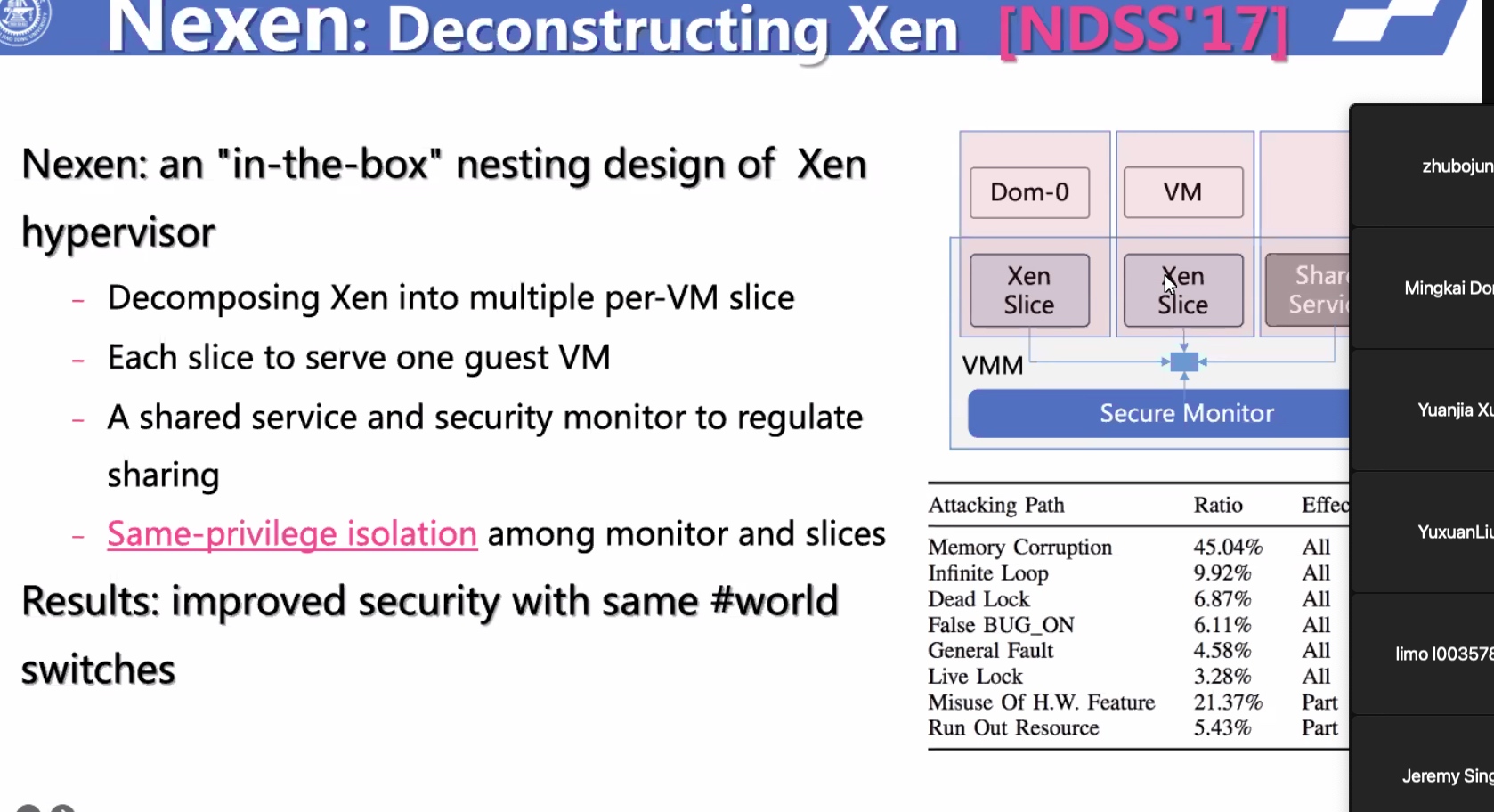

History of virtualization

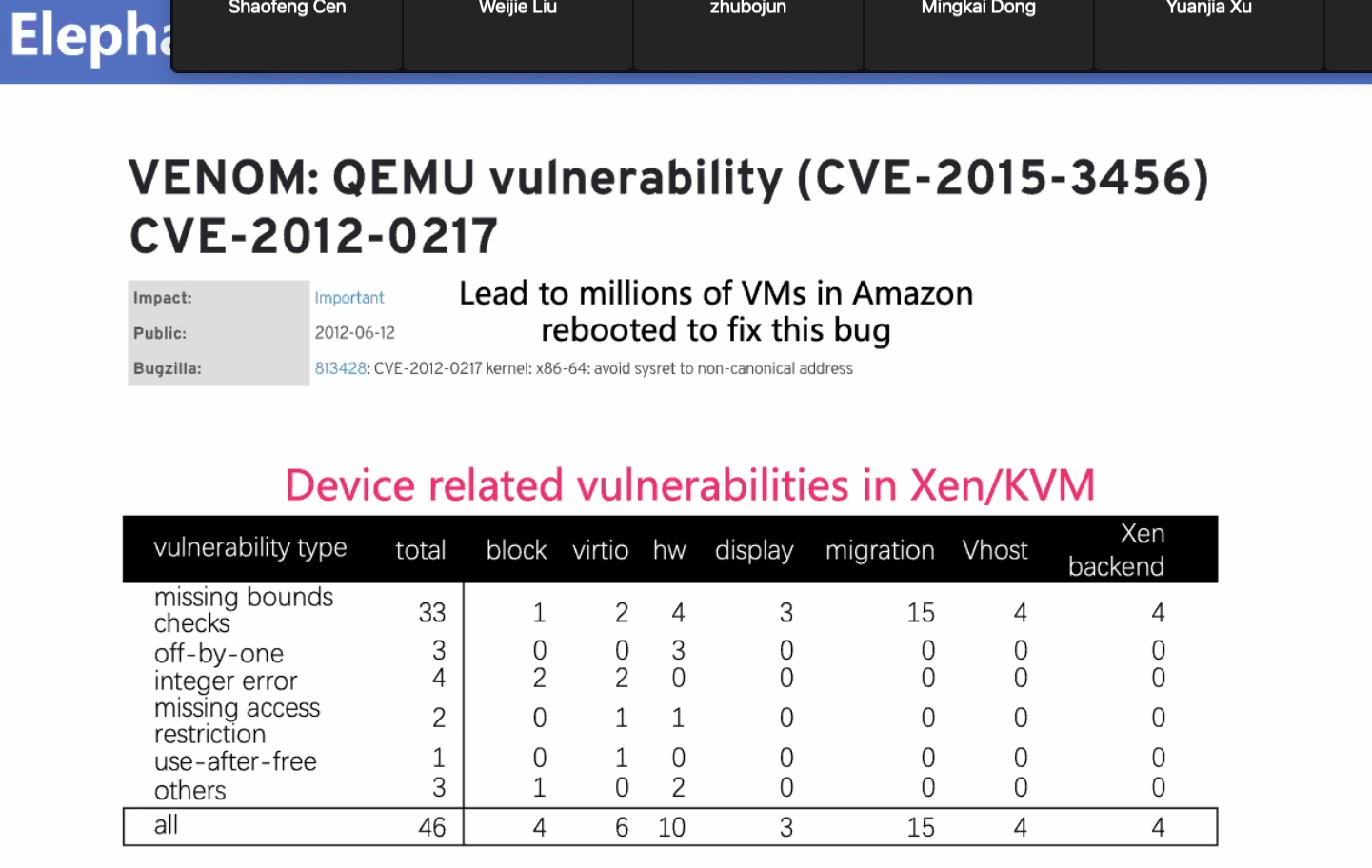

Increasing complexity and security vulnerabilities.

Example of AWS vulnerability

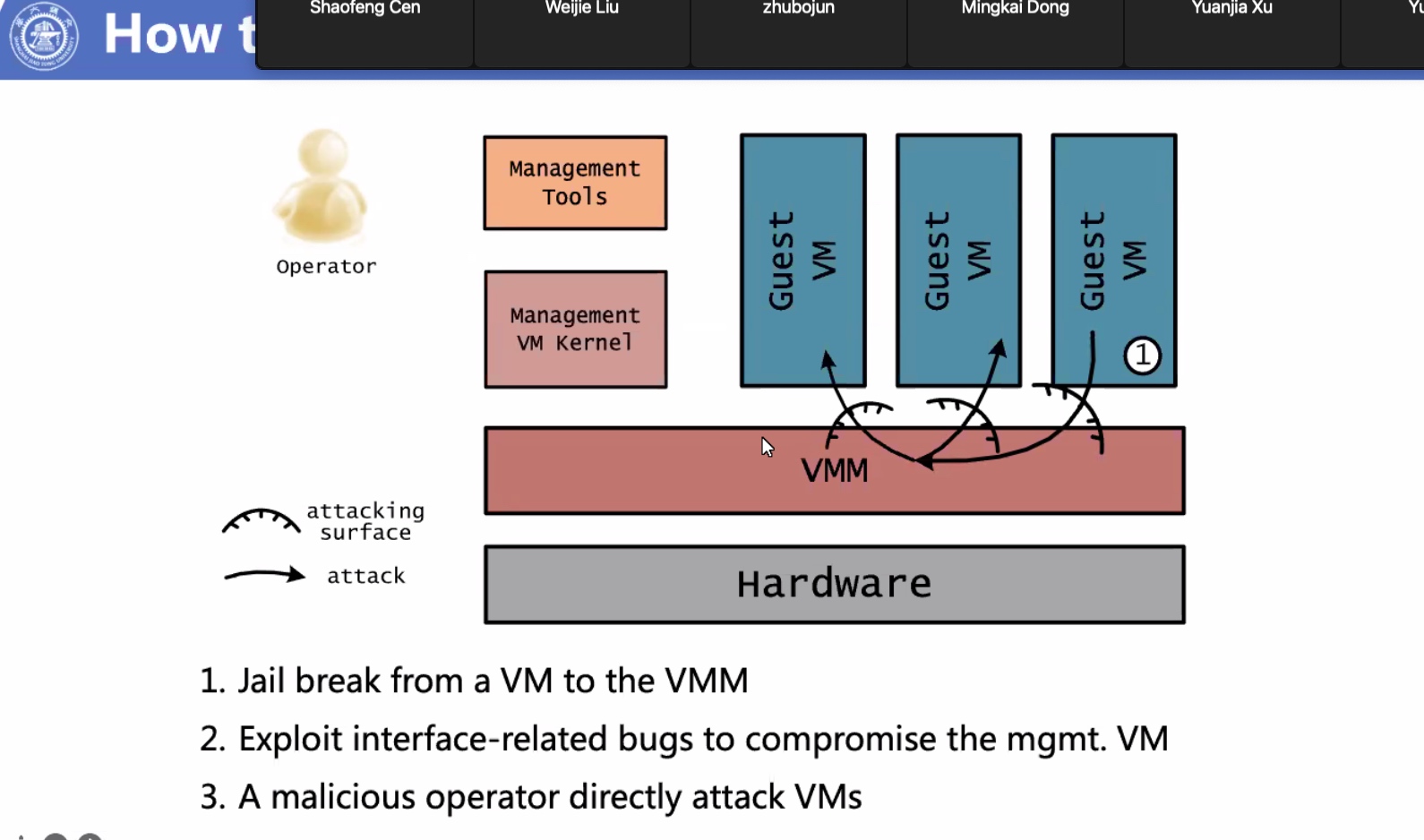

Attack Model

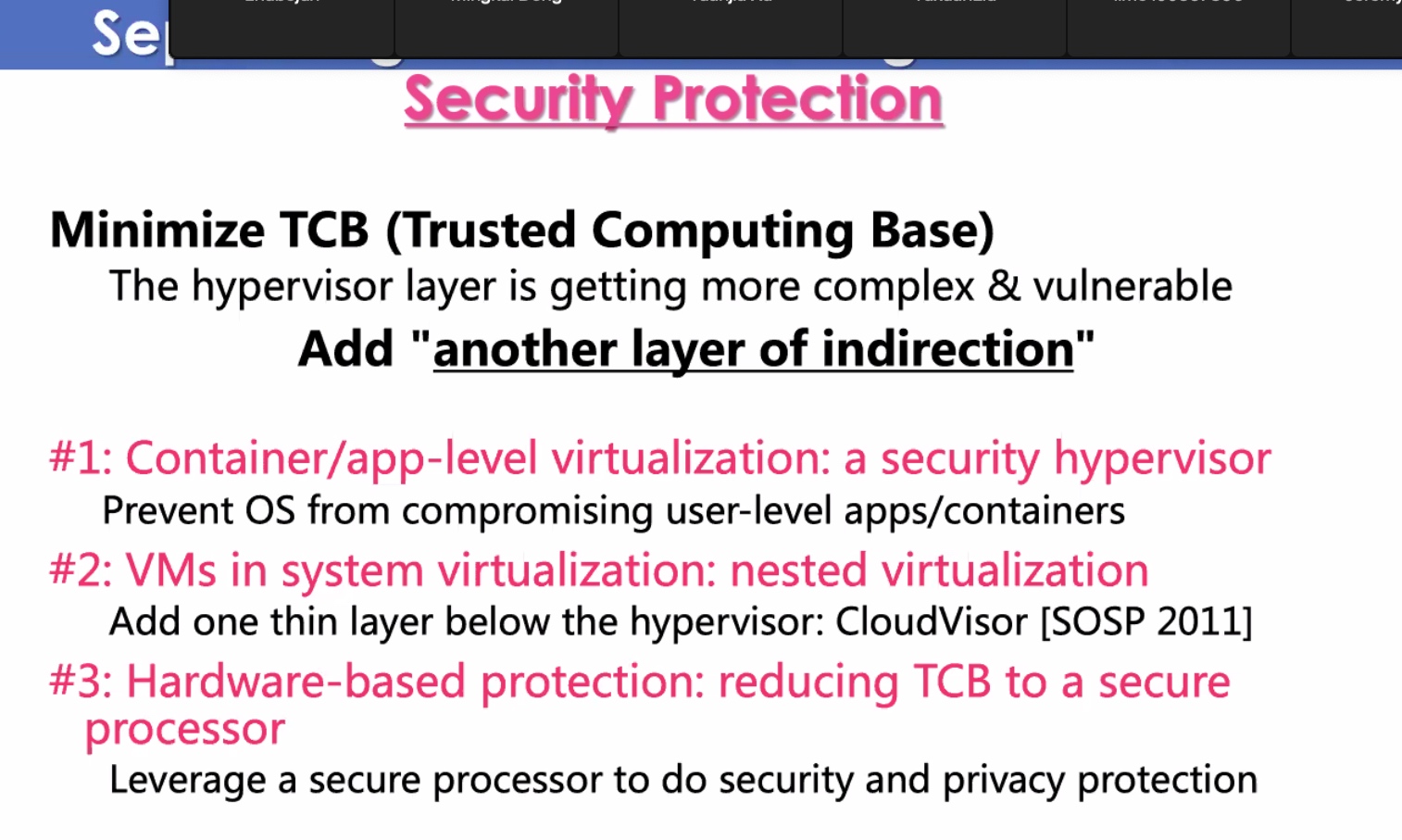

Possible Protection

Crossover

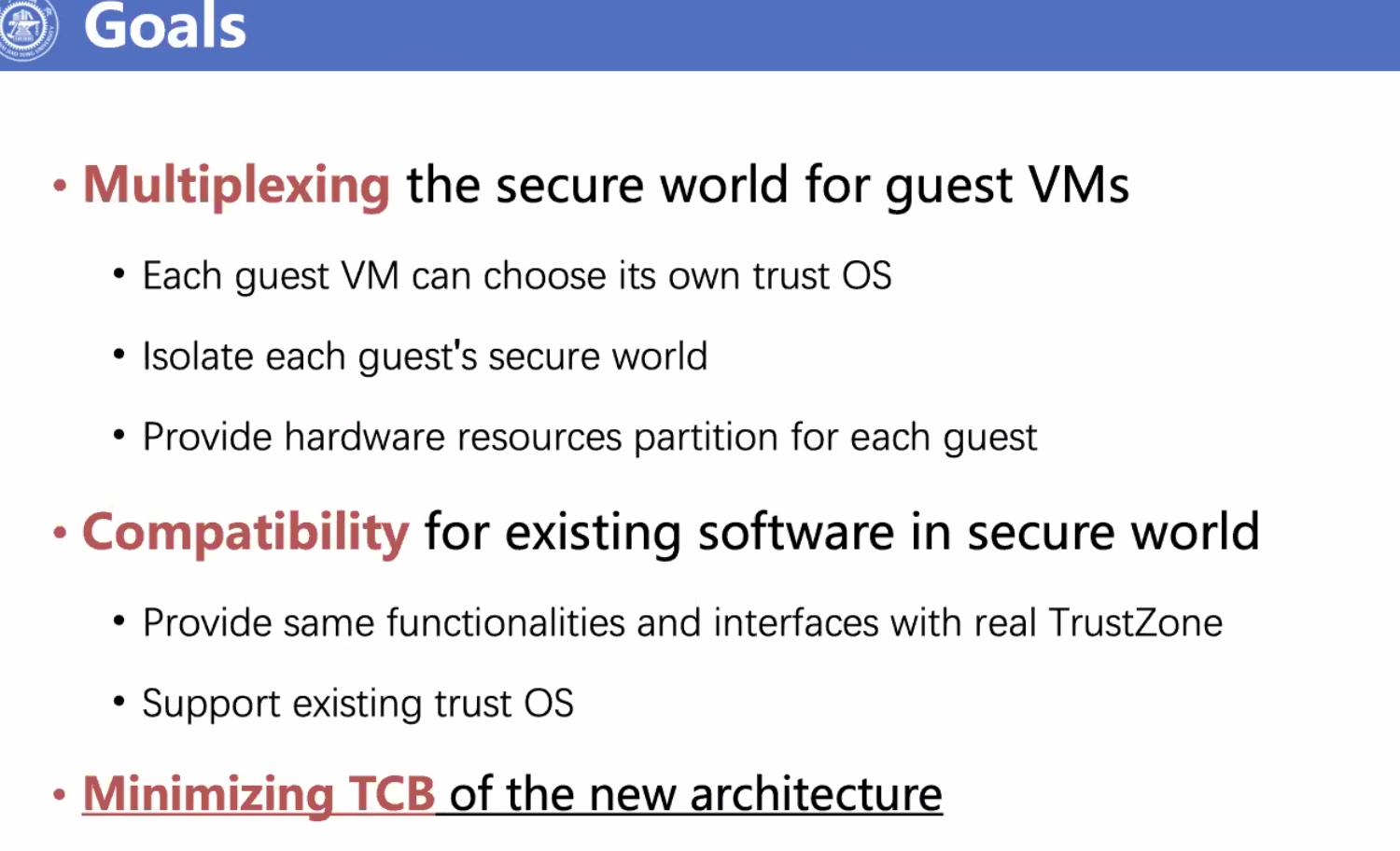

TrustZone on Arm + VMM

Use SW-HW Codesign

Session 1