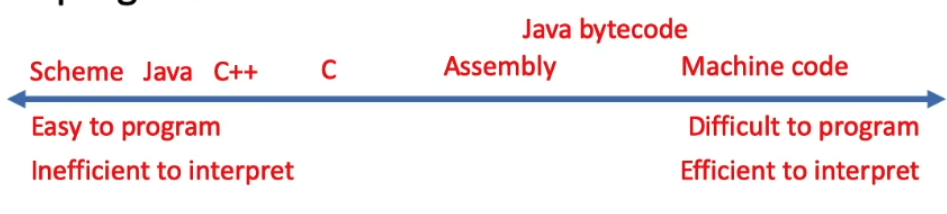

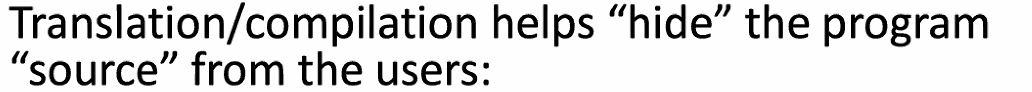

intro - where are we now?

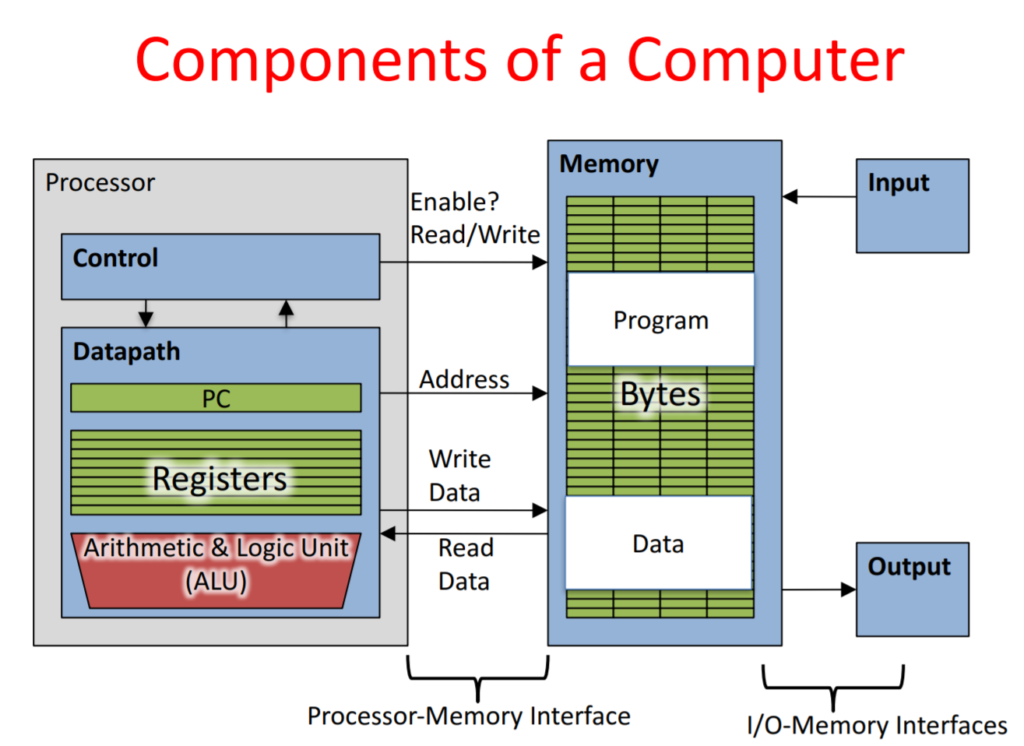

the cpu

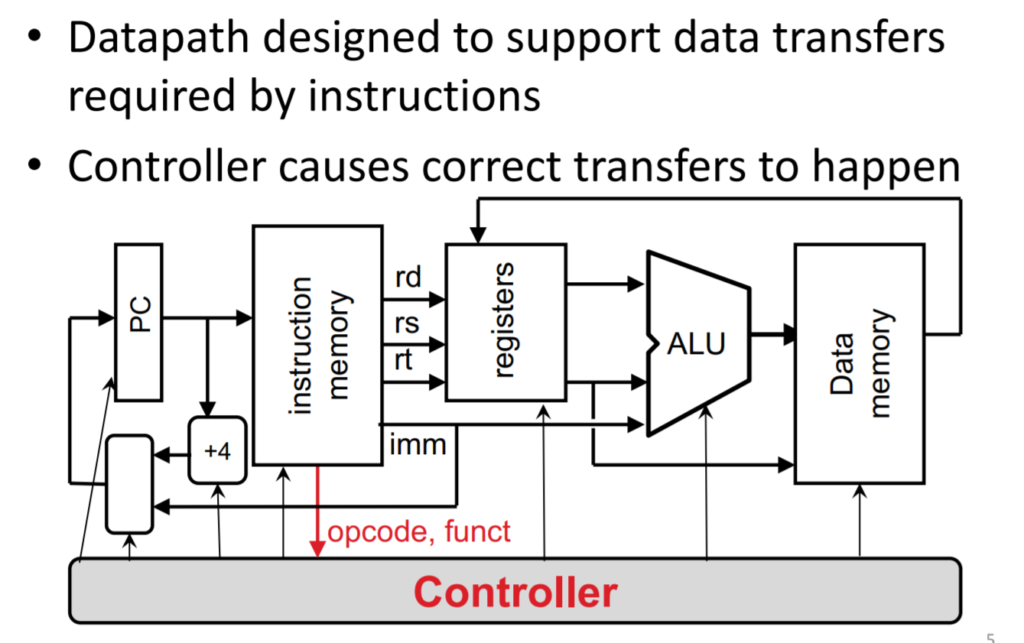

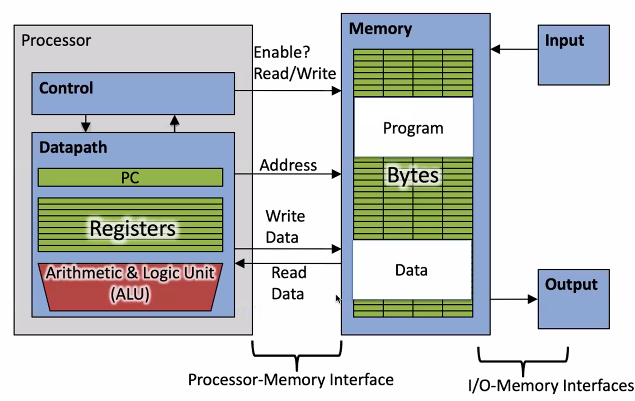

Processor - datapath - control

Scenario in a RISC-V machine

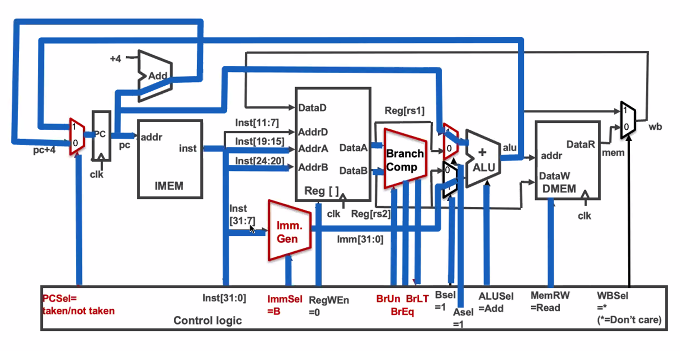

The datapath and control

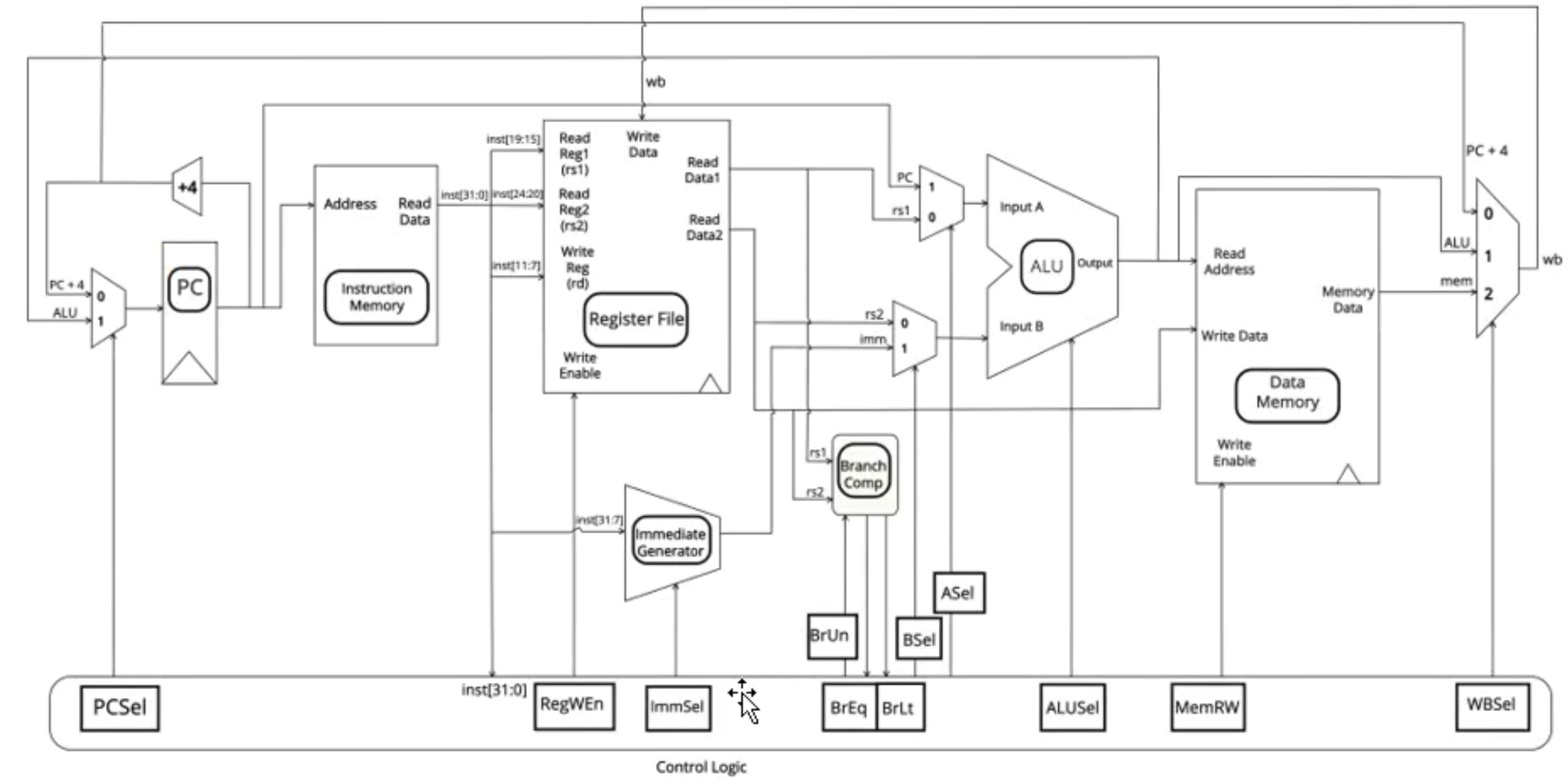

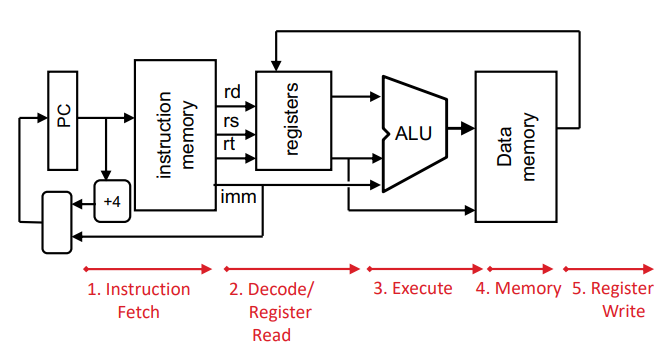

Overview

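- problem: a single monolithic block that executes a whole instruction is bulky and hard to design

- solution: break up the process of executing an instruction into stages

- smaller stages are easier to design

- each stage is easy to optimize on its own, without touching the others (modularity)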

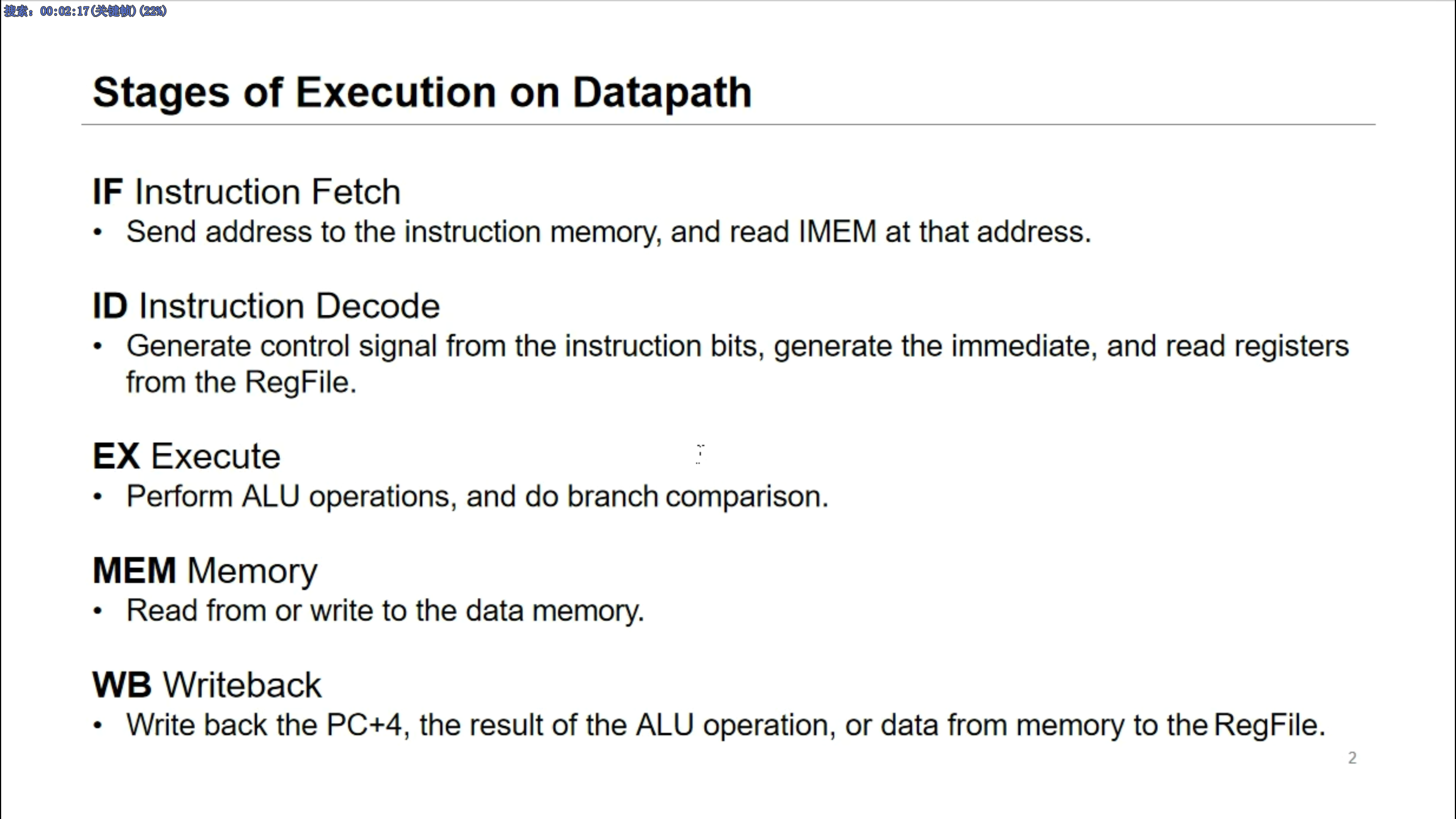

five stages

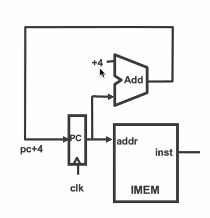

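- Instruction Fetch (IF)

  - fetch the 32-bit instruction at the address held in the PC, then PC += 4 (the next sequential instruction)

  - instructions live in memory, so this stage takes full advantage of the memory hierarchy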

- Instruction Decode (ID)

  - first, read the opcode to determine the instruction type and field lengths

  - second (at the same time!), read in data from all necessary registers

    - for add, read two registers

    - for addi, read one register
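The fetch and decode steps above can be sketched in a few lines of Python. This is a minimal illustration, not a hardware description: the names `bits`, `fetch`, `decode`, `imem`, and `regs` are hypothetical, the instruction memory is modeled as a dict from byte address to 32-bit word, and only the `add` (opcode 0x33) and `addi` (opcode 0x13) cases are handled.

```python
def bits(word, hi, lo):
    """Extract the bit field word[hi:lo] (inclusive) as an unsigned int."""
    return (word >> lo) & ((1 << (hi - lo + 1)) - 1)

def fetch(imem, pc):
    """Instruction Fetch: read the word at pc and compute pc + 4."""
    return imem[pc], pc + 4

def decode(inst, regs):
    """Instruction Decode: find the type, then read the needed registers."""
    opcode = bits(inst, 6, 0)
    rd = bits(inst, 11, 7)
    rs1 = bits(inst, 19, 15)
    rs2 = bits(inst, 24, 20)
    if opcode == 0x33:        # R-type such as add: two source registers
        operands = (regs[rs1], regs[rs2])
    elif opcode == 0x13:      # I-type arithmetic such as addi: one source register
        operands = (regs[rs1],)
    else:
        raise ValueError(f"opcode {opcode:#x} not modeled in this sketch")
    return opcode, rd, operands

# Assumed example program: add t1, t0, t1 ; addi t0, t0, -1
imem = {0: 0x00628333, 4: 0xFFF28293}
regs = [0] * 32
regs[5], regs[6] = 3, 10      # t0 = x5, t1 = x6

inst0, pc1 = fetch(imem, 0)
inst1, pc2 = fetch(imem, pc1)
add_decoded = decode(inst0, regs)
addi_decoded = decode(inst1, regs)
```

Real hardware reads both register ports in parallel and simply ignores the one it does not need; the branch on the opcode here only mirrors the "two registers vs. one register" distinction in the notes.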

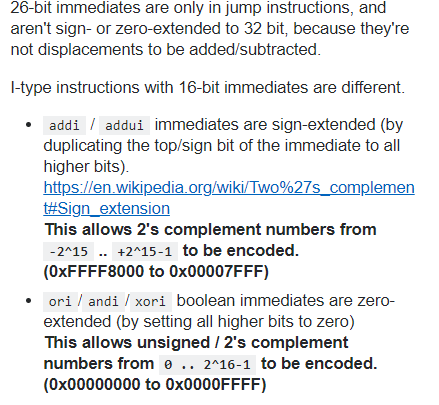

  - third, generate the immediates

- Execute (ALU)

  - the real work of most instructions is done here: arithmetic (+, -, *, /), shifting, logic (&, |)

  - loads and stores use this stage too, to compute the memory address

    - lw t0, 40(t1)

    - the address we are accessing in memory = the value in t1 PLUS the immediate 40

    - so this addition is done in this stage
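As a rough sketch of the address calculation for `lw t0, 40(t1)`, the snippet below models a small ALU and reuses its adder for the load address. The function name `alu`, the operation strings, and the starting value of `t1` are assumptions made for illustration; results wrap to 32 bits as they would in an RV32 register.

```python
MASK32 = 0xFFFFFFFF  # keep results within 32 bits

def alu(op, a, b):
    """A tiny ALU model covering a few of the operations listed above."""
    if op == "add":
        return (a + b) & MASK32
    if op == "sub":
        return (a - b) & MASK32
    if op == "and":
        return a & b
    if op == "or":
        return a | b
    if op == "sll":
        return (a << (b & 31)) & MASK32
    raise ValueError(f"unsupported ALU op: {op}")

# lw t0, 40(t1): the effective address is t1 + 40
t1 = 0x1000                        # assumed register value
address = alu("add", t1, 40)       # 0x1028
```

The same adder serves both `add` and the load/store address, which is why loads and stores need no separate address unit in this stage.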

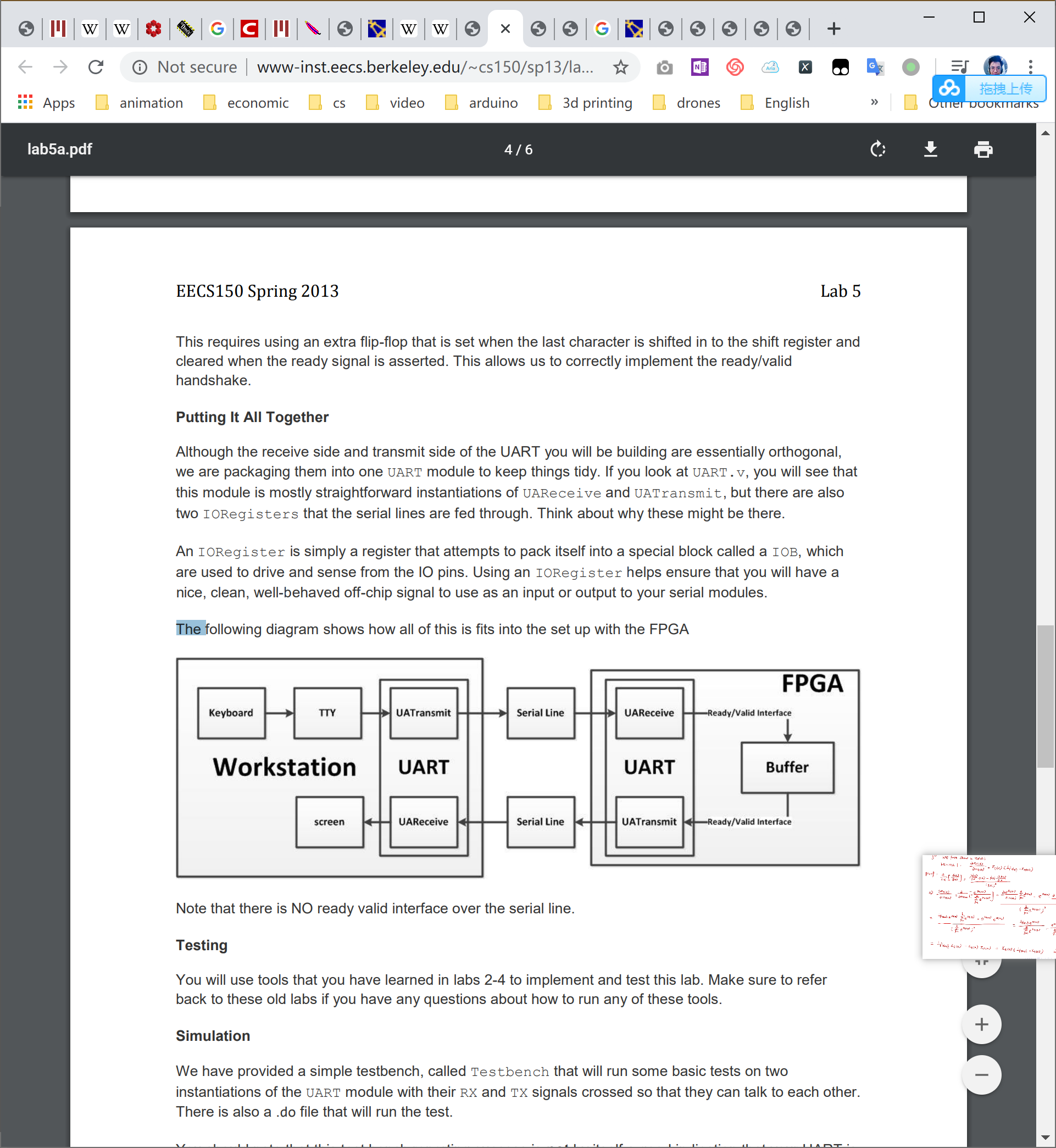

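- Memory Access (MEM)

  - only load and store instructions do anything during this stage; the others stay idle

  - this stage is expected to be fast (thanks to caches), but it is unavoidable because loads and stores need it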

- Register Write (WB)

  - write the result of some computation into a register

  - stores and branches write nothing back, so they stay idle in this stage
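Putting the five stages together, the following is a minimal single-instruction interpreter, written under several assumptions: only `add`, `addi`, `lw`, and `sw` are modeled, memories are dicts keyed by byte address, every access is a whole aligned word, and the names `step`, `imem`, `dmem`, and `regs` are hypothetical.

```python
MASK32 = 0xFFFFFFFF

def bits(word, hi, lo):
    """Extract word[hi:lo] (inclusive) as an unsigned int."""
    return (word >> lo) & ((1 << (hi - lo + 1)) - 1)

def sign_extend(value, width):
    """Interpret a width-bit field as a two's-complement number."""
    sign = 1 << (width - 1)
    return (value ^ sign) - sign

def step(pc, regs, imem, dmem):
    """Run one instruction through all five stages; return the next pc."""
    # 1. Instruction Fetch
    inst = imem[pc]
    next_pc = pc + 4
    # 2. Instruction Decode: fields, register reads, immediates
    opcode = bits(inst, 6, 0)
    rd, rs1, rs2 = bits(inst, 11, 7), bits(inst, 19, 15), bits(inst, 24, 20)
    a, b = regs[rs1], regs[rs2]
    imm_i = sign_extend(bits(inst, 31, 20), 12)
    imm_s = sign_extend((bits(inst, 31, 25) << 5) | bits(inst, 11, 7), 12)
    # 3. Execute (ALU)
    if opcode == 0x33:               # R-type; only add is modeled here
        alu_out = (a + b) & MASK32
    elif opcode in (0x13, 0x03):     # addi, lw: rs1 + I-immediate
        alu_out = (a + imm_i) & MASK32
    elif opcode == 0x23:             # sw: rs1 + S-immediate
        alu_out = (a + imm_s) & MASK32
    else:
        raise ValueError(f"opcode {opcode:#x} not modeled in this sketch")
    # 4. Memory Access: only loads and stores do anything
    if opcode == 0x03:
        wb = dmem.get(alu_out, 0)
    elif opcode == 0x23:
        dmem[alu_out] = b
        wb = None                    # stores write nothing back
    else:
        wb = alu_out
    # 5. Register Write (x0 stays hard-wired to zero)
    if wb is not None and rd != 0:
        regs[rd] = wb
    return next_pc

# Assumed program: lw t0, 40(t1) ; add t1, t0, t1 ; sw t1, 0(x0)
imem = {0: 0x02832283, 4: 0x00628333, 8: 0x00602023}
dmem = {0x1028: 7}
regs = [0] * 32
regs[6] = 0x1000                     # t1 = x6

pc = 0
for _ in range(3):
    pc = step(pc, regs, imem, dmem)
```

After the three steps, `t0` holds the loaded word, `t1` holds the sum, and the store has written that sum to address 0. Each instruction passes through every stage, but `add` does nothing in the memory stage and `sw` does nothing in the write-back stage, matching the notes above.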

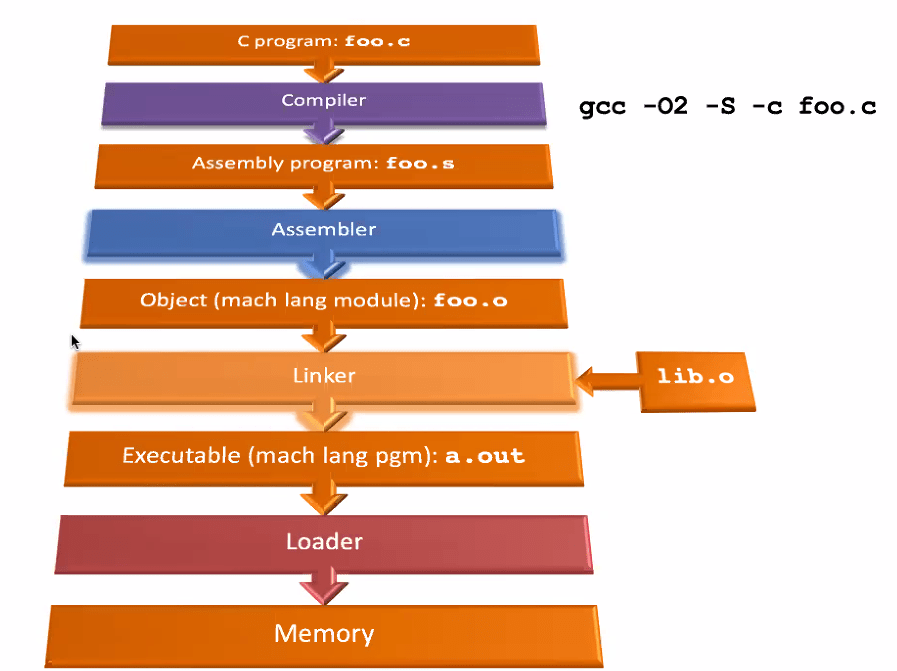

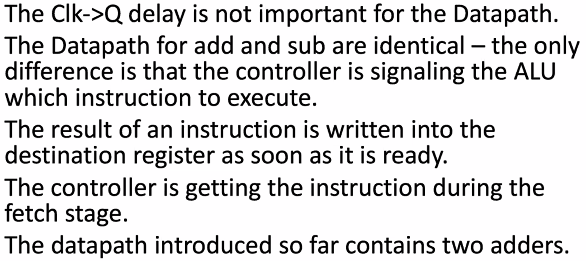

misc

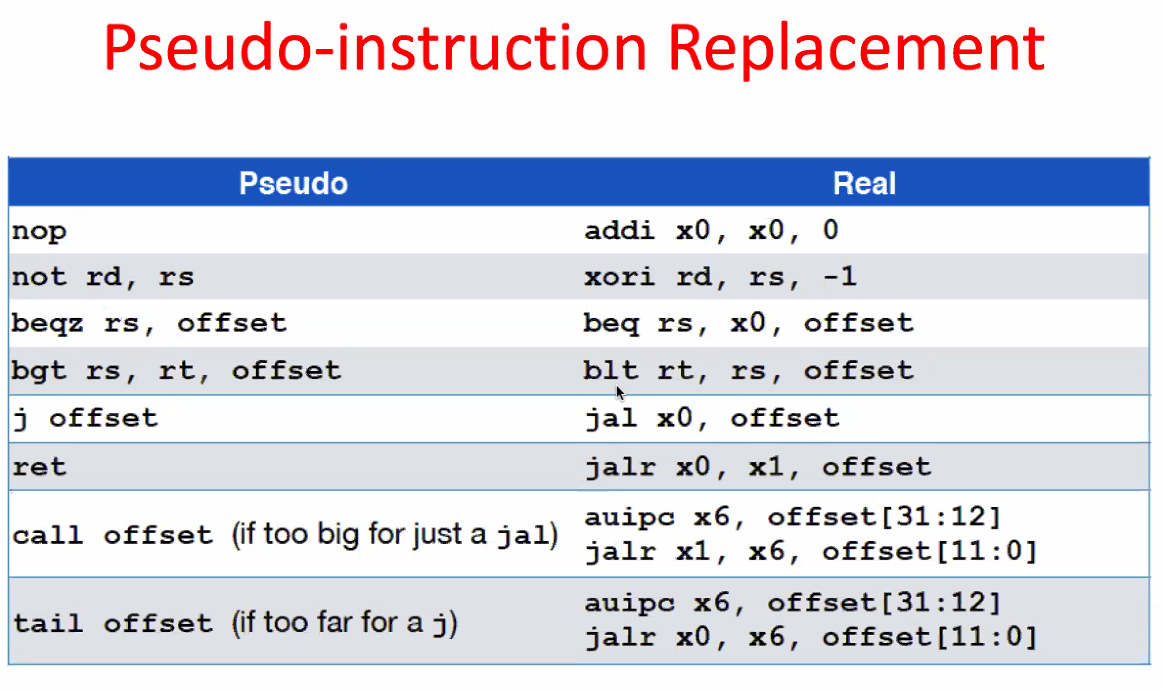

memory alignment

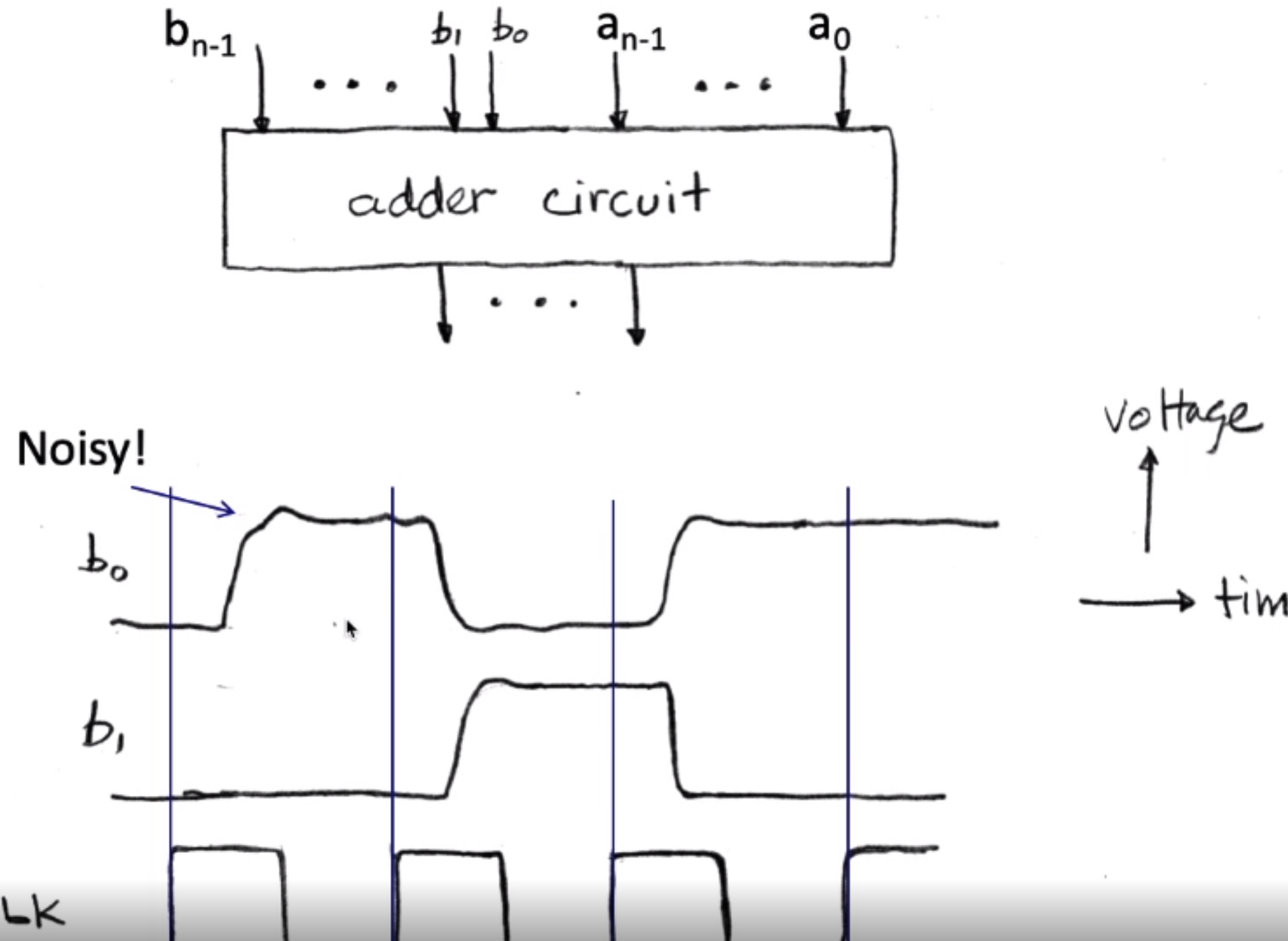

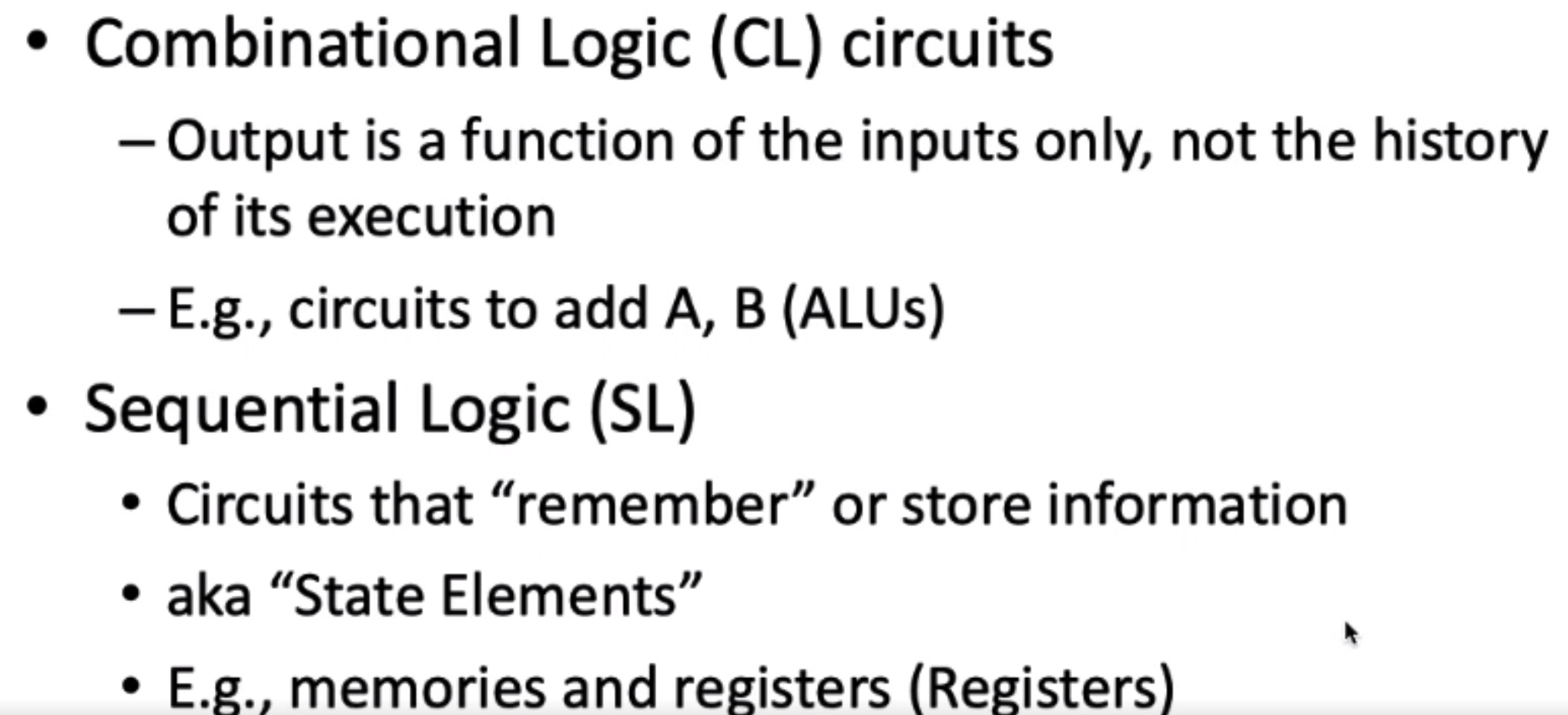

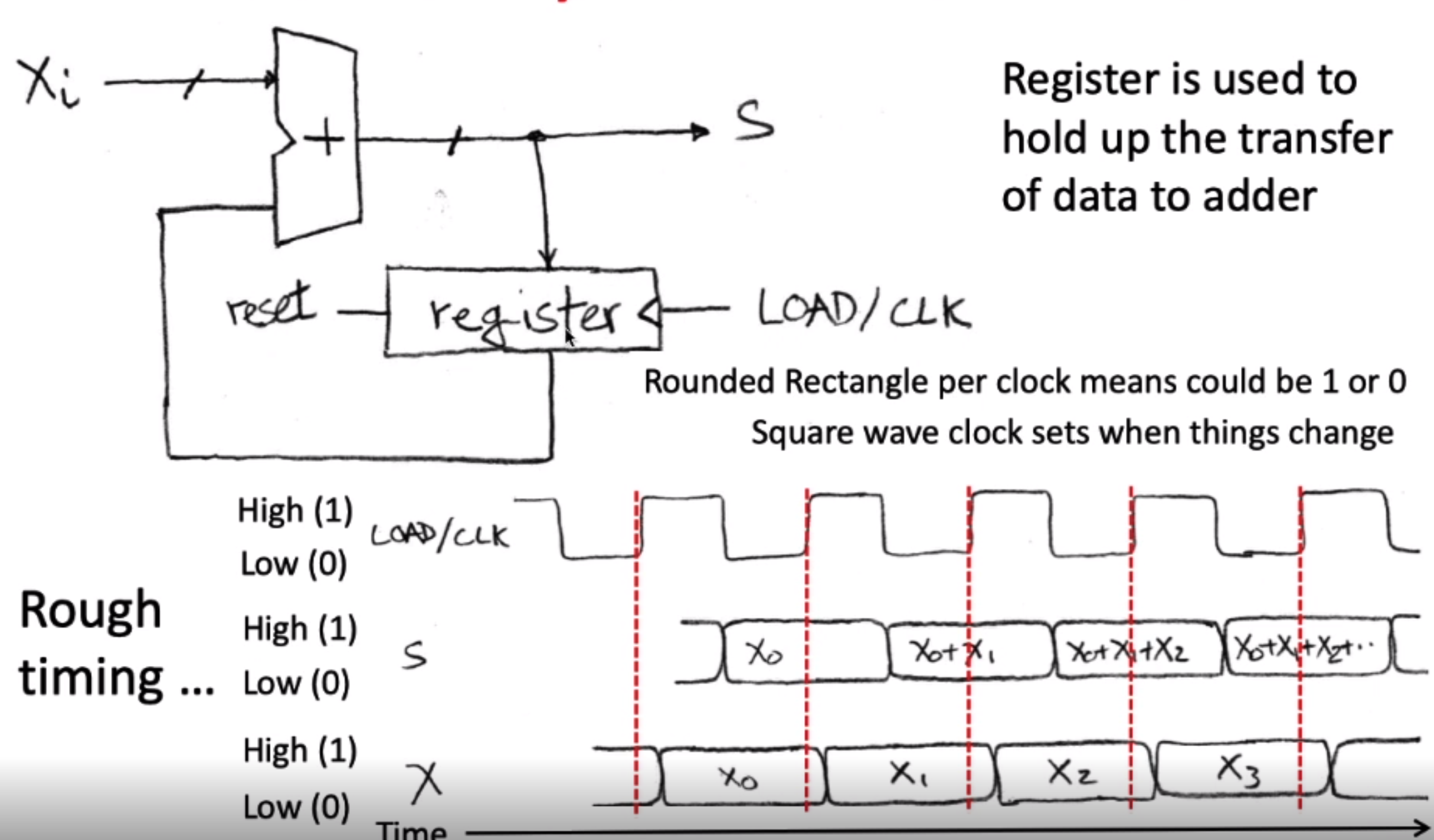

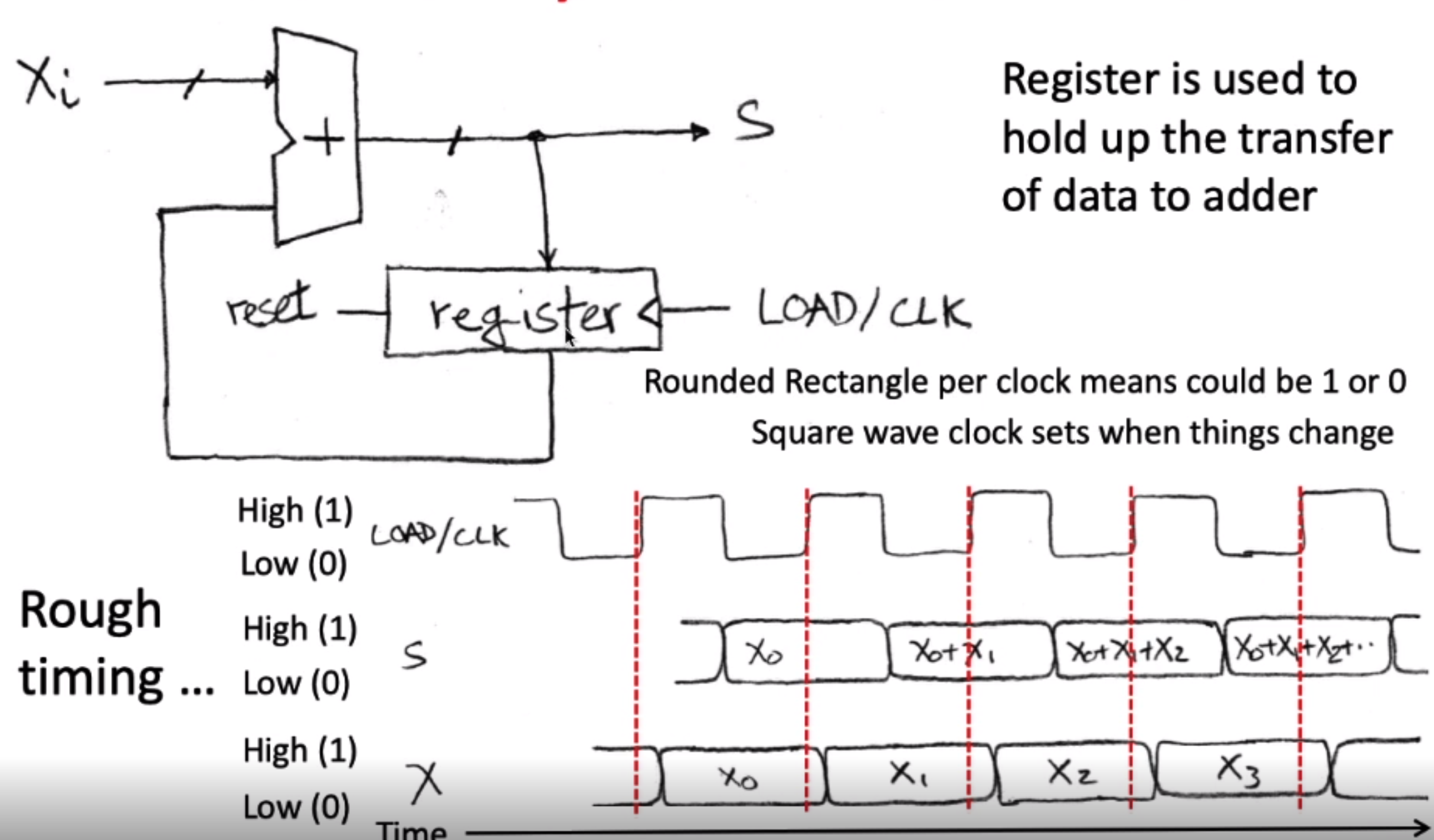

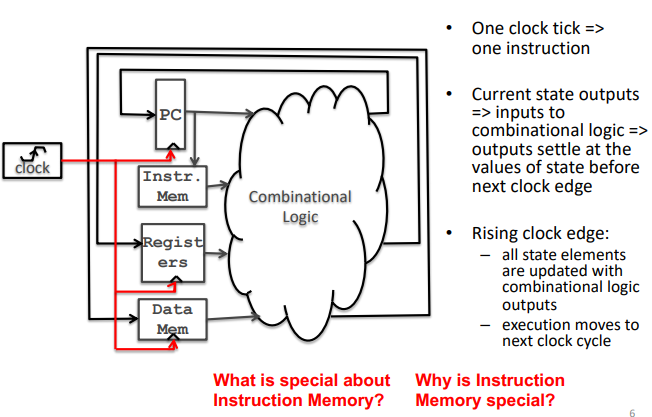

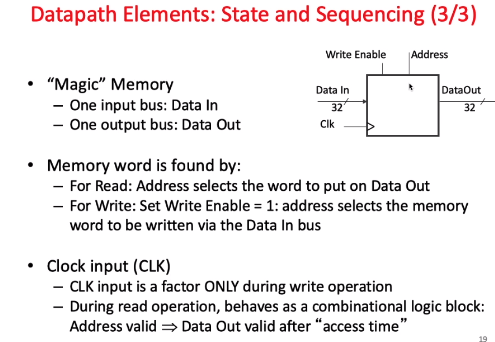

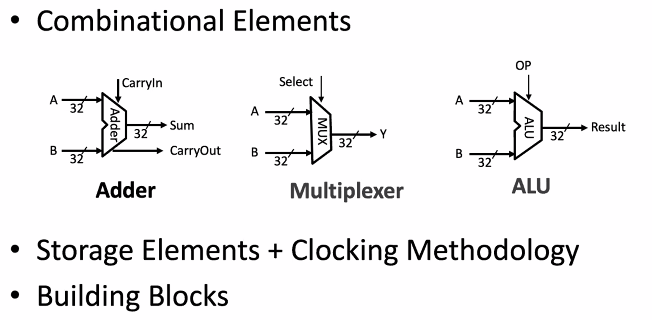

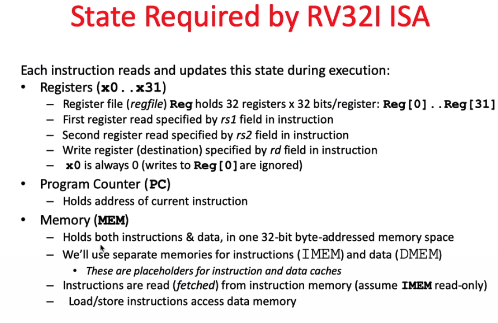

data path elements state and sequencing

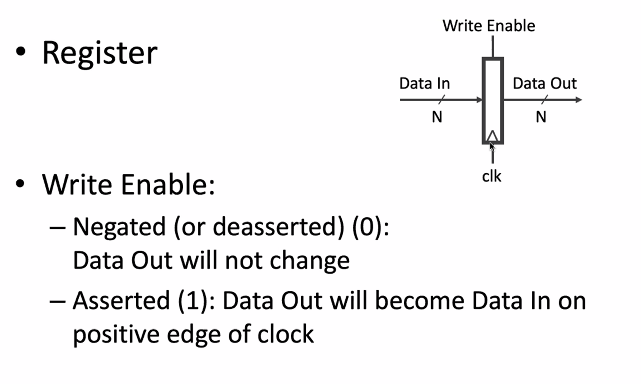

register

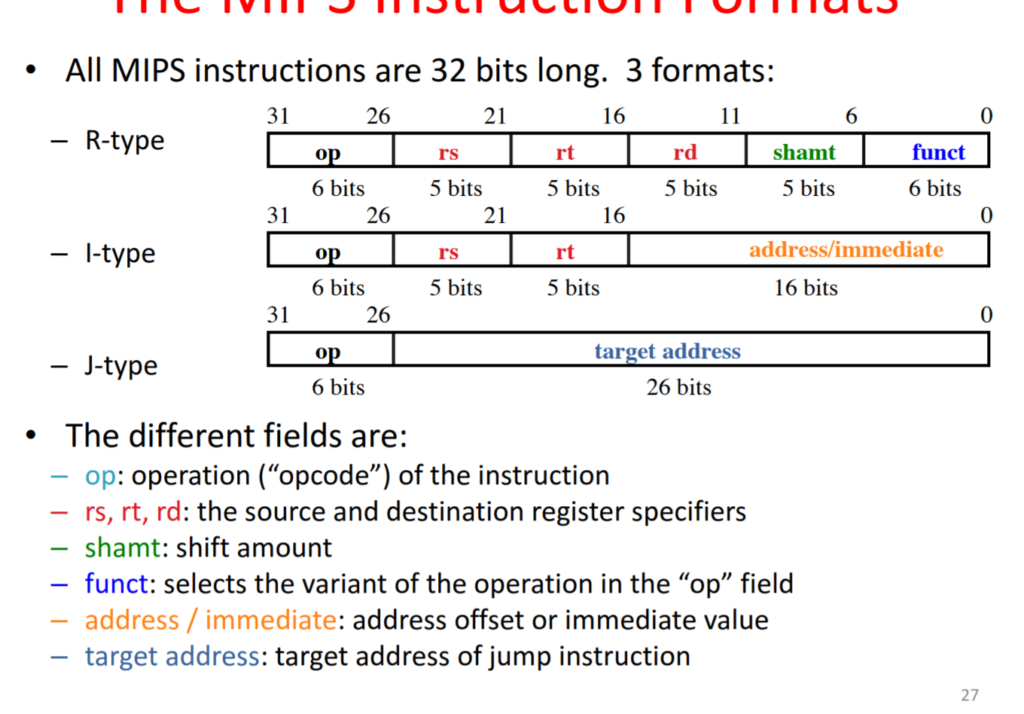

instruction level

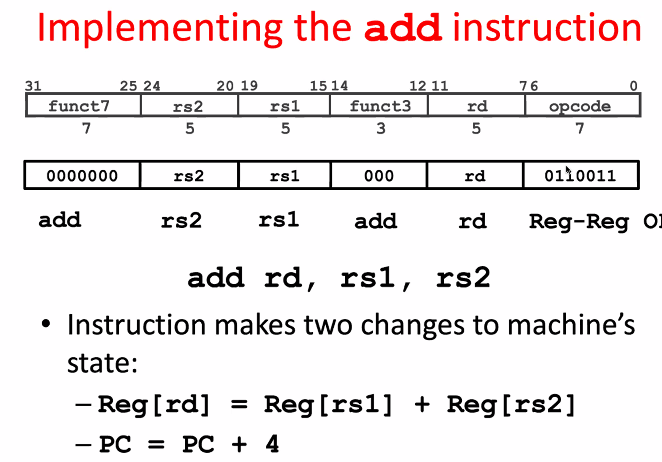

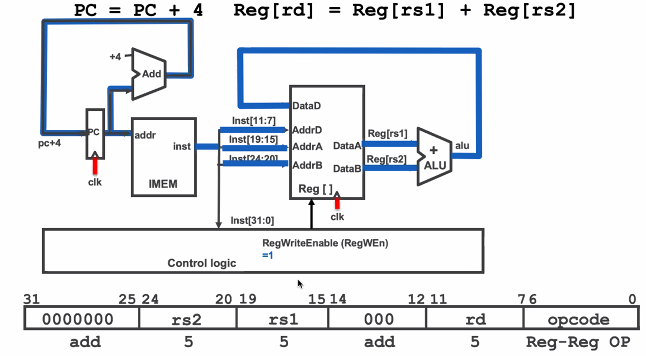

add

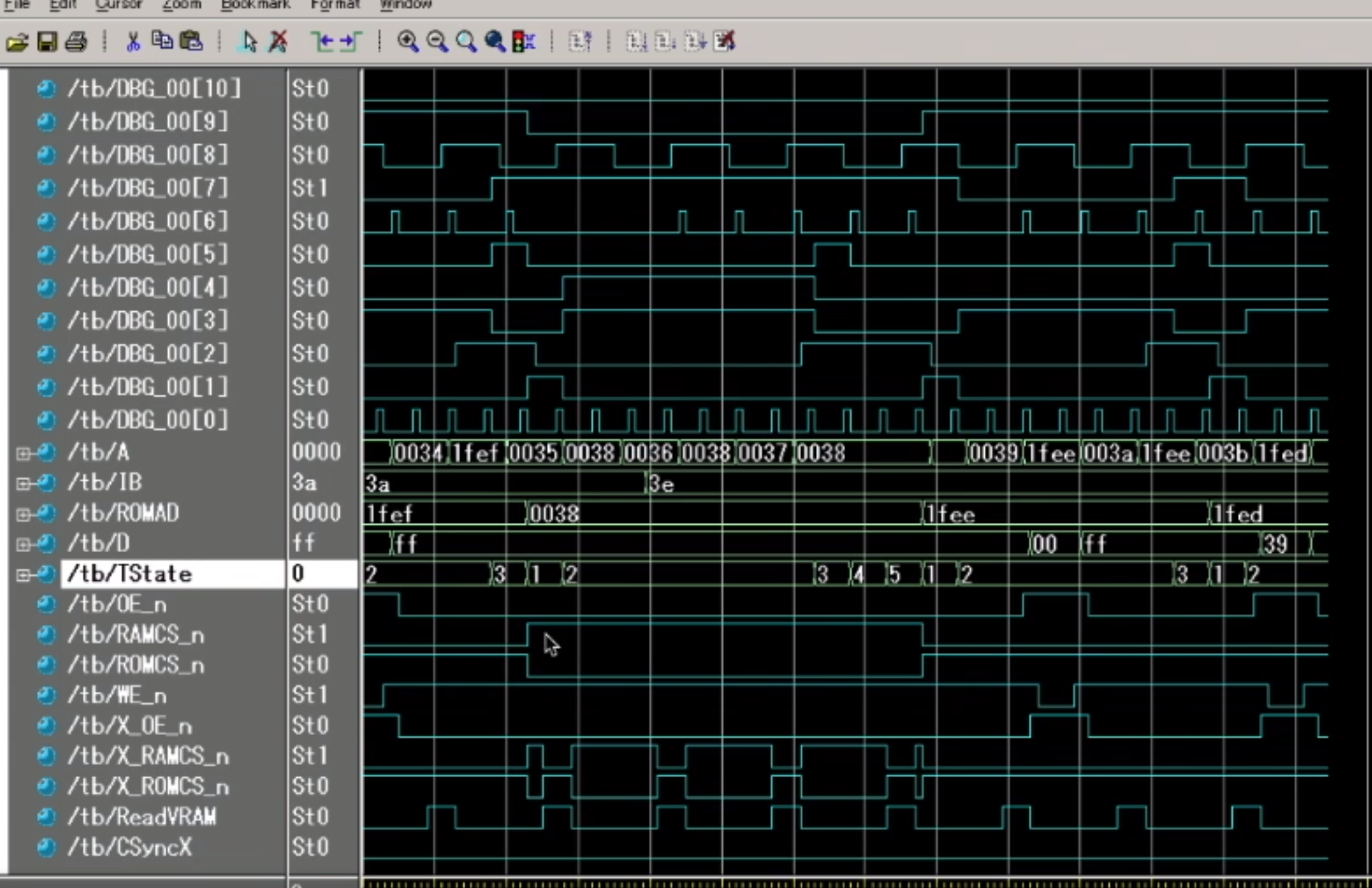

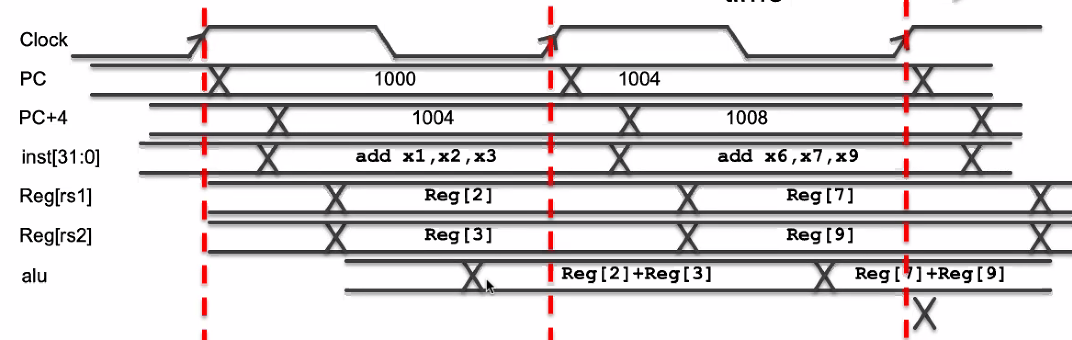

time diagram for add

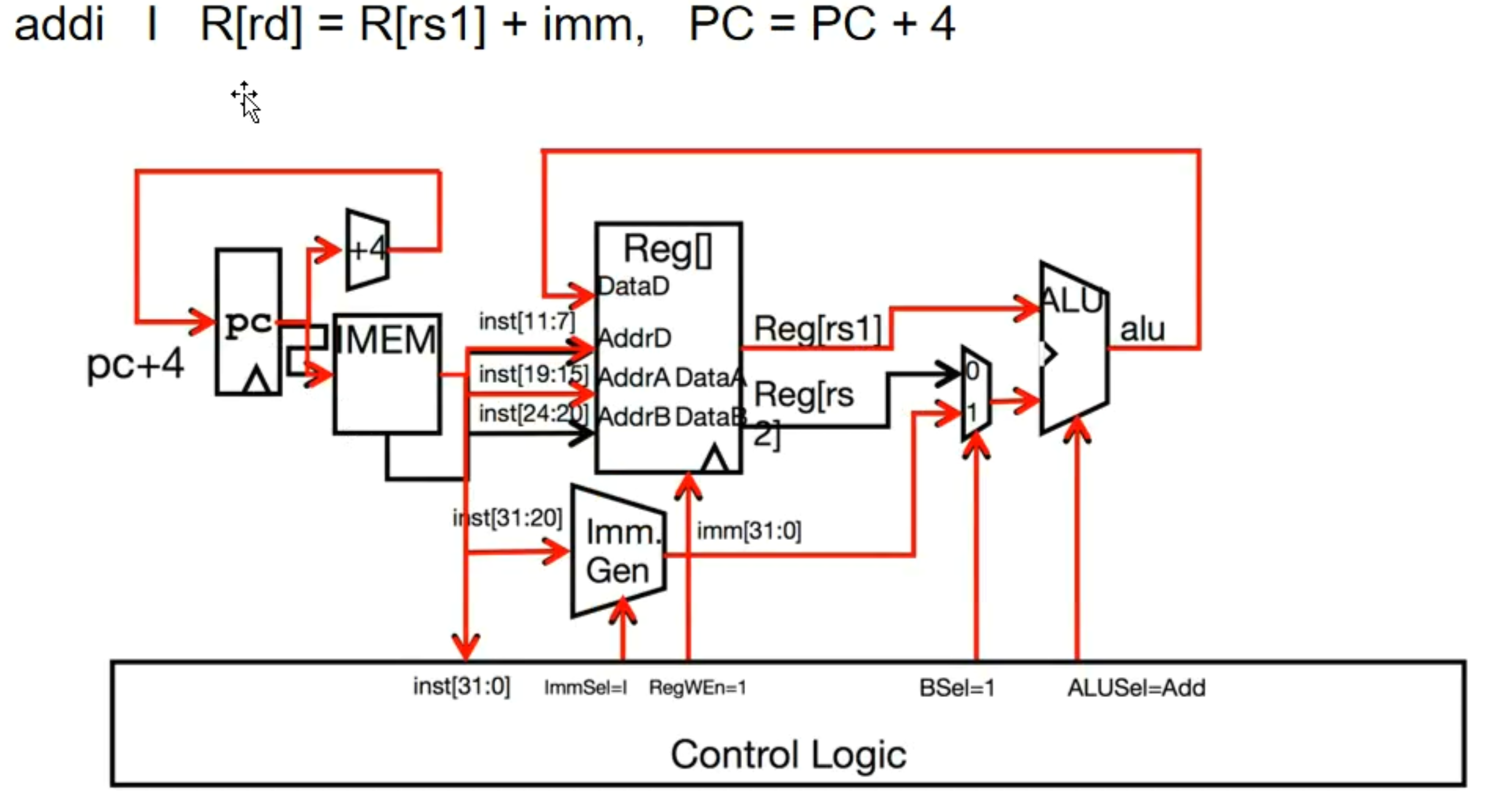

addi

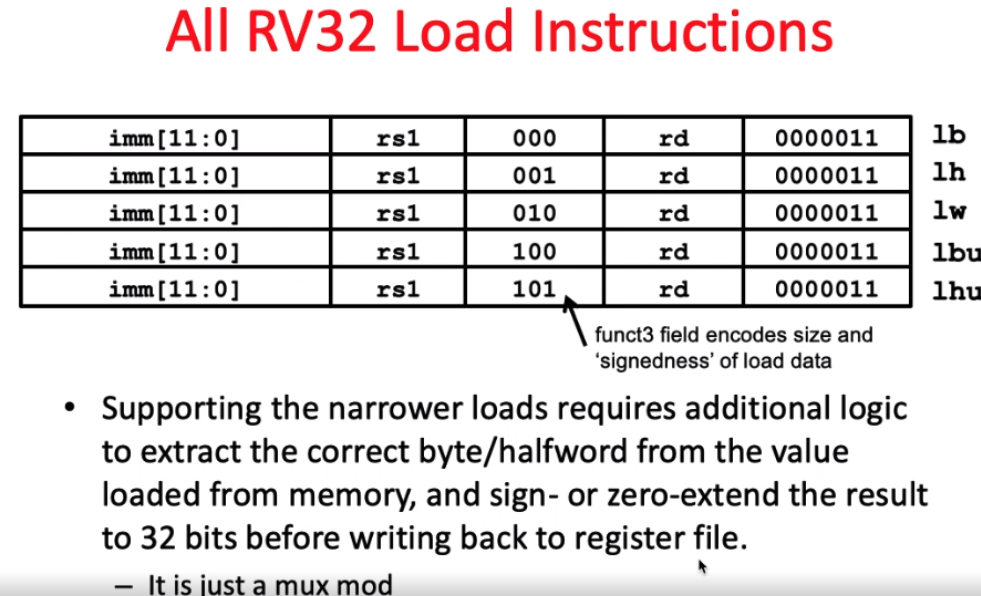

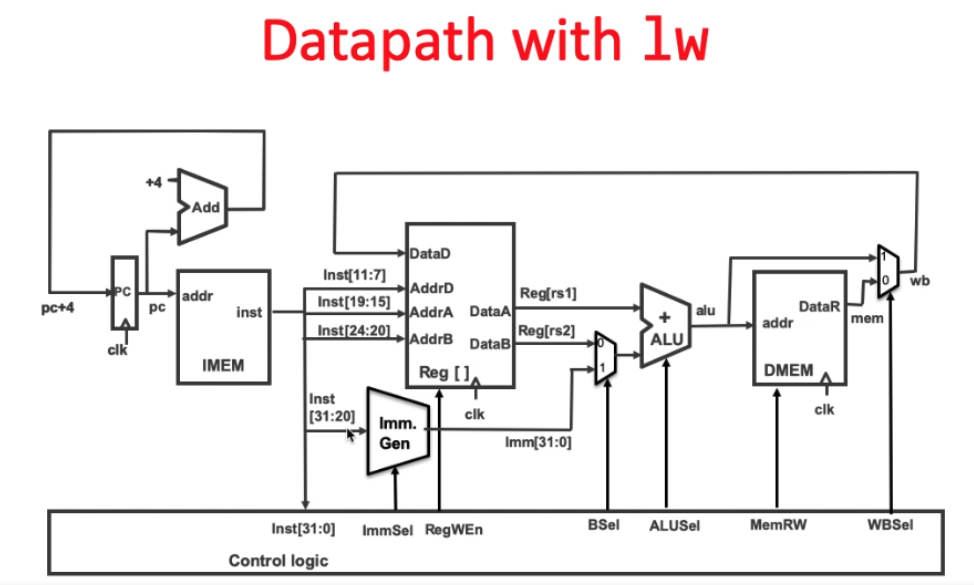

lw

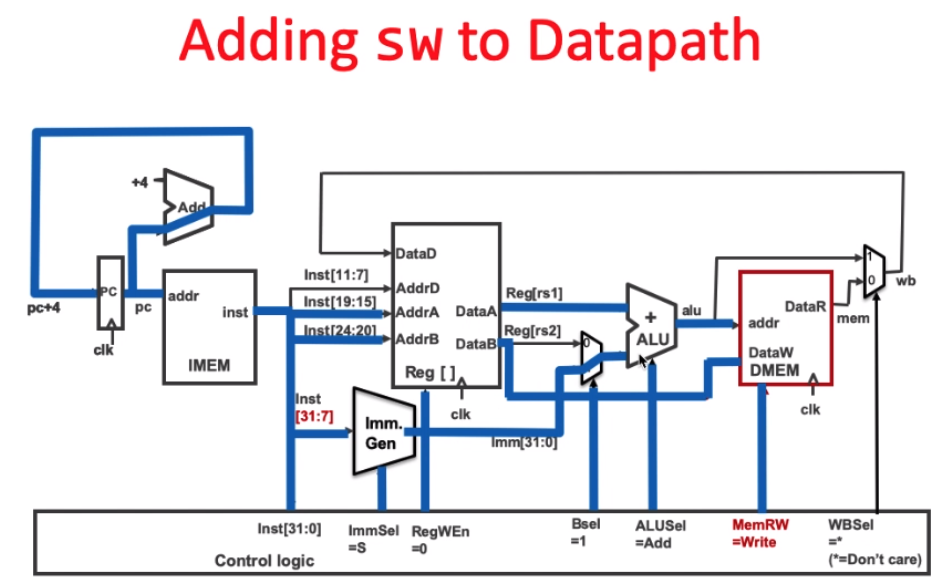

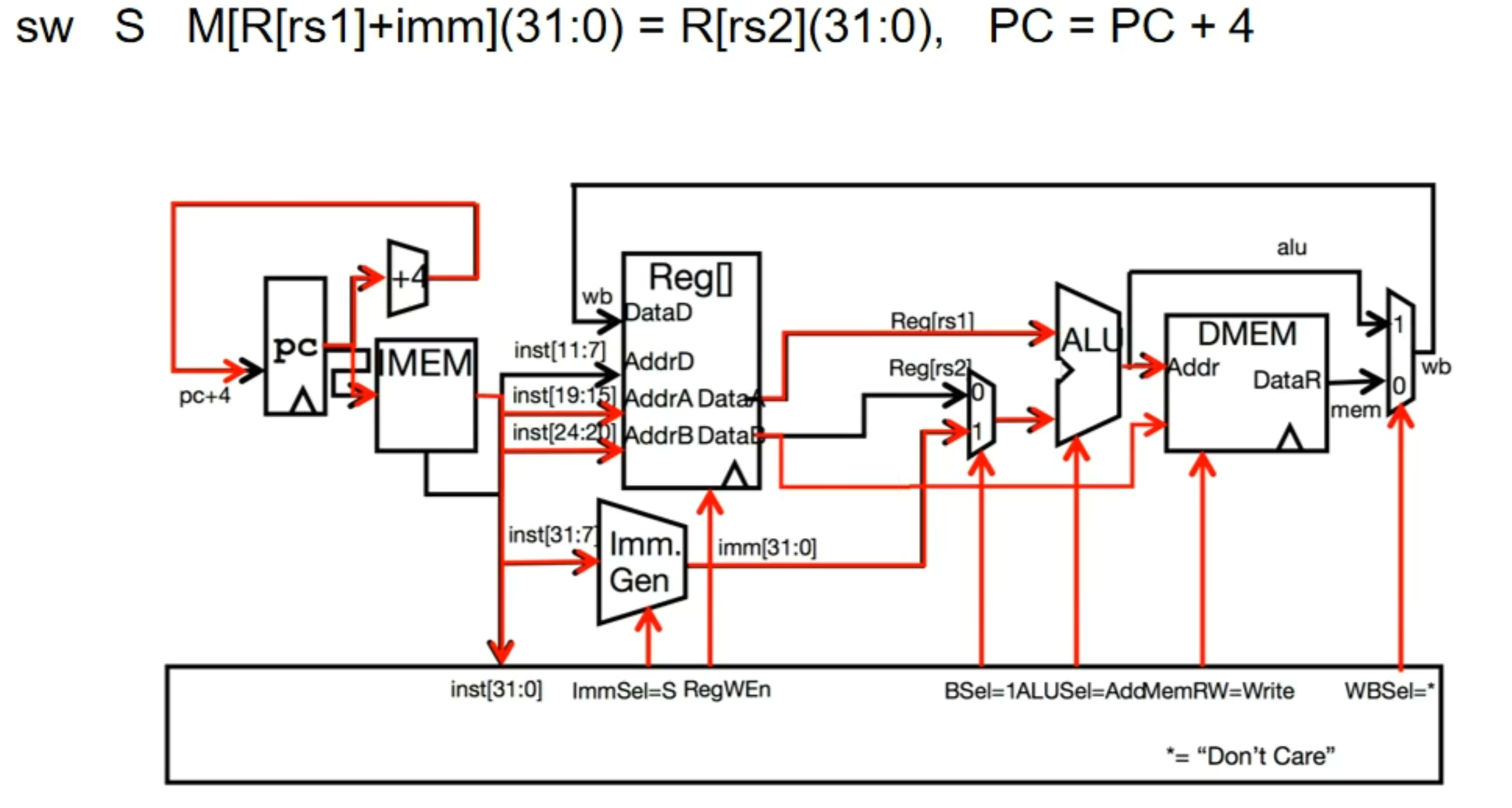

sw - critical path

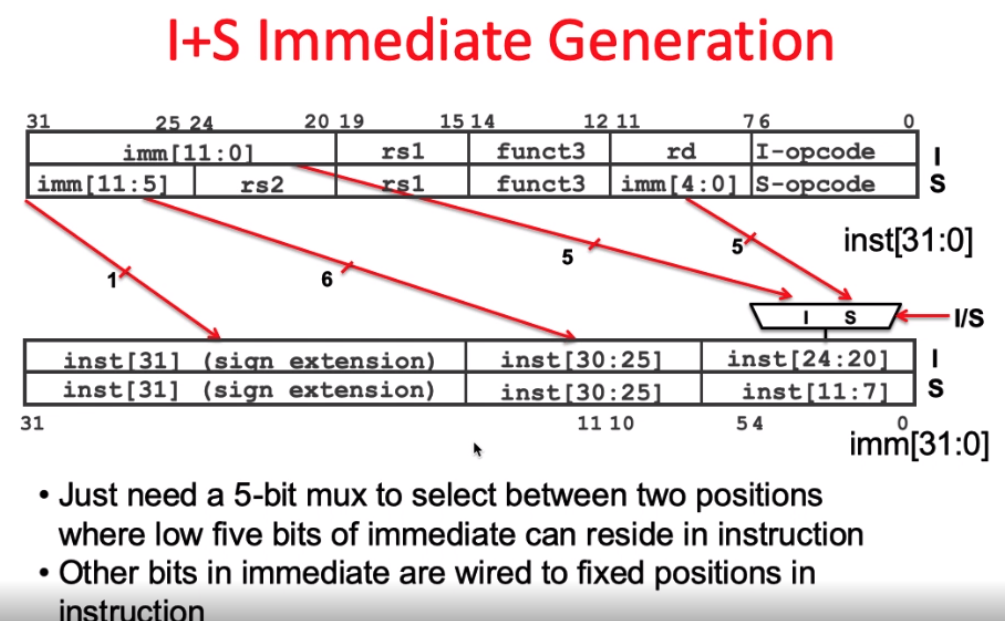

combined I+S Immediate generation

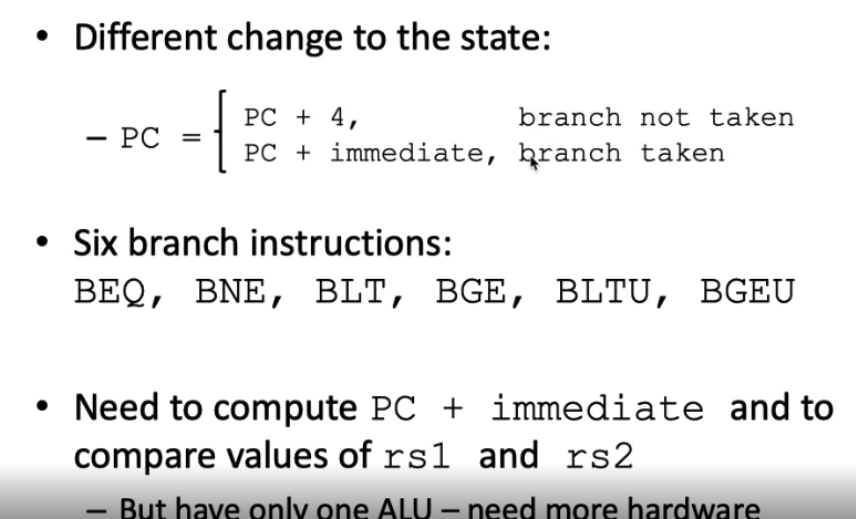
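One way to read the combined I+S immediate generator: both formats share inst[31:25] as the upper seven immediate bits, and only the lower five bits come from different places (inst[24:20] for I-type, inst[11:7] for S-type). The sketch below assumes a hypothetical `imm_gen` function with an `imm_sel` selector; the bit positions follow the RV32I instruction formats.

```python
def bits(word, hi, lo):
    """Extract word[hi:lo] (inclusive) as an unsigned int."""
    return (word >> lo) & ((1 << (hi - lo + 1)) - 1)

def sign_extend(value, width):
    """Interpret a width-bit field as a two's-complement number."""
    sign = 1 << (width - 1)
    return (value ^ sign) - sign

def imm_gen(inst, imm_sel):
    """Generate a 12-bit sign-extended immediate for I- or S-type."""
    upper = bits(inst, 31, 25)           # imm[11:5], same bits in both formats
    if imm_sel == "I":
        lower = bits(inst, 24, 20)       # imm[4:0] sits where rs2 would be
    elif imm_sel == "S":
        lower = bits(inst, 11, 7)        # imm[4:0] sits where rd would be
    else:
        raise ValueError(f"unsupported immediate type: {imm_sel}")
    return sign_extend((upper << 5) | lower, 12)

imm_lw = imm_gen(0x02832283, "I")        # lw t0, 40(t1)
imm_sw = imm_gen(0x02532423, "S")        # sw t0, 40(t1)
imm_neg = imm_gen(0xFE532E23, "S")       # sw t0, -4(t1)
```

Because only the low five bits differ, a hardware version needs just a 5-bit multiplexer controlled by the immediate-select signal; the sign bit is always inst[31].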

branches

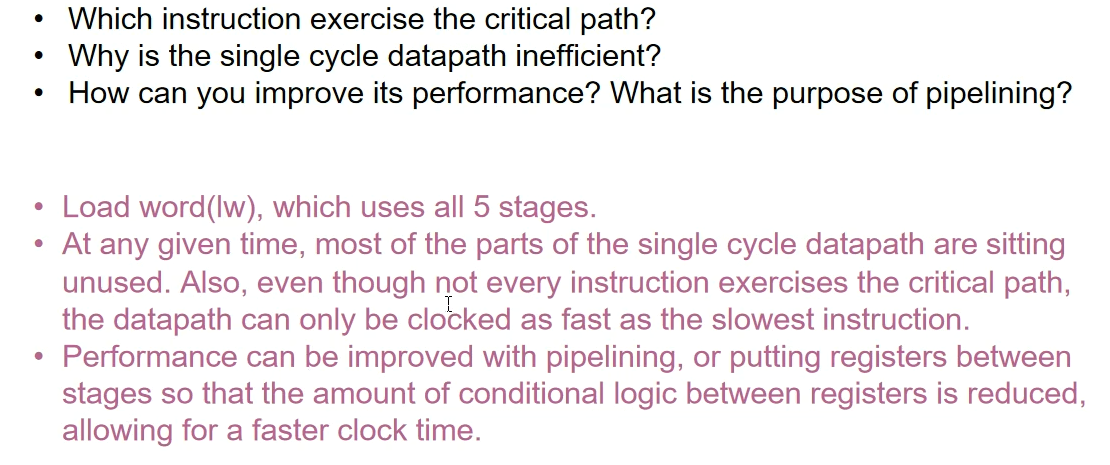

pipelining

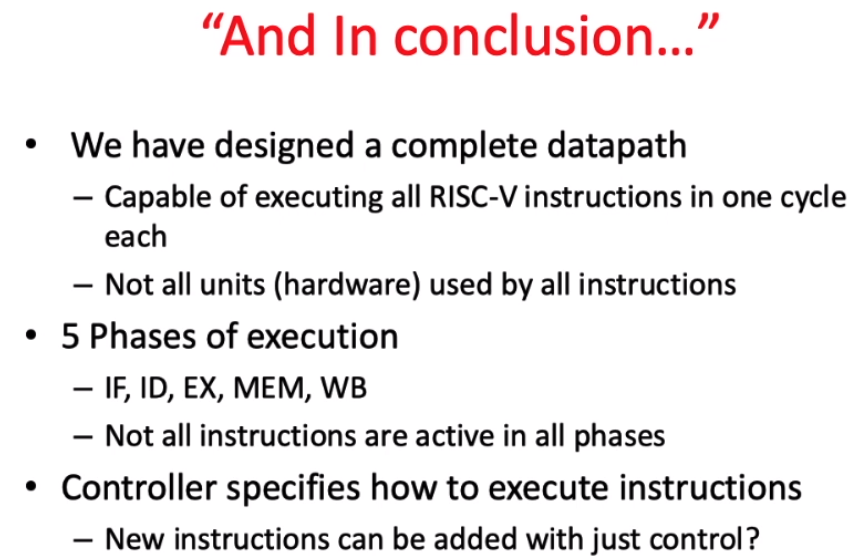

summary

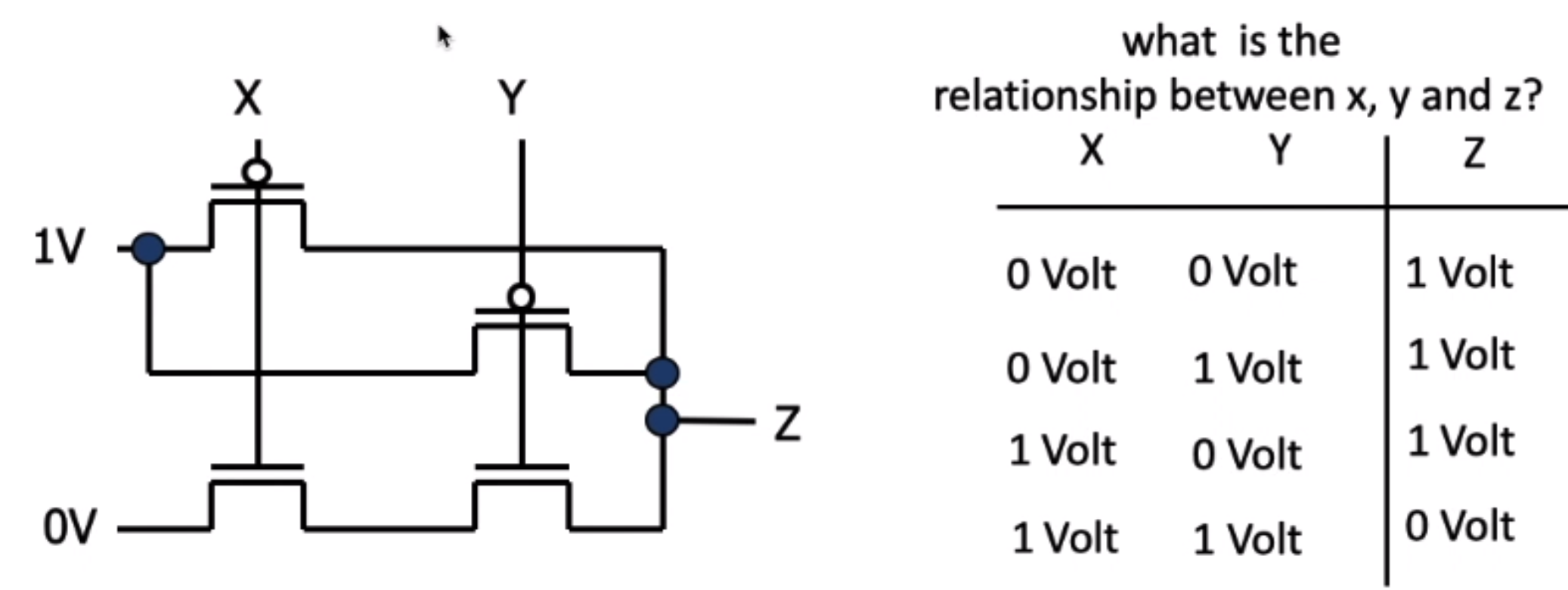

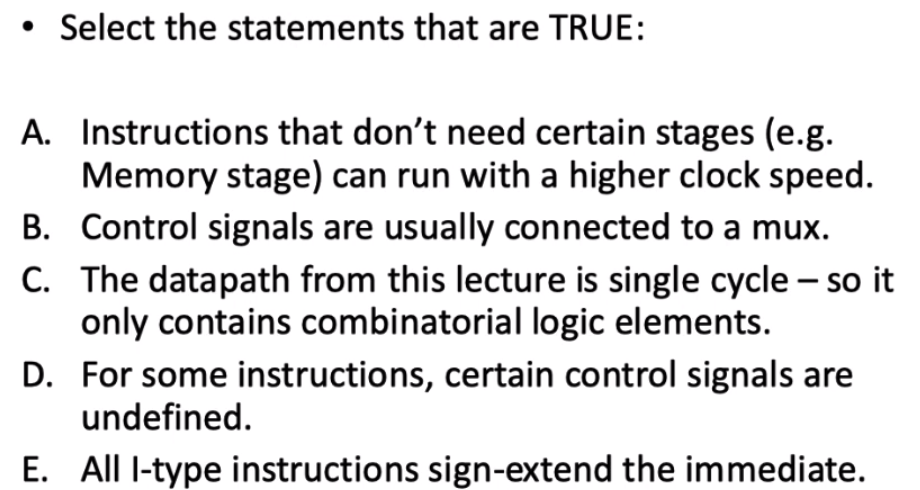

quiz

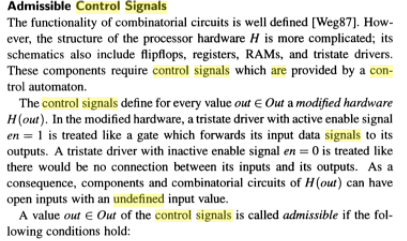

for D

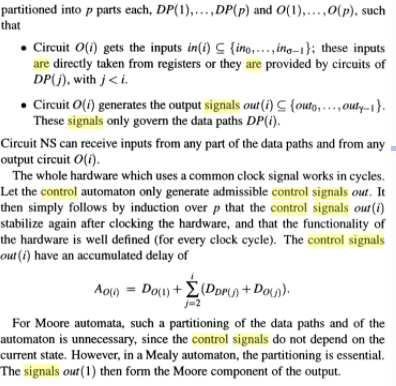

for E

vs

vs