This doc will be maintained in the wiki.

When I was at OSDI this year, I talked with Youngjin Kwon, the lead of the KAIST OS lab, about making record and replay a first-class feature. I challenged the idea of building it on OS-layer abstractions; I think we should instead bring up a brand-new architecture that views the problem from the bottom up. In particular, we don't need to implement an OS at all, because doing so means enduring another explosion of implementation complexity just to match what Linux is already tailored to. The best strategy is to implement it as a library, using eBPF or similar mechanisms to talk to the kernel and leveraging hardware extensions such as the J extension. Everything else is built on top of that library.

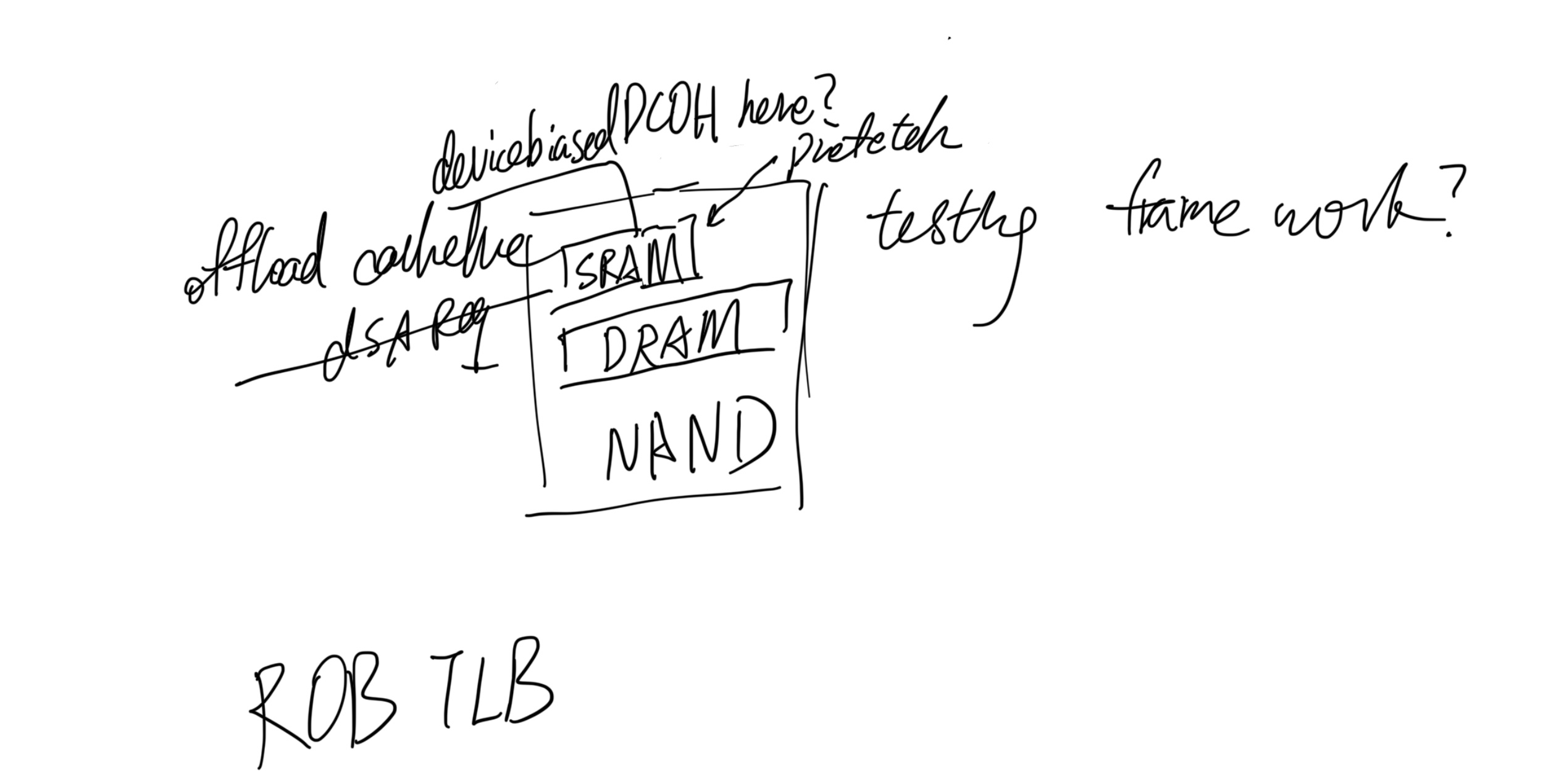

We live in a world full of NoCs whose core counts keep increasing, where a request from the farthest core to a local one can take up to 20 ns (roughly the access range of SRAM), and where accelerators such as GPUs or crypto ASICs sit outside the CPU. Recording and replaying in a performance-preserving way is therefore very important. Remember that debugging a performance bug inside any distributed system is painful. We maintain software epochs to hunt down the bug, or even live-migrate the whole thing to another cluster of computing devices. People try to make things stateless but then run into the problem of metadata explosion. The demand for hardware-accelerated record and replay is high.

- What's the virtualization of the CPU?

  - General register state.

  - C state, P state, and machine state registers like performance counters.

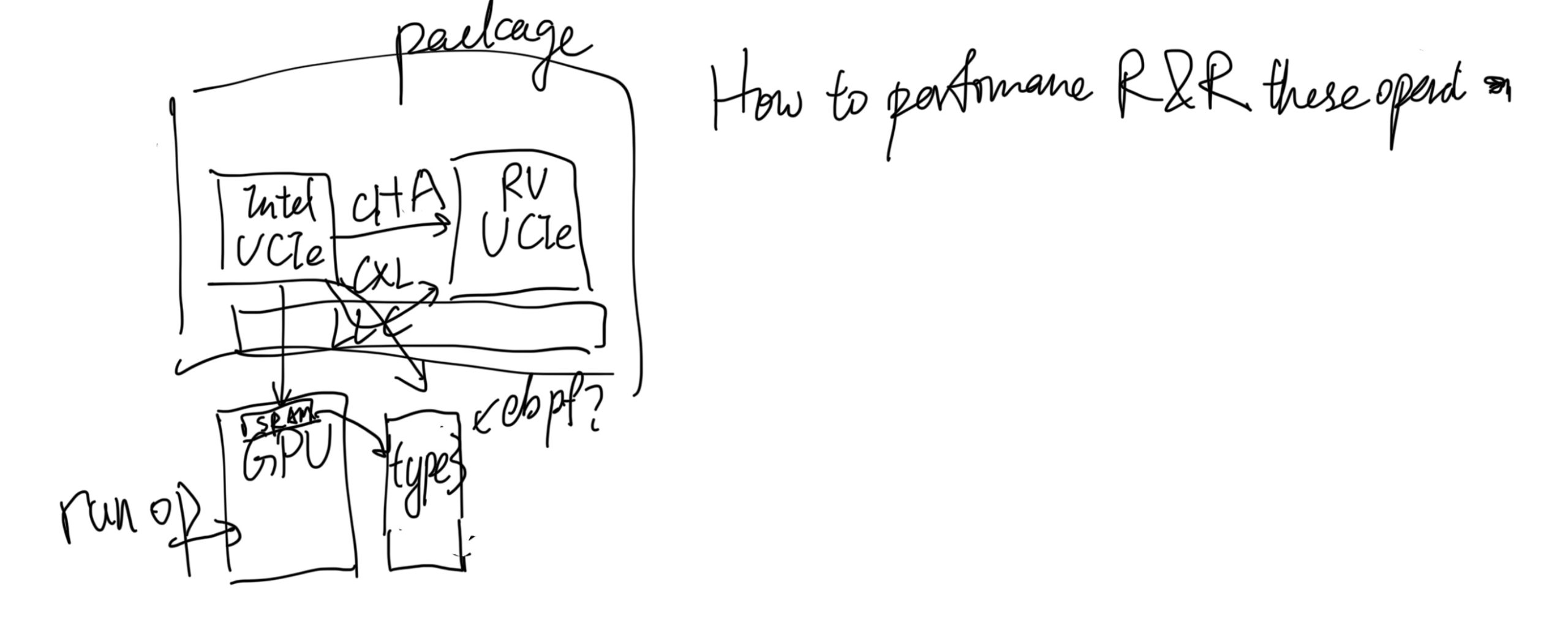

- CPU extension abstraction through record and replay. You normally interact with Intel extensions through drivers that map a certain address to them and return the results after a callback, or you go through an MPX-like VMEXIT/VMENTER path. These are effectively the same as CXL devices because, in a UCIe scenario, every extension is a device that talks to the others through the CXL link. The only difference is the cost model for latency and bandwidth.

- What's the virtualization of memory?

  - MMU - process abstraction

  - Boundary check

- What's the virtualization of CXL devices in terms of the CPU?

  - Requests in the CXL link

- What's the virtualization of CXL devices in terms of outer devices?

  - VFIO

  - SR-IOV

  - DDA
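The questions above amount to listing which state each layer has to expose before it can be recorded. A minimal sketch of that inventory follows, assuming a plain in-memory model; every class and field name here (`CpuState`, `MemoryState`, `CxlRequest`, `Snapshot`, `record`) is hypothetical and chosen only for illustration, not taken from an existing library.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class CpuState:
    """Per-core state: general registers, power states, machine state registers."""
    general_registers: Dict[str, int] = field(default_factory=dict)
    c_state: int = 0
    p_state: int = 0
    perf_counters: Dict[str, int] = field(default_factory=dict)


@dataclass
class MemoryState:
    """MMU view of one process plus the regions used for boundary checks."""
    page_table: Dict[int, int] = field(default_factory=dict)      # virtual page -> physical frame
    regions: List[Tuple[int, int]] = field(default_factory=list)  # (base, size) pairs

    def in_bounds(self, address: int) -> bool:
        # An address is valid if it falls inside any registered region.
        return any(base <= address < base + size for base, size in self.regions)


@dataclass
class CxlRequest:
    """One request observed on the CXL link (assumed shape, not the wire format)."""
    source: str
    target: str
    address: int
    payload: bytes


@dataclass
class Snapshot:
    """Everything that must be captured together: CPU, memory, link, outer devices."""
    cpu: CpuState = field(default_factory=CpuState)
    memory: MemoryState = field(default_factory=MemoryState)
    in_flight: List[CxlRequest] = field(default_factory=list)
    devices: Dict[str, bytes] = field(default_factory=dict)  # opaque per-device state


def record(live: Snapshot) -> Snapshot:
    """Return an independent copy so later changes to `live` do not leak into it."""
    return copy.deepcopy(live)
```

The point of keeping `in_flight` and `devices` next to `cpu` and `memory` is that a snapshot of only the first two would miss requests still travelling on the link and state held by devices assigned through VFIO, SR-IOV, or DDA.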

Now we sit at the intersection of CXL, where NoCs talk to each other in the same way that a GPU talks to a NIC or a NIC talks to a core. I will call this the Slug Architecture, after our lab. Recall that the Von Neumann Architecture says every IO/NIC/outer device sends requests to the CPU, and the CPU handler records the state internally in memory. The Harvard Architecture says every IO/NIC/outer device is independent of, and stateless with respect to, the others. If you snapshot the CPU and memory, you don't necessarily capture the state of everything else. I will therefore record and replay each component plus the link, the CXL fabric, because that is where all the hacks take place.

Say we have SmartNICs and SmartSSDs with growing computing power, alongside NPUs and CPUs. The previous way of computing, in the Von Neumann world, is that the CPU dominates everything. In my view, which is the Slug Architecture built upon the Harvard Architecture, the CPU fetches the results of outer devices and continues, and the NPU fetches the SmartSSD's results and continues. And for vector-clock-like timing recording, we need bus or fabric monitoring.

- Bus monitor

- CXL Address Translation Service

- Possible implementation

  - MVVM: we can leverage the virtualized environment of WASM for core or endpoint abstraction.

  - J extension with mmap'd memory, stalling cycles until the signal is observed.
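The vector-clock-style timing recording mentioned above can be sketched as a monitor that stamps every message it sees on the fabric. This is a minimal sketch under loud assumptions: a single software monitor sees every message, and the names `BusMonitor`, `FabricEvent`, `merge`, and `happens_before` are hypothetical rather than part of any CXL or MVVM API.

```python
from typing import Dict, List, NamedTuple

Clock = Dict[str, int]


def merge(a: Clock, b: Clock) -> Clock:
    """Component-wise maximum of two vector clocks over the same node set."""
    return {node: max(a[node], b[node]) for node in a}


def happens_before(a: Clock, b: Clock) -> bool:
    """True if the event stamped `a` causally precedes the event stamped `b`."""
    return a != b and all(a[node] <= b[node] for node in a)


class FabricEvent(NamedTuple):
    source: str
    target: str
    payload: bytes
    clock: Clock  # receiver's clock right after delivery


class BusMonitor:
    """Assumed monitor that observes every message between fabric endpoints."""

    def __init__(self, nodes: List[str]) -> None:
        self.clocks: Dict[str, Clock] = {n: {m: 0 for m in nodes} for n in nodes}
        self.log: List[FabricEvent] = []

    def observe(self, source: str, target: str, payload: bytes) -> FabricEvent:
        sender = self.clocks[source]
        sender[source] += 1                             # send event on the source
        receiver = merge(self.clocks[target], sender)   # receiver learns sender's history
        receiver[target] += 1                           # receive event on the target
        self.clocks[target] = receiver
        event = FabricEvent(source, target, payload, dict(receiver))
        self.log.append(event)
        return event


monitor = BusMonitor(["cpu", "npu", "ssd", "nic"])
ssd_to_npu = monitor.observe("ssd", "npu", b"block")
nic_to_cpu = monitor.observe("nic", "cpu", b"packet")
npu_to_cpu = monitor.observe("npu", "cpu", b"result")
```

In this usage, `ssd_to_npu` causally precedes `npu_to_cpu`, while `ssd_to_npu` and `nic_to_cpu` are concurrent. A replay only has to preserve the happens-before pairs and is free to reorder concurrent events.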

Why is Ray a dummy idea in this context? Ray just leverages the Von Neumann Architecture and runs headfirst into the architecture wall. It requires every epoch of the GPU to send everything back to memory. We should reduce data-flow transmission and offload control flow instead.
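The argument for moving less data can be made concrete with a back-of-the-envelope cost model. This is only a sketch: it assumes each transfer costs a fixed latency plus size over bandwidth, that the data-flow path crosses the link twice per epoch (device to host memory, then host memory to the next consumer), and that the control-flow path sends one small control message plus one direct device-to-device transfer. The function names and the two-hop versus one-hop shape are assumptions for illustration, not measurements of Ray.

```python
def transfer_time(size_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Time for one transfer: fixed link latency plus serialization time."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


def data_flow_epoch(state_bytes: int, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Full state goes back to host memory, then out again to the next consumer."""
    return 2 * transfer_time(state_bytes, latency_s, bandwidth_bytes_per_s)


def control_flow_epoch(control_bytes: int, state_bytes: int,
                       latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Only a small control message reaches the host; state moves once, device to device."""
    return (transfer_time(control_bytes, latency_s, bandwidth_bytes_per_s)
            + transfer_time(state_bytes, latency_s, bandwidth_bytes_per_s))
```

Under these assumptions both paths pay the link latency twice, so the control-flow path wins exactly when the control message is smaller than the state it avoids moving a second time.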

Why is LegoOS a dummy idea in this context? LegoOS abstracts out a centralized metadata server, which can't scale. If you offload all the operations to remote components and add up the metadata of the MDS, this is also Von Neumann bound. The programming model and OS abstraction are then meaningless, and our work can be entirely a Linux userspace application.