### Explaining the disappointment

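I don't really understand how the ASC reviewers think. On top of that, I have seen enough of the academic climate in parts of China, and when I think about my own GPA, I feel like I am about to run out of places to study.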

### Installation

In short, rpmbuild is the fastest route. If you want to change how it is built, just edit slurm.spec.

rpmbuild -ta slurm*.tar.bz2

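There are a few small pitfalls:

- The hdf5 dependency in the spec is a bit broken. Unpack the tarball with tar -jxvf * and change the configure section to --with-hdf5=no.
- CC must be the system gcc.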

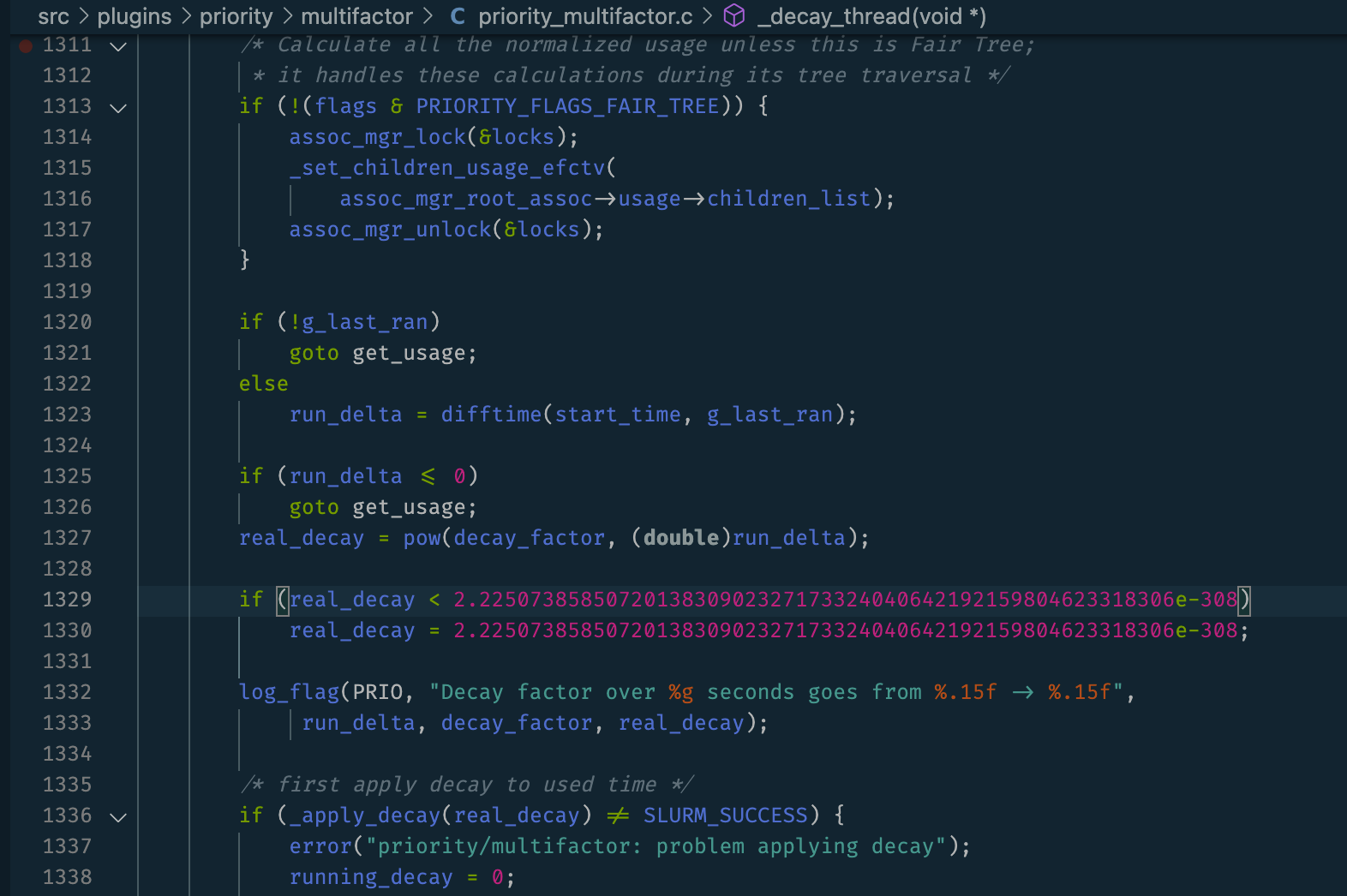

- undefined reference to DRL_MIN: replace DRL_MIN in the source with 2.2250738585072013830902327173324040642192159804623318306e-308.
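The long literal in the last pitfall looks like the smallest positive normal double, which C's float.h exposes as DBL_MIN. A minimal sketch to check that reading, assuming IEEE 754 doubles; whether DRL_MIN in the source was meant to be DBL_MIN is my assumption, not something the build error confirms.

```python
import sys

# The literal used to replace DRL_MIN, copied verbatim from the note above.
LITERAL = "2.2250738585072013830902327173324040642192159804623318306e-308"


def literal_is_smallest_normal_double(text: str = LITERAL) -> bool:
    """Return True if `text` parses to the smallest positive normal double."""
    return float(text) == sys.float_info.min


if __name__ == "__main__":
    print(literal_is_smallest_normal_double())
```

If the check holds on your machine, the substitution is numerically the same as using the standard constant.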

Once the build finishes, go to $HOME/rpmbuild/RPMS/x86_64 and yum install the packages.

In the end, there is no getting around reading the source code.

### Configuration

Create a munge key and put it at /etc/munge/munge.key; every node needs the same key.
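A minimal sketch of generating such a key, assuming a 1024-byte random key is acceptable (a commonly used size, not something this post specifies). The function name `write_munge_key` is hypothetical, and the demo writes to a temporary directory instead of /etc/munge.

```python
import os
import tempfile

KEY_BYTES = 1024  # assumption: 1024 random bytes, a commonly used key size


def write_munge_key(path: str, size: int = KEY_BYTES) -> None:
    """Write `size` random bytes to `path`, readable by the owner only."""
    # O_EXCL refuses to overwrite an existing key by accident.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o400)
    with os.fdopen(fd, "wb") as key_file:
        key_file.write(os.urandom(size))
    os.chmod(path, 0o400)  # enforce the mode regardless of the umask


if __name__ == "__main__":
    # Demo only: the real target would be /etc/munge/munge.key.
    demo_path = os.path.join(tempfile.mkdtemp(), "munge.key")
    write_munge_key(demo_path)
    print(demo_path, os.path.getsize(demo_path))
```

On a real cluster the file also has to be owned by the munge user and copied unchanged to every node.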

The /etc/slurm/slurm.conf on the compute and control nodes must be identical.
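One way to verify this is to compare checksums. The sketch below assumes each node's slurm.conf has already been copied to a local path (for example with scp); the helper names are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Iterable


def config_digest(path: str) -> str:
    """Return the SHA-256 hex digest of one config file."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def configs_match(paths: Iterable[str]) -> bool:
    """Return True if every file in `paths` has the same content."""
    return len({config_digest(p) for p in paths}) <= 1
```

Comparing digests is stricter than Slurm needs, since a whitespace-only difference also counts as a mismatch, but it is the simplest check that cannot miss a real divergence.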

ClusterName=epyc

ControlMachine=epyc.node1

ControlAddr=192.168.100.5

SlurmUser=slurm1

MailProg=/bin/mail

SlurmctldPort=6817

SlurmdPort=6818

AuthType=auth/munge

StateSaveLocation=/var/spool/slurmctld

SlurmdSpoolDir=/var/spool/slurmd

SwitchType=switch/none

MpiDefault=none

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmdPidFile=/var/run/slurmd.pid

ProctrackType=proctrack/linuxproc

#PluginDir=

#FirstJobId=

ReturnToService=0

# TIMERS

SlurmctldTimeout=300

SlurmdTimeout=300

InactiveLimit=0

MinJobAge=300

KillWait=30

Waittime=0

#

# SCHEDULING

SchedulerType=sched/backfill

#SchedulerAuth=

SelectType=select/cons_tres

SelectTypeParameters=CR_Core

#

# LOGGING

SlurmctldDebug=3

SlurmctldLogFile=/var/log/slurmctld.log

SlurmdDebug=3

SlurmdLogFile=/var/log/slurmd.log

JobCompType=jobcomp/none

#JobCompLoc=

#

# ACCOUNTING

#JobAcctGatherType=jobacct_gather/linux

#JobAcctGatherFrequency=30

#

#AccountingStorageType=accounting_storage/slurmdbd

#AccountingStorageHost=

#AccountingStorageLoc=

#AccountingStoragePass=

#AccountingStorageUser=

#

# COMPUTE NODES

NodeName=epyc.node1 NodeAddr=192.168.100.5 CPUs=256 RealMemory=1024 Sockets=2 CoresPerSocket=64 ThreadsPerCore=2 State=IDLE

NodeName=epyc.node2 NodeAddr=192.168.100.6 CPUs=256 RealMemory=1024 Sockets=2 CoresPerSocket=64 ThreadsPerCore=2 State=IDLE

PartitionName=control Nodes=epyc.node1 Default=YES MaxTime=INFINITE State=UP

PartitionName=compute Nodes=epyc.node2 Default=NO MaxTime=INFINITE State=UP
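A quick consistency check for the NodeName lines: CPUs should equal Sockets × CoresPerSocket × ThreadsPerCore. The sketch below is a simplified parser and assumes the exact key spelling used in this config (slurm.conf keys are actually case-insensitive); the function names are hypothetical.

```python
import re
from typing import Dict, List

NODE_LINE = re.compile(r"^NodeName=(\S+)\s+(.*)$")

SAMPLE = """\
NodeName=epyc.node1 NodeAddr=192.168.100.5 CPUs=256 RealMemory=1024 Sockets=2 CoresPerSocket=64 ThreadsPerCore=2 State=IDLE
NodeName=epyc.node2 NodeAddr=192.168.100.6 CPUs=256 RealMemory=1024 Sockets=2 CoresPerSocket=64 ThreadsPerCore=2 State=IDLE
"""


def parse_node_lines(conf_text: str) -> Dict[str, Dict[str, str]]:
    """Map each NodeName to its key=value parameters."""
    nodes: Dict[str, Dict[str, str]] = {}
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        match = NODE_LINE.match(line)
        if match:
            name, rest = match.groups()
            nodes[name] = dict(kv.split("=", 1) for kv in rest.split() if "=" in kv)
    return nodes


def topology_mismatches(nodes: Dict[str, Dict[str, str]]) -> List[str]:
    """Return node names whose CPUs differ from the socket/core/thread product."""
    needed = ("CPUs", "Sockets", "CoresPerSocket", "ThreadsPerCore")
    bad = []
    for name, params in nodes.items():
        if not all(key in params for key in needed):
            continue  # not enough information to check this node
        product = (int(params["Sockets"]) * int(params["CoresPerSocket"])
                   * int(params["ThreadsPerCore"]))
        if int(params["CPUs"]) != product:
            bad.append(name)
    return bad


if __name__ == "__main__":
    print(topology_mismatches(parse_node_lines(SAMPLE)))
```

For the real values on a machine, running slurmd -C on the node prints the hardware layout it detects, which can be compared against these lines.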

Keep an eye on /var/log/slurm* while the daemons run; the logs keep turning up new findings.
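A small sketch for pulling the problem lines out of those logs. It assumes entries look like `[<timestamp>] error: <message>` or `[<timestamp>] fatal: <message>`; adjust the pattern if your build logs differently. The sample lines in the demo are made up for illustration.

```python
import re
from typing import Iterable, Iterator, Tuple

# Assumed line shape: "[<timestamp>] error: <message>" or "... fatal: <message>".
PROBLEM = re.compile(r"^\[[^\]]+\]\s+(error|fatal):\s*(.*)$")


def problem_lines(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (level, message) for every error or fatal log line."""
    for line in lines:
        match = PROBLEM.match(line.rstrip("\n"))
        if match:
            yield match.group(1), match.group(2)


if __name__ == "__main__":
    # Made-up sample lines, not taken from a real log.
    sample = [
        "[2020-01-01T00:00:00.000] error: example problem\n",
        "[2020-01-01T00:00:01.000] example informational line\n",
    ]
    for level, message in problem_lines(sample):
        print(level, message)
```

To scan a real file, pass an open file object, for example `problem_lines(open("/var/log/slurmctld.log"))`.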

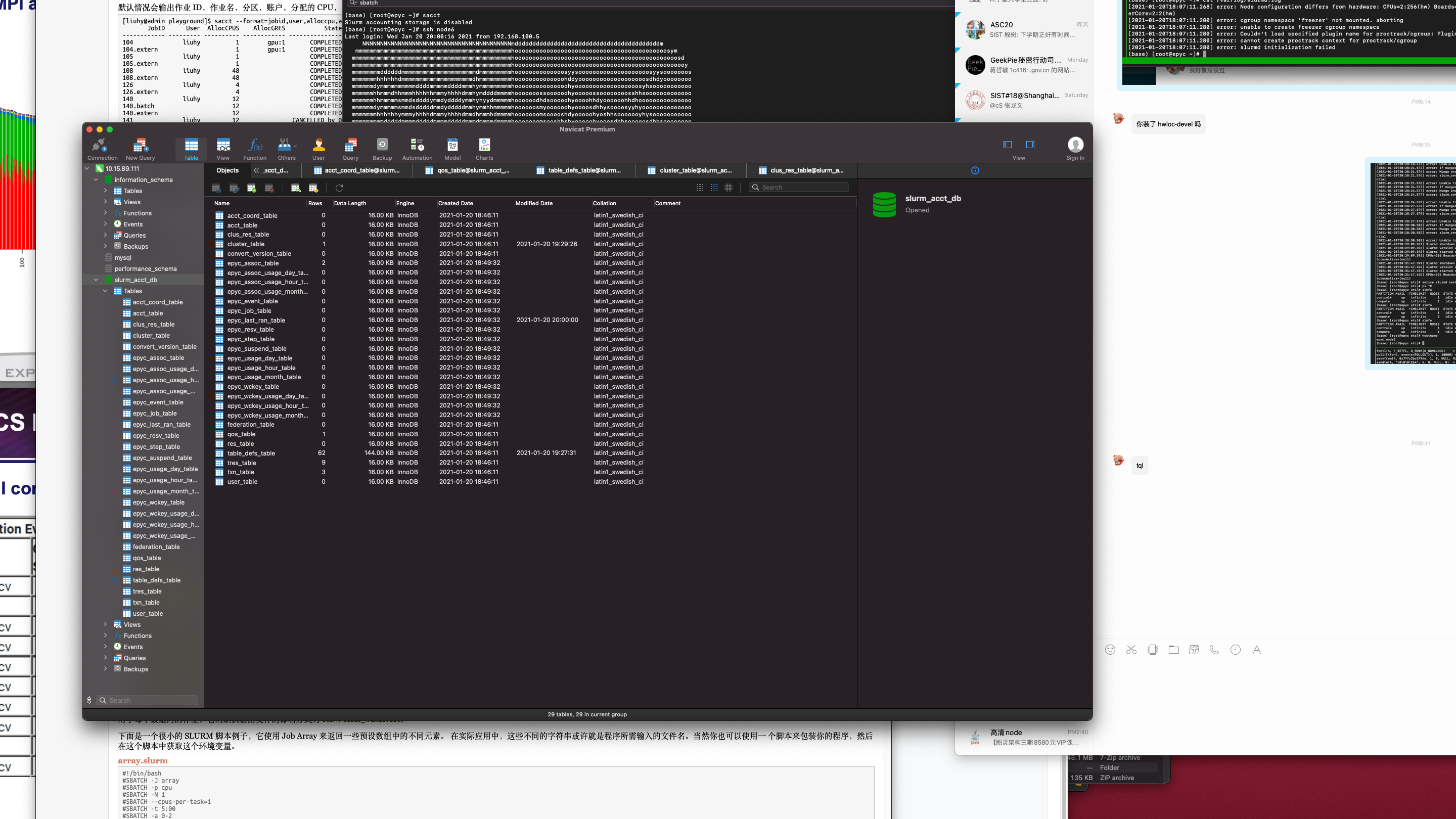

I recommend not enabling slurmdbd, because it is hard to get configured correctly.

sacct does not need it.

### A small QoS experiment based on RDMA traffic

Slurm has load-balancing-based QoS control, and time-series data for RDMA traffic is easy to obtain, so adjusting QoS dynamically becomes straightforward.
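As a rough illustration of how that traffic data can be collected, the sketch below samples the InfiniBand port counters that Linux exposes under /sys/class/infiniband. It assumes device mlx5_0 and port 1 (the ones used in the benchmark commands in this post), relies on port_xmit_data counting 4-byte words, and ignores counter wrap-around. The function names are hypothetical.

```python
import time
from pathlib import Path

WORD_BYTES = 4  # port_xmit_data / port_rcv_data count 4-byte words, not bytes


def counter_path(device: str, port: int, name: str) -> Path:
    """Build the sysfs path of one port counter."""
    return Path("/sys/class/infiniband") / device / "ports" / str(port) / "counters" / name


def read_counter(path: Path) -> int:
    """Read one counter value from sysfs."""
    return int(path.read_text().strip())


def gbits_per_sec(before: int, after: int, seconds: float) -> float:
    """Convert a counter delta (in 4-byte words) to Gb/s."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (after - before) * WORD_BYTES * 8 / seconds / 1e9


def sample_tx_gbits(device: str = "mlx5_0", port: int = 1, interval: float = 1.0) -> float:
    """Measure transmit bandwidth over one sampling interval."""
    path = counter_path(device, port, "port_xmit_data")
    start = time.monotonic()
    first = read_counter(path)
    time.sleep(interval)
    second = read_counter(path)
    return gbits_per_sec(first, second, time.monotonic() - start)


if __name__ == "__main__":
    if counter_path("mlx5_0", 1, "port_xmit_data").exists():
        print(f"{sample_tx_gbits():.2f} Gb/s")
    else:
        print("no mlx5_0 counters on this host")
```

Calling `sample_tx_gbits` in a loop gives the time series; swapping in port_rcv_data gives the receive side.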

$ sudo opensm -g 0x98039b03009fcfd6 -F /etc/opensm/opensm.conf -B

-------------------------------------------------

OpenSM 5.4.0.MLNX20190422.ed81811

Config file is `/etc/opensm/opensm.conf`:

Reading Cached Option File: /etc/opensm/opensm.conf

Loading Cached Option:qos = TRUE

Loading Changed QoS Cached Option:qos_max_vls = 2

Loading Changed QoS Cached Option:qos_high_limit = 255

Loading Changed QoS Cached Option:qos_vlarb_low = 0:64

Loading Changed QoS Cached Option:qos_vlarb_high = 1:192

Loading Changed QoS Cached Option:qos_sl2vl = 0,1

Warning: Cached Option qos_sl2vl: < 16 VLs listed

Command Line Arguments:

Guid <0x98039b03009fcfd6>

Daemon mode

Log File: /var/log/opensm.log

message table affinity

```
$ numactl --cpunodebind=0 ib_write_bw -d mlx5_0 -i 1 --report_gbits -F --sl=0 -D 10
---------------------------------------------------------------------------------------
                    RDMA_Write BW Test
 Dual-port       : OFF          Device         : mlx5_0
 Number of qps   : 1            Transport type : IB
 Connection type : RC           Using SRQ      : OFF
 CQ Moderation   : 100
 Mtu             : 4096[B]
 Link type       : IB
 Max inline data : 0[B]
 rdma_cm QPs     : OFF
 Data ex. method : Ethernet
---------------------------------------------------------------------------------------
 local address: LID 0x8f QPN 0xdd16 PSN 0x25f4a4 RKey 0x0e1848 VAddr 0x002b65b2130000
 remote address: LID 0x8d QPN 0x02c6 PSN 0xdb2c00 RKey 0x17d997 VAddr 0x002b8263ed0000
---------------------------------------------------------------------------------------
 #bytes     #iterations    BW peak[Gb/sec]    BW average[Gb/sec]   MsgRate[Mpps]
 65536      0              0.000000           0.000000             0.000000
---------------------------------------------------------------------------------------
```

Change the affinity:

$ numactl --cpunodebind=1 ib_write_bw -d mlx5_0 -i 1 --report_gbits -F --sl=1 -D 10

Then integrate this into Slurm's QoS control API.
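One possible shape for that integration, sketched with the scontrol command line rather than the C API (my substitution, not the approach this post confirms). The QoS names `rdma_heavy` and `rdma_light` and the 50 Gb/s threshold are hypothetical, and the QoS entries would have to exist already in Slurm's accounting setup. The sketch only builds the command and prints it as a dry run.

```python
from typing import List

# Hypothetical QoS names and threshold, chosen only for illustration.
HEAVY_QOS = "rdma_heavy"
LIGHT_QOS = "rdma_light"
THRESHOLD_GBITS = 50.0


def pick_qos(tx_gbits: float, threshold_gbits: float = THRESHOLD_GBITS) -> str:
    """Choose a QoS name from the measured RDMA transmit bandwidth."""
    return HEAVY_QOS if tx_gbits >= threshold_gbits else LIGHT_QOS


def scontrol_qos_command(job_id: int, qos: str) -> List[str]:
    """Build (but do not run) the scontrol call that changes a job's QoS."""
    return ["scontrol", "update", f"JobId={job_id}", f"QOS={qos}"]


if __name__ == "__main__":
    # Dry run with made-up numbers: job 1234 observed at 72.5 Gb/s.
    print(" ".join(scontrol_qos_command(1234, pick_qos(72.5))))
```

To apply the change for real, the returned list can be passed to `subprocess.run(cmd, check=True)` on a host where scontrol has permission to update the job.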

### Finally, a playbook

```yml

---
slurm_upgrade: no
slurm_roles: []
slurm_partitions: []
slurm_nodes: []
slurm_config_dir: "{{ '/etc/slurm' }}"
slurm_configure_munge: yes

slurmd_service_name: slurmd
slurmctld_service_name: slurmctld
slurmdbd_service_name: slurmdbd

__slurm_user_name: "{{ (slurm_user | default({})).name | default('slurm') }}"
__slurm_group_name: "{{ (slurm_user | default({})).group | default(omit) }}"

__slurm_config_default:
  AuthType: auth/munge
  CryptoType: crypto/munge
  SlurmUser: "{{ __slurm_user_name }}"
  ClusterName: cluster
  ProctrackType: proctrack/linuxproc
  # slurmctld options
  SlurmctldPort: 6817
  SlurmctldLogFile: "{{ '/var/log/slurm/slurmctld.log' }}"
  SlurmctldPidFile: >-
    {{
      '/var/run/slurm/slurmctld.pid'
    }}
  StateSaveLocation: >-
    {{
      '/var/lib/slurm/slurmctld'
    }}
  # slurmd options
  SlurmdPort: 6818
  SlurmdLogFile: "{{ '/var/log/slurm/slurmd.log' }}"
  # Values starting with {{ must be quoted, or YAML reads them as a mapping.
  SlurmdPidFile: "{{ '/var/run/slurm/slurmd.pid' }}"
  SlurmdSpoolDir: "{{ '/var/spool/slurm/slurmd' }}"

__slurm_packages:
  client: [slurm, munge]
  slurmctld: [munge, slurm, slurm-slurmctld]
  slurmd: [munge, slurm, slurm-slurmd]
  slurmdbd: [munge, slurm-slurmdbd]

__slurmdbd_config_default:
  AuthType: auth/munge
  DbdPort: 6819
  SlurmUser: "{{ __slurm_user_name }}"
  LogFile: "{{ '/var/log/slurm/slurmdbd.log' }}"